Fetch performance of various .NET ORM / Data-access frameworks

Update:

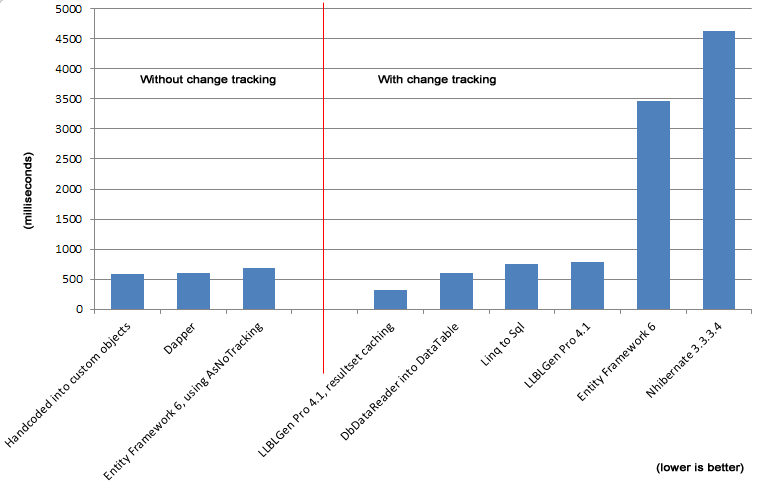

I've added an additional test result, namely for Linq to Sql with change tracking switched off (in the answers, at the bottom of the article). I also have updated the graph so it's now partitioned: the frameworks which do change tracking and the ones which don't do change tracking are now grouped together. DbDataAdapter with DataTable is added to the change tracking set, as a DataTable does change tracking.

Original post:

I thought long and hard about whether I should blog about this, but in the end I decided it would benefit everyone if I posted it and was as open as possible. This article is about fetch performance, namely how quickly a given ORM or data-access framework can fetch a given set of data from a database into a set of objects. The results presented here were obtained using publicly available code, run on a specific setup which is explained below. I'll go deeper into the results as well and will try to counter some obvious remarks which are always made when benchmarks are presented.

I'll stress again: this benchmark solely measures fetch performance, using simple queries which should barely take any time in the DB itself. If your favorite framework gets slaughtered here because it's very slow, and you simply don't believe it, you can run the tests yourself by cloning the repository linked above.

One thing to keep in mind as well is that the actual raw numbers given are specific to the test setup described below, here in my office. That's OK, as the actual raw numbers aren't that important; what's important is how the frameworks relate to one another. If the hardware is very fast, the numbers will be lower, but the slowest framework will still be the slowest and the fastest framework will still be the fastest, with the same relative margins.

The setup

As this benchmark is about measuring fetch performance, the code run to fetch data should not be constrained by the database taking away cycles on the system. Therefore the benchmark obtains the data from a database on the network. Nothing used in the setup is particularly new or high-end, except the code of the frameworks of course. Whether a part is high-end or not isn't really important either, as every tested framework has the same elements to work with: the same network, the same database, the same .NET framework, the same hardware.

Server: Windows XP, SQL Server 2005, running in a VM with 1 CPU and 1.5GB RAM on VMware Server on a Core2Duo box.

Client: Windows 8.0 32bit, .NET 4.5.1 on a Core2Quad @ 2.4GHz with 4GB RAM

Network: 100BASE-T

I admit, that's not state-of-the-art material, but I assure you, it doesn't matter: all frameworks have to work with this setup, and slowness of a framework will still show itself, relative to the others. If framework X is 5 times slower than fetching the data using a DataTable, it will still be 5 times slower than that on the fastest hardware, as the DataTable fetch will also be much faster on the fastest hardware.

The bottlenecks in the frameworks will show their ugly heads anyway, whether the hardware is the latest and greatest or a VM. For the people wondering why I picked XP: it's my old dev partition which I transformed into a VM to keep the complete environment intact for support of older LLBLGen Pro versions, in this case v1 and v2, and it happens to have a good AdventureWorks example DB installed. The query spends barely 2ms in the DB, so the server is more than capable of serving our tests.

As the database I've chosen AdventureWorks on SQL Server. It has a reasonable amount of data to work with, and more than a couple of tables, so it is not a simple demo / Mickey Mouse database but closer to a database used in real-life scenarios.

The test consists of fetching all 31465 rows from a single table, SalesOrderHeader. This table was chosen because it has more than a few columns and a variety of types, so the materializers have to work harder than with a 3-column table with only integer values. It also has a couple of relationships with other tables, which makes it a good candidate to see whether the ORM used has a problem with relationships in the context of a set fetch when entities are mapped onto these tables.

I also chose this table because the amount of data isn't that small but not massive either, so it's a good average test set for performance testing: if there are slow parts in the fetch pipeline of a framework, this kind of entity will put the spotlight on them easily.

All tests were run on .NET 4.5.1 using release builds. No ngen was used, just the default assemblies. The database was warmed up, as was the CLR, with fetches whose results were thrown away. For the averages the fastest and the slowest times were discarded.
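The measurement procedure described above (warm-up fetches that are thrown away, then a number of timed runs whose fastest and slowest times are discarded) can be sketched roughly as follows. This is a Python illustration only, not the benchmark's actual .NET code; the names `trimmed_average` and `benchmark` and the `warmup` parameter are assumptions made for this example.

```python
import time
from statistics import mean


def trimmed_average(timings_ms):
    """Average the timings after dropping the single fastest and slowest run."""
    if len(timings_ms) < 3:
        raise ValueError("need at least three timings to discard two of them")
    ordered = sorted(timings_ms)
    return mean(ordered[1:-1])


def benchmark(fetch, runs=10, warmup=1):
    """Call `fetch` untimed `warmup` times, then time it `runs` times.

    Returns the trimmed average in milliseconds.
    """
    for _ in range(warmup):
        fetch()  # warm-up: the result is thrown away and not timed
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return trimmed_average(timings_ms)
```

Dropping the extremes keeps a single slow first call or one lucky fast run from dominating an average over only ten operations.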

The included ORM / Data-access frameworks

I've included the following ORM / Data-access frameworks and have specified my reasons why:

- LLBLGen Pro v4.1. Reason for inclusion is because I wrote it and this benchmark was started to see how fast my fetch logic improvements were stacking up against the competition.

- Entity Framework v6, latest release build from nuget. Reason for inclusion is because Microsoft presents it as the standard for data-access in .NET so it's fair to include it to see whether it is indeed the standard to beat when it comes to fetch performance.

- NHibernate v3.3.3.4, latest release build from nuget. Reason for inclusion is because still a lot of people use it and it was once the most used ORM on .NET.

- Linq to Sql. Latest version included in .NET 4.5.1. Reason for inclusion is because it was Microsoft's first ORM shipped for .NET and still a go-to data-access framework for many people as it's simple and highly optimized.

- Dapper. Version from October 2013. Reason for inclusion is that it is considered (one of) the fastest Micro-ORMs on .NET.

- DbDataAdapter with DataTable. Latest version included in .NET 4.5.1. Reason for inclusion is because it's a core part of how to do data-access in .NET for a lot of developers still, who work with typed datasets and stored procedures, and also because of its speed of fetching sets of tabular data.

- Hand-coded fetch with DbDataReader and a hand-written POCO. Latest version of the DbDataReader code included in .NET 4.5.1. Reason for inclusion is because it shows how fast hand-written, hand-optimized code is and thus in theory how much performance a full ORM framework spends elsewhere on features, overhead or slow code in general.

The DataTable and hand-written fetches are more or less guidelines to see what to expect and how close the other frameworks come to these two results. They give a good insight into what's possible and thus show that e.g. expecting way faster fetches is not reasonable, as there's not much more to gain than a tight loop around a DbDataReader. In the results you'll see that Dapper did manage to get very close, and in the raw results you'll see it sometimes was faster, which is a remarkable achievement.
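To illustrate what "a tight loop around a reader" with a hand-written plain object looks like, here is a minimal Python sketch. It is an analogy, not the benchmark's C# code: an in-memory SQLite database stands in for SQL Server, only three of the SalesOrderHeader columns are mapped, and the helper `make_demo_db` with its generated rows is invented purely for the example.

```python
import sqlite3


class SalesOrderHeader:
    """Hand-written plain object; only three illustrative columns are mapped."""

    __slots__ = ("sales_order_id", "order_date", "total_due")

    def __init__(self, sales_order_id, order_date, total_due):
        self.sales_order_id = sales_order_id
        self.order_date = order_date
        self.total_due = total_due


def make_demo_db(row_count):
    """Create an in-memory stand-in table filled with made-up rows."""
    connection = sqlite3.connect(":memory:")
    connection.execute(
        "CREATE TABLE SalesOrderHeader ("
        "SalesOrderID INTEGER PRIMARY KEY, OrderDate TEXT, TotalDue REAL)"
    )
    connection.executemany(
        "INSERT INTO SalesOrderHeader VALUES (?, ?, ?)",
        ((i, "2013-10-01", i * 1.5) for i in range(1, row_count + 1)),
    )
    return connection


def fetch_all(connection):
    """One query, one loop: read each row and copy its values into a new object."""
    cursor = connection.execute(
        "SELECT SalesOrderID, OrderDate, TotalDue FROM SalesOrderHeader"
    )
    orders = []
    for sales_order_id, order_date, total_due in cursor:
        orders.append(SalesOrderHeader(sales_order_id, order_date, total_due))
    return orders
```

There is nothing per row besides reading the values and constructing the object, which is why this kind of code serves as the baseline the other frameworks are compared against.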

All used ORM frameworks have the full AdventureWorks model mapped as entities, all tables have a corresponding entity class mapped and all relationships are modeled as well. No inheritance is modeled, as not all of the frameworks support it (there are some inheritance relationships present in the AdventureWorks schema) and the used entity isn't in an inheritance hierarchy so it didn't make much sense to add it.

The results

The raw results are located here. I've replicated the average times below in tabular and graph form. Each operation consisted of fetching 31465 entities from the database with one query and materializing these into individual objects which were stored in a collection. The average times given are the averages of 10 operations per framework, where the slowest and fastest operations were ignored.

| ORM / Data-access framework | Average time (ms) |
|---|---|
| Entity Framework v6 | 3470.22 |
| Entity Framework v6 with AsNoTracking() | 689.75 |
| Linq to Sql | 746.88 |
| LLBLGen Pro v4.1 | 786.89 |
| LLBLGen Pro v4.1 with resultset caching | 311.50 |
| Dapper | 600.00 |
| NHibernate 3.3.3.4 | 4635.00 |
| Hand-coded into custom objects | 587.00 |
| DbDataAdapter into DataTable | 598.38 |

The results are not pretty, at least not for Entity Framework and especially not for NHibernate. The micro-ORM Dapper and the DataTable / hand-written fetch code are very fast, as expected; all ORMs are slower than those three. The resultset caching result, which is by far the fastest, comes from fetching the resultset once and then re-materializing the objects from that set: it still loops through the raw rows obtained from the database to materialize new entities, it just doesn't have to go to the DB again and so doesn't suffer from the network / DB latency.
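The idea behind resultset caching as described here (keep the raw rows, but build fresh objects on every fetch) can be sketched as below. This is a simplified Python illustration under my own assumptions, not LLBLGen Pro's implementation: the class name `ResultsetCache`, the use of the SQL text as the cache key, and the absence of any expiry are all choices made for the example.

```python
class ResultsetCache:
    """Caches raw rows per query; entities are re-materialized on every fetch."""

    def __init__(self, execute_query):
        # execute_query: callable taking SQL text and returning an iterable of row tuples
        self._execute_query = execute_query
        self._rows_by_query = {}

    def fetch(self, sql, materialize):
        rows = self._rows_by_query.get(sql)
        if rows is None:
            # First fetch: go to the database and keep the raw rows.
            rows = list(self._execute_query(sql))
            self._rows_by_query[sql] = rows
        # Every fetch: still loop over all rows and create new objects.
        return [materialize(row) for row in rows]


# Usage with a fake database call that counts how often it is hit.
db_hits = []


def fake_execute(sql):
    db_hits.append(sql)
    return [(1, "first"), (2, "second")]


cache = ResultsetCache(fake_execute)
first_fetch = cache.fetch("SELECT * FROM SalesOrderHeader", dict.fromkeys)
second_fetch = cache.fetch("SELECT * FROM SalesOrderHeader", dict.fromkeys)
```

The second call skips the round trip to the database but performs the same materialization work, which is why the cached number in the table isolates the cost of materialization from network and DB latency.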

A couple of things stand out. I'll address them below.

- NHibernate is extremely slow with fetching a set of entities. When I add just one entity type to the model (SalesOrderHeader) instead of the full model, the average is around 1500ms, so there's definitely something going on with respect to having a model with relationships like AdventureWorks and fetching entities in NHibernate. I did a profile run to see whether I had made a terrible mistake somewhere or whether the NHibernate team had messed up big time. The short answer is: it's not me. See below for more details.

- Entity Framework is also extremely slow. I reported this to the Entity Framework team some time ago. They have acknowledged it and have created a workitem for this. It turns out that this issue is also present in Entity Framework 5. When there's just 1 entity in the model, Entity Framework manages to get close to 1100ms average, so similar to NHibernate there's something going on with respect to relationships in a model and fetching. The AsNoTracking call bypasses all benefits of the ORM and simply converts the rows into objects, like Dapper does, and shows what potential speed Entity Framework has under the hood, if it doesn't have to do anything expected from a good ORM.

- Linq to Sql and LLBLGen Pro are close. I am very pleased with this result, considering the amount of work I've spent on optimizing the query pipeline in the past years. I'm also pleased because the number of features our framework has doesn't make it much slower than a framework which offers much less, like Linq to Sql. To materialize an entity, LLBLGen Pro has to do the following for each row:

- call into the Dependency Injection sub system to inject objects

- call into a present authorizer to test whether the fetch of this row is allowed

- call into a present auditor to log the fetch of a given row

- call into the uniquing sub system to make sure no duplicates are materialized

- call into the conversion sub system to convert any db values to specific .NET types if type conversions are defined

- call into the string cache to avoid duplicate instances of the same string in memory, which prevents memory bloat

- create a new entity class instance and store the values of the entity into it.

What's also interesting (not shown) is that if there's just one entity in the Linq to Sql model, it's very close to Dapper's speed. So a bigger model also causes a speed penalty there, however not as big as with Entity Framework or NHibernate.

- With a somewhat slow connection to the DB, it can be efficient to cache a resultset locally. With faster network connections, this is of course mitigated to a certain point.
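To make the per-row work listed above for LLBLGen Pro more concrete, here is a rough Python sketch of a materializer with a few such steps: an optional authorizer and auditor, uniquing on a key column, type conversion, and a string cache. It is an illustration of the general technique only; the class `Materializer`, its parameters, and the order of the steps are my assumptions, and the dependency injection step is left out.

```python
from collections import namedtuple


class Materializer:
    """Turns raw rows into entities, doing several per-row and per-field steps."""

    def __init__(self, entity_factory, key_index, converters=None,
                 authorizer=None, auditor=None):
        self._entity_factory = entity_factory
        self._key_index = key_index
        self._converters = converters or {}   # column index -> conversion function
        self._authorizer = authorizer          # row -> bool, or None
        self._auditor = auditor                # row -> None, or None
        self._entities_by_key = {}             # uniquing: one entity per key
        self._string_cache = {}                # one instance per distinct string

    def materialize(self, row):
        if self._authorizer is not None and not self._authorizer(row):
            return None                        # fetch of this row is not allowed
        if self._auditor is not None:
            self._auditor(row)                 # log the fetch of this row
        key = row[self._key_index]
        existing = self._entities_by_key.get(key)
        if existing is not None:
            return existing                    # no duplicate entity for the same key
        values = []
        for index, value in enumerate(row):
            convert = self._converters.get(index)
            if convert is not None:
                value = convert(value)
            if isinstance(value, str):
                # Reuse the first instance seen of an equal string.
                value = self._string_cache.setdefault(value, value)
            values.append(value)
        entity = self._entity_factory(*values)
        self._entities_by_key[key] = entity
        return entity


# Usage: two rows share a status string, and row 1 is seen twice.
Order = namedtuple("Order", "id status total")
audited = []
materializer = Materializer(Order, key_index=0, converters={2: float},
                            auditor=audited.append)
status_a = "".join(["Ship", "ped"])   # two equal but distinct string objects
status_b = "".join(["Ship", "ped"])
order_1 = materializer.materialize((1, status_a, "10.5"))
order_2 = materializer.materialize((2, status_b, "3"))
order_1_again = materializer.materialize((1, status_a, "10.5"))
```

Every one of these checks runs once per row or once per field, so even cheap steps add up over 31465 rows; that is the trade-off between features and raw fetch speed discussed in this article.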

Answers to some expected remarks / questions

Below I'll try to answer as many questions as you might have after reading this, as well as some remarks which are to be expected. This isn't the first benchmark I've done in the many years I've been writing ORM frameworks.

"You're biased!"

The framework I work on full time is indeed included in the test. However, I didn't skew the test to make it win: I picked a reasonable scenario which will show performance bottlenecks in code used in a lot of LoB apps every day. I also disclosed the full sourcecode archive on github so you can see for yourself what I used for testing and whether I made terrible mistakes.

"Would you have published the results if your own framework was dead last?"

I don't think so; I'll be honest about that. But perhaps another person would have. This benchmark was started to see whether a prototype I made had a chance to be successful; LLBLGen Pro v3.5 code was much slower than the results offered by v4.1 (around 1280ms on average), and I was pleased with the work done to make fetching data much faster in v4.0. It took months to get there, but the changes were worth it: going from 1280ms on average to 790ms on average was better than I'd hoped for. To show the world what my hard work had resulted in, I published these results, to inform database-using developers that reality is likely different from what they think it is.

"Why this particular test? No-one else tests this"

This test is nothing new. In the earlier days of .NET ORMs we used this kind of fetch benchmark all the time, especially because earlier on Microsoft pushed typed datasets and stored procedures, which leaned a lot on the tabular data fetch performance of the DbDataAdapter. To get close to that performance as an ORM, one had to optimize the pipeline a lot. The results show that the Entity Framework and NHibernate teams have gotten lazy in this respect, in that they never tested the fetch performance of their frameworks in this way. Solid fetch performance is essential for an ORM to be taken seriously by a developer, as every bottleneck in an ORM will be a potential bottleneck in the application using it. Performance testing is therefore key for good quality control. Personally I'm very surprised that the two biggest ORMs on .NET perform so poorly.

"I never fetch that much entities, so it doesn't apply to me"

Actually it does. While you might not fetch 31000 entities in one go, 10000 fetches of 3 entities each give you the same performance degradation: if your application does a lot of fetching, every fetch slows the application down if the fetch itself is slow. If this happens in an application which relies on good data-access performance, it can bog down the application under load if the data-access performance is sub-par or downright poor, when it didn't have to be.

"Linq to Sql also has a mode where you can switch off change tracking"

The result for Linq to Sql with change tracking disabled was measured after the rest of the article had already been written, and it would have been unfair to add it as-is without updating all the other numbers again. So instead I'll briefly mention the numbers of a new run here: Linq to Sql normal: 738.63ms; Linq to Sql without change tracking: 649.25ms.

"What should I learn from these results?"

Firstly you can see what the relative performance is of framework X compared to framework Y in this particular situation of fetching a set of entities. Secondly, you can see that there is a hard limit on what performance you can expect from a data-access framework: reading data into entity objects takes time, no matter what you use. This also means that if your application needs to read data faster than e.g. Dapper can give it to you, you likely will not be able to solve that problem in a regular way. Thirdly, if your application feels 'slow' when obtaining data from the database while you're using a framework which is actually pretty fast with fetching data, it might very well be the slowness is elsewhere and not in the data-access / ORM framework used.

"I wrote my own ORM, it's likely faster"

I've been doing this for a very long time now and I've dealt with many developers who wrote ORMs in the past decade or so. Unless you've invested a lot of time in optimizing the fetch pipeline, chances are you made all the rookie mistakes we all made in the beginning. At least that's what I've learned and seen in the past 10-12 years of writing ORMs for .NET. But if you're confident, fork the github code and add your solution to it so others can see how quick your code is.

"You didn't include caching for NHibernate"

I indeed didn't as the test wasn't to show how fast caching is in various systems, but how fast entity materialization is. An entity cache could help with NHibernate but it wouldn't make the slow performance of fetching entities any less slow. I included the resultset caching results of LLBLGen Pro to show how fast it is in this context as it does materialize entities again, but it's not the main focus of the benchmark.

"NHibernate is very fast, you messed up somewhere"

Well, I knew they weren't the fastest, but I too didn't expect them to be this slow. So to be certain it wasn't me who made a terrible mistake somewhere, I profiled the NHibernate query execution using dotTrace 4.5.1. One look at the profile results explains it: the code internally is so over-engineered that there's no real overview of what's going on, as it calls code all over the place and it calls a lot of methods a lot of times: there are 31K entities in this set, yet it manages to call a rather expensive method over half a million times. Here's a screenshot of the trace. It's a 'warm call', so pure fetching, with no mapping file import being done anymore.

Having high performance means you have to engineer for that too. It doesn't come out of the box. If you never profile your code, you don't know what's going on at runtime, especially with code that's so fragmented in methods all over the place like with NHibernate. If someone from the NHibernate team wants the dotTrace profile snapshot file (15MB), let me know.

"You made a mistake with framework X"

Please show me what I did wrong and send me a pull request so I can correct it.

"Did you take into account start-up times of framework X?"

Yes, the slowest and fastest time for any framework are ignored, so startup time isn't taken into account in the averages.

"Why didn't you include framework Y? It's way faster!"

I tried to include the most used frameworks, and there are not a lot left anymore. I could have added some more but I leave that to the owners of the frameworks to do so via the github repository.

"Isn't it true that the less features you have, the faster you can be?"

Fetching entities fast is about a couple of things: creating an object to store data in should be very fast, and overhead per row and per field value should be very low. It's tricky to get all three down to the bare minimum in generic code, but one way to do it is to do very little or nothing at all per row or per field. As soon as you add more features, each row is affected and this adds up, which shows in the results when fetching many rows. So having fewer features means less overhead per row, which means faster fetch speeds. This doesn't mean a fast framework is feature-less, though it might be. I know that I will never get LLBLGen Pro as fast as Dapper, simply because Dapper does far fewer things than LLBLGen Pro does with each entity materialization cycle, but I try.

"Lies, damn lies and benchmarks"

True, benchmarks often turn out to be equal to lies or marketing if they're given without a context, without all the surrounding information about what exactly is benchmarked, and why. As I mentioned earlier, the results in this test are not usable without the context in which they were created: it's a single test, highlighting a single feature, performed on a single setup. The numbers only have meaning with respect to that setup. The relative performance differences, however, are usable outside the setup: Entity Framework is roughly 4.4 times slower than LLBLGen Pro when it comes to fetching sets of entities (3470ms versus 787ms in the table above), but how slow exactly in milliseconds depends on the particular setup your application runs on.
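The relative differences can be computed directly from the averages in the results table. The short Python sketch below does this for a subset of the rows, with LLBLGen Pro v4.1 as the baseline; the function name `relative_to` is just a name chosen for this example.

```python
# Average fetch times in milliseconds, copied from the results table.
averages_ms = {
    "Entity Framework v6": 3470.22,
    "Entity Framework v6 with AsNoTracking()": 689.75,
    "Linq to Sql": 746.88,
    "LLBLGen Pro v4.1": 786.89,
    "Dapper": 600.00,
    "NHibernate 3.3.3.4": 4635.00,
    "Hand-coded into custom objects": 587.00,
}


def relative_to(baseline, averages):
    """Return each framework's average time as a multiple of the baseline's."""
    baseline_ms = averages[baseline]
    return {name: ms / baseline_ms for name, ms in averages.items()}


ratios = relative_to("LLBLGen Pro v4.1", averages_ms)
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{name}: {ratio:.2f}x")
```

A ratio above 1 means slower than the baseline, below 1 means faster. These ratios, not the millisecond values, are the part of the benchmark that is meant to carry over to other setups.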

I hope this all helps open developers' eyes to how fast some of the frameworks out there really are with respect to entity fetch operations. If you have more questions, please post them in the replies so I can answer them.