Contents tagged with Agile

-

Testing Castle Windsor Mappings Part Deux

In my original post on testing Windsor Container mappings, I posted a spec to run whenever you are using Castle Windsor in your project. It basically ran through your configuration file and ensured all the mappings worked. This was meant to be a safety net to catch a rename or namespace move in the domain (which wouldn't update the configuration file).

It worked pretty well and has helped us catch silly errors, but we were getting pain with mappings on classes like this:

    public partial class FinderGrid<T> : UserControl, IFinderGrid<T> where T : Entity
    {
    }

Generics are a funny thing, so you get an error like this:

    System.ArgumentException: GenericArguments[0], 'T', on 'UserInterface.FinderGrid`1[T]' violates the constraint of type 'T'.
     ---> System.TypeLoadException: GenericArguments[0], 'T', on 'UserInterface.FinderGrid`1[T]' violates the constraint of type parameter 'T'.
       at System.RuntimeTypeHandle._Instantiate(RuntimeTypeHandle[] inst)
       at System.RuntimeTypeHandle.Instantiate(RuntimeTypeHandle[] inst)
       at System.RuntimeType.MakeGenericType(Type[] instantiation)
       --- End of inner exception stack trace ---
       at System.RuntimeType.ValidateGenericArguments(MemberInfo definition, Type[] genericArguments, Exception e)
       at System.RuntimeType.MakeGenericType(Type[] instantiation)
       at Castle.MicroKernel.Handlers.DefaultGenericHandler.Resolve(CreationContext context)

We put an exception handler around the test, but it was butt ugly. After some posting on the Castle mailing list and discussion with a few people I came up with a way to handle any generic mapping you have. Here's the updated spec that will deal with (hopefully) any mapping:

    [TestFixture]
    public class When_starting_the_application : Spec
    {
        [Test]
        public void Verify_Windsor_Container_mapping_configuration_is_correct()
        {
            IWindsorContainer container = new WindsorContainer("castle.xml");

            foreach (IHandler handler in container.Kernel.GetAssignableHandlers(typeof(object)))
            {
                if (handler is DefaultGenericHandler)
                {
                    // Open generic service: close it over each constraint type and resolve that.
                    Type[] genericArguments = handler.ComponentModel.Service.GetGenericArguments();
                    foreach (Type genericArgument in genericArguments)
                    {
                        Type[] genericParameterConstraints = genericArgument.GetGenericParameterConstraints();
                        foreach (Type genericParameterConstraint in genericParameterConstraints)
                        {
                            container.Resolve(
                                handler.ComponentModel.Service.MakeGenericType(genericParameterConstraint));
                        }
                    }
                }
                else
                {
                    // Ordinary service: resolve it directly.
                    container.Resolve(handler.ComponentModel.Service);
                }
            }
        }
    }

This will handle any mapping with constraints, and the name isn't tied to your test (originally I found one that worked, but I had to specify the generic type when trying to set the constraint). This is a .NET 2.0 solution and not as pretty as it could be with LINQ and 3.5, but it works.
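To see the reflection calls in isolation, here is a minimal sketch with no Castle dependency. The `Entity`, `IFinderGrid<T>` and `FinderGrid<T>` types are bare stand-ins for the ones mentioned above, and `CloseOverConstraints` is a hypothetical helper, not part of the spec. It is a slight variant: it picks the first constraint of each type parameter (falling back to `object`), which is an assumption that will not hold for special constraints such as `new()` or `struct`.

```csharp
using System;

// Stand-ins for the types in the post; the real ones live in the application.
public abstract class Entity { }
public interface IFinderGrid<T> where T : Entity { }
public class FinderGrid<T> : IFinderGrid<T> where T : Entity { }

public static class GenericConstraintDemo
{
    // Hypothetical helper: closes an open generic type by using the first
    // constraint of each type parameter as its type argument.
    public static Type CloseOverConstraints(Type openGeneric)
    {
        Type[] parameters = openGeneric.GetGenericArguments();
        Type[] arguments = new Type[parameters.Length];
        for (int i = 0; i < parameters.Length; i++)
        {
            Type[] constraints = parameters[i].GetGenericParameterConstraints();
            // Assumption: an unconstrained parameter can be closed over object.
            arguments[i] = constraints.Length > 0 ? constraints[0] : typeof(object);
        }
        return openGeneric.MakeGenericType(arguments);
    }

    public static void Main()
    {
        Type open = typeof(FinderGrid<>);

        // Closing over the constraint type (Entity) satisfies "where T : Entity".
        Type closed = CloseOverConstraints(open);
        Console.WriteLine(closed);

        // Closing over a type that violates the constraint throws ArgumentException,
        // the same exception type as in the stack trace above.
        try
        {
            open.MakeGenericType(typeof(string));
        }
        catch (ArgumentException ex)
        {
            Console.WriteLine("Constraint violated: " + ex.Message);
        }
    }
}
```

The point is that the constraint type itself is always a legal type argument, so the spec never needs to know about a concrete `Entity` subclass.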

Hope this helps!

P.S. Here's a silly creepy version for those that dig anonymous delegates to the extreme. Lambdas would just make most of this syntax sugar go away:

    [Test]
    public void Verify_Windsor_Container_mapping_configuration_is_correct()
    {
        IWindsorContainer container = new WindsorContainer("castle.xml");

        foreach (IHandler handler in container.Kernel.GetAssignableHandlers(typeof(object)))
        {
            if (handler is DefaultGenericHandler)
            {
                new List<Type>(handler.ComponentModel.Service.GetGenericArguments())
                    .ForEach(delegate(Type argument)
                    {
                        new List<Type>(argument.GetGenericParameterConstraints())
                            .ForEach(delegate(Type constraint)
                            {
                                container.Resolve(handler.ComponentModel.Service.MakeGenericType(constraint));
                            });
                    });
            }
            else
            {
                container.Resolve(handler.ComponentModel.Service);
            }
        }
    }

-

ADO.NET Entity Framework Vote of No Confidence

Over the past year or two, I've been a casual observer of the Entity Framework coming out of Microsoft. Being an ALT.NET guy, the world tends to revolve around NHibernate for me, so I've already got an excellent OR/M tool in my toolset. One of the big issues with EF that we've recognized is the general direction Microsoft has taken with it, following a data-centric model rather than an object one. One of the first principles I picked up when I started doing OO programming (back in the Smalltalk days in the 80s) was that objects are defined by behavior, not their properties. Yes, it's true that objects are pretty thin without data, but data is not my centre of the universe.

What we see on the horizon is a new breed of VB6 drag-n-drop programmers embracing EF as the next Messiah. We see a new generation of developers focused on mapping their data models and missing the target of architecting and constructing well designed systems. As a result, the community has put together an open letter to Microsoft outlining these concerns. The letter outlines the deficiencies in the EF specifically related to the values we see as solid working practices. It's late to the game and Microsoft probably isn't going to make any sweeping changes so close to the release, so don't expect any big short-term changes. However, as Dave Laribee says, it's good to be explicit and professional about criticisms, and this is one of those.

What's interesting too is that Microsoft has put together what they call the Data Programmability Advisory Council, a team of notable people including Eric Evans, Martin Fowler, and Jimmy Nilsson (all very non-data-centric guys in their own right). I'm not quite sure what they will do or how they fit into the entire fray but it might be a step in the right direction (whatever that direction may be).

You can view the entire letter here where you can sign at the bottom to show your support and you can view the list of signatories here.

-

The First Spec You Should Write When Using Castle

Thought this might be useful. On a new project where you're using the Castle Windsor container for Dependency Injection, this is a handy spec to have:

    [TestFixture]
    public class When_starting_the_application : Spec
    {
        [Test]
        public void verify_Castle_Windsor_mappings_are_correct()
        {
            IWindsorContainer container = new WindsorContainer("castle.xml");

            foreach (IHandler handler in container.Kernel.GetAssignableHandlers(typeof(object)))
            {
                container.Resolve(handler.ComponentModel.Service);
            }
        }
    }

It won't catch someone forgetting to add something to your configuration, but this way anytime someone adds a type to the configuration this will verify the mapping is right. Very often I move things around in the domain into different namespaces and forget to update Castle. I suppose you *could* use reflection on your assembly as another test and verify the mapping is there, but not every type in the system is going to be dependency injected so that's probably not feasible.

Thanks to the Castle guys for helping me get the simplest syntax going for this.

-

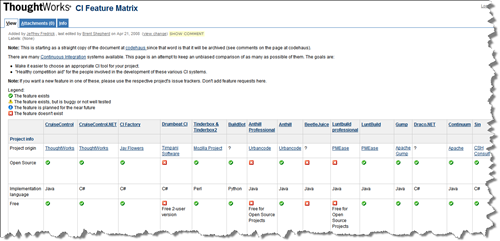

Continuous Integration Feature Matrix

This is just a post to direct people to the CI Feature Matrix that ThoughtWorks maintains. If you're up in the air about choosing a CI system, then this is the page for you. They maintain an unbiased view (their words, not mine) of all the CI systems out there (and there are a lot of them). So if you're wondering or looking for something, check it out.

Note: TW says the page is unbiased and I believe them. However, when anyone puts together a matrix like this, they sometimes tend to include features that only *their* system has (so they can put a checkmark in that column) and that no other system can measure up to. I don't feel TW did this here, but I do want to point it out so you can make your own unbiased comparison.

If anything, the matrix can be used as an idea generator. Wherever you see a red mark and the project is open source, maybe it's time to sit down and write a plugin/patch/add-on and contribute! Think about it and give it some consideration; it would only make these projects even better with more capabilities.

-

First Looks: Mingle 2.0

I have Mingle 2.0 upgraded in our test environment and have been going through the new features, upgrade woes, and some remarks from the peanut gallery. Here's the rundown on this Agile planning tool.

Upgrading

Upgrading was a bit of a pain. To do the test I backed up our Mingle db, restored it to a sandbox database on the same MySQL instance, and installed a clean copy of Mingle 1.1. Then I upgraded to 2.0 over top of it (once 1.1 was working with the new db).

Mingle didn't know what port I originally installed on (my test install was on 888) and defaults to 8080. This can be confusing to a user who's installing an upgrade and didn't perform the original install or doesn't know what port was originally used.

I have Unlocker running and it briefly kicked in on some .rb file (it flashed by so quickly you couldn't tell what it was). That didn't seem to be a problem, but the Mingle upgrade killed off a whole bunch of processes running on my desktop. For example WinZip, Unlocker, and my anti-virus were all killed off during the install (which might explain the brief flash of Unlocker as it went down). I know it's "traditional" to shut down all running processes during an install of something new, but I think it's a little over the top to shut them down for you (especially since it did it without warning).

After the install browsing to localhost:888 failed. I checked the logs and found it had a problem trying to add a column to the db that was already there. After a 10 minute restore/reset (with a couple of well-placed reboots after each install) the install finally worked.

It was painful and luckily I was working on a test database. For sure I recommend doing a backup and upgrade over a temporary working database. Then if all goes well, backup your production db and do the upgrade (backing out if it doesn't work). Don't get too torqued if the browse to the instance doesn't work after the upgrade, just reboot the server (I know, pretty severe) and it should be all fine when you get back.

All in all, the upgrade wasn't horrible. You'll probably want/need to go in and make some mass changes to cards and stories in play in order to leverage the new features but it's fairly quick and painless with the Web 2.0 UI they've built on.

For sure check out the Mingle forums on upgrading/installing as there are a few people trying it on different systems and experiencing various pain points.

New Project Creation

The new project creation screen is basically the same. They have upgraded the Agile hybrid, Scrum, and XP templates to version 2.0 (but only left the XP 1.1 template, not sure why here). A minor change in the UI in 2.0 is they added a header/footer with the "Create Project | Cancel | Back to project list" links, which is handy.

Project Admin

There are some minor shifts in project admin that are both cosmetic and functional. The Project Settings screen now has the SVN repository info separated out and adds a new field, Numeric Precision. This lets you deal with precision in your numbers on cards, stories, etc. By default it's set to 2 but you can increase it if you need it. I don't recall seeing this as a high priority feature but whatever. It's there now.

Like I said, the Project Repository settings (for integration into source control) have been pulled out into their own screen. This is for good reason. The first thing you do is pick the version control system you're using from a drop down. Only Subversion is supported in this release, but you can see where it's going (perhaps with support from 3rd party providers). Somewhere in my browsing today I saw TW announce a future release to incorporate ClearCase or some other SCM, so others won't be far behind.

They've introduced the notion of "Project variables". Think of NAnt properties or something that can be used in cards or views. For example you can create a project variable called "Current Release" and give it a value of "1" or "3.2 GA" or whatever (with various data types including numeric, text, date, etc.). Wherever you use this it'll just replace that value. Then you can change "3.2 GA" to "4.0 RC1" or something en masse, and anywhere it's being used it gets swapped out.

The new advanced admin feature is recalculating project aggregates. We'll talk about aggregates later, but if you find the numbers might be out of whack, go to Advanced project admin to recalculate them.

In 1.1, any view could be saved. From the "Saved views & tabs" option you could take a view and make a tab out of it. Now the feature is called "Favorites & tabs". Favorites are saved views that have not been added as tabs, and there are two tables here to show you tabs vs. views. Tomato, tomahto.

Card trees are available to edit or delete so let's talk about this in-depth.

Card trees

Card trees let you define a hierarchy that works for your system. You can check out a video here that explains it well. For example, tasks can roll up under stories that roll up into features that roll up into epics. This is the ultimate in flexibility and lets you move things around as sets. There's a new Card explorer that lets you drag and drop cards from the right hand flyout so you can quickly (and visually) move your cards around in the view.

This is great and how I work. I usually break a system down by epics which then might flow into features which are made up of stories (I personally don't like getting down to the task level but YMMV). Now I can lay my project out visually and see where everything fits in and this lets me do things like track stories against a feature or bugs against a story. The notion of Done, Done, Done gets much clearer with Mingle 2.0.

Aggregates

In addition to Card trees there are attributes in card trees called Aggregates that will allow you to roll up information into swimlanes. For example I can sum up all the story points in a feature or functional area and show that value in the Grid display. At a glance I see how many points I can deliver for that group. This is great for, say, release planning where you create a plan showing the sum of all points for each story in the sprint. Knowing your velocity of, say, 12, you know you can't drag more than 12 points into a sprint. Nice.

The UI is improved and starts to border on a video-game-like approach to Agile planning. If you drag an aggregate root, all its children will follow. This makes it easy to position things on the screen and move them around, and is pretty fun to watch. Also I would hope a future feature will be a PNG or JPEG export of the tree (much like the image export from Visual Studio's class designer) as you might need an image for documentation or discussion where you don't have online access to Mingle.

Configuration

There's a new option on the main screen, configure email settings. This allows you to change where your SMTP server is and who the email comes from, and includes a test link. A huge improvement over having to hunt for the config file and edit it by hand. I know screens like this start bloating out the product, which is very lean, but I feel it's better served to have configuration this way rather than 100 text files buried in the file system somewhere. And the test feature is nice as it helps you as you go.

Templates

I didn't get a chance to look at all the templates but the updates to them include some new transitions. Transitions are one of the lesser-known features of Mingle and let you set up a pseudo-workflow for Cards. In the new Scrum 2.0 template for example there are transitions that let you do a single click "Complete Development" or "Soft Delete". Transitions have filters and constraints (for example you can only invoke a transition if the card type is a Story and was created Today) and just make it easier to use Mingle. Check out the ones in the new templates and create your own. The new Scrum template includes a new dashboard (the Overview page) with story metrics (project status by points) and new graphs like a burndown chart and % of completed tasks per story. These use the new aggregate functions and are quite useful to get a quick overview of the project.

Overall

Overall I'm happy with the upgrade. Even though it was a little painful and didn't work initially, in the end it's for the better. The hierarchical cards feature is great and there are lots of nice little improvements everywhere (for example the consistent command bar on forms) that make this product even more useful for Agile planning. They spoke of better documentation and I'm looking to integrate Mingle with LDAP. I see there's a new LDAP configuration page but like most Mingle documentation, it's just a rehash of what you might see on the screen or lines in a config file with no real explanation of what is valid and what isn't.

I guess it's part trial-and-error, part knowledge, but I had hoped for more detailed documentation. Perhaps in the future they'll provide something like a wiki interface to the documentation and allow contributions from users to improve the readability of topics and add scenarios. To me, that's one of the best things about projects like MySQL and PHP (and to a lesser extent the MSDN documentation). Hopefully TW will follow in these footsteps.

With the short release cycles ThoughtWorks employs I don't have to wait a year to see new improvements to an overall good product. Well done guys!

For a list of the top 10 new features in Mingle 2.0, check out this page by ThoughtWorks. Happy upgrading!

-

Being Agile is our favorite thing

When ThoughtWorkers watch too many Julie Andrews movies:

-

Unit Test Projects or Not?

It's funny how the world works. A butterfly flaps its wings in Brazil, and a tornado forms in Texas 1,000 miles away. Phil Haack posted a poll about unit test project structure and asked the very question we've come to on our current project. Should unit tests belong in their own project or as part of the system? I was going to post a comment on Phil's entry, but figured I would drag my explanation and description out to a full post here.

In the past I've *always* created a separate test project. Tree Surgeon by default does this (and now I'm looking at adding an option to let you decide at code generation time) and most projects I know of work this way. You create your MyApp.Core project (containing your domain logic) and a MyApp.Test project with all the unit tests. More recently I've been creating a MyApp.Specs project but that's just a different evolution.

In the next project we're working on, we're looking to shift this approach and include unit tests in our MyApp.Core project. Here are some reasons and thinking behind it.

With unit tests (or specifications) in a separate project you end up mimicking the structure of your domain and create a namespace hierarchy. By default, a .NET project has a default namespace for your application, and the name of any folder in the project is appended to it. So if your assembly's default namespace is MyApp.Core (the namespace defaults to the name of the assembly) and you create a folder called Customer, all classes in that folder will be in the MyApp.Core.Customer namespace. In your test project you have a similar thing and usually you'll have the default namespace be MyApp.Test (the name of the assembly).

Since there is only one test assembly (assuming you don't break them up, that is) you don't necessarily want to create a folder called CustomerSpecs (or CustomerTests or even Customer), so you might create a folder called Domain. After all, you're unit testing the domain, but then there'll be the UI, Presenters, Factories, Data Access, etc. Do you create a separate test assembly for all of these? Probably not.

Let's see, we have an assembly (MyApp.Core), a class (Customer) in a namespace (MyApp.Core.Customer). Now you've got a test assembly (MyApp.Test), a set of Customer tests (CustomerSpecs.cs or CustomerTests.cs or whatever) in a domain namespace (MyApp.Test.Domain). This is getting a little complicated, but no big deal from a resolution perspective. You'll just bring in the namespaces you need and bang (or BAM!).

However two things seem to arise out of this setup.

First (which might kick off its own huge debate) you need access to your Customer class and potentially other classes, enumerations, etc. that it uses, which are locked away in MyApp.Core.dll. That means you have two options. Either you make the Customer class public or you use the InternalsVisibleTo attribute to let MyApp.Test.dll see the stuff inside of MyApp.Core.dll. There's another option here: slam all the files into one assembly and don't worry about it from a testing perspective. That might alleviate the problem but that's a different blog entry.
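For reference, the InternalsVisibleTo route looks roughly like the sketch below. The assembly and namespace names follow the MyApp.Core / MyApp.Test layout used in this post; the `Rename` method is a made-up placeholder, and a strong-named assembly would additionally need the test assembly's public key in the attribute string.

```csharp
// Lives in the MyApp.Core project (for example in AssemblyInfo.cs).
using System.Runtime.CompilerServices;

// Grants the MyApp.Test assembly access to internal types and members
// of this assembly. Assumes neither assembly is strong-named.
[assembly: InternalsVisibleTo("MyApp.Test")]

namespace MyApp.Core.Customer
{
    // Stays internal: invisible to other assemblies, visible to MyApp.Test.
    internal class Customer
    {
        private string _name;

        internal string Name
        {
            get { return _name; }
        }

        // Hypothetical behavior, only here to give the test something to call.
        internal void Rename(string name)
        {
            _name = name;
        }
    }
}
```

With that in place, a fixture in MyApp.Test can create and exercise `Customer` without the class ever being made public.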

The second thing that comes out of this is a fairly deep and wide namespace hierarchy in your test assembly. That might not be a big deal unto itself, but could be an inconvenience. In addition to the deep impact this might cause, let's say you have 30 domain classes and the subsequent 30 or so fixtures (or more, or less, doesn't really matter). And these are scattered around in various folders. Each time you touch the fixture to write a test or look at the domain object and create some test, you're playing hunt and peck inside your test assembly to find the right spot to match the folder structure. Of course if you toss everything into a single folder you won't care, but I think that's a different type of maintenance you don't want to get into. Then, let's say you restructure your domain (which can happen a few times throughout a project) and now some of the classes relating to Customer move into some other place in the hierarchy in your domain. That's easy enough with ReSharper and a move like this is pretty low-maintenance. Except now your test folder structure doesn't match your logical domain structure (or folder structure for that matter).

Okay, that's the side of the conversation about issues that we've come to on using a separate test project. Now the positives on including your tests with the code you're testing.

- I don't need a separate Test project. There's a bit of debate in the blog-o-sphere around number of projects and what's right and what's too much so keeping things lean is good.

- I don't have to go hunting for a fixture in some hierarchy that may (or may not) be valid or the same as my domain. With ReSharper it's easy to find files/classes, but using a separate test project I have double maintenance to deal with. If I want to keep them in sync, it's more work.

- I don't have to expose my domain to my tests. As everything is in one project I can use OO principles and maintain encapsulation. When you create a new class, there's a damn good reason it's marked as internal and not public. If my entire domain is internal I can choose to expose what I need outside of the system/assembly as needed rather than "make it public so the test assembly can see it". True, there are tricks to expose MyApp.Core.dll to MyApp.Test.dll but they're hacks IMHO.

- I can leverage my unit testing framework in my runtime environment. This is probably the biggest advantage I see when I do something like create a Test folder under my Customer folder in my domain project. I can choose to ship my unit testing framework tools (MbUnit.Framework.dll or MbUnit.Gui.exe) with my system. This would be useful say in a QA or User Acceptance environment where I can run my tests against the real environment. This might not be something you want to do all the time, but I think it's good to have the option.

Here are some arguments I've heard against including your test code with production code that I'll address.

"If my tests are in my domain, I have a reliance on my unit test framework assemblies" - Yeah? So. If I wrap log4net I have a dependency on deploying log4net.dll as well. I'm not sure I see a disadvantage to this. There have been people saying they were "bitten" by this, but I'm not sure what the bite is like or what the impact of that bite might be. Optionally, when we deploy we can decide to deploy our test code and its dependencies as needed. Just because it's there doesn't mean it needs to go out the door. If we use our NAnt build scripts, we can leave out the *Test* code and omit the MbUnit.Framework.dll files. Clean and lean.

"I want to see my tests and only my tests in one project and what I'm testing in another" - Again, not sure the advantage to this. If anything, keeping them together reduces the amount of "jumping" around you do in your IDE from this project to a test project, then back again. I'm not convinced or sure why you "want" to see tests in one project.

"Production code is production code and not test code!" - Not sure what this means, since I consider *all* code production code, tests, classes, etc. The ability to unit test my "production code" in a "production environment" rather than some simulation is a bonus for me.

All in all there's no clear-cut answer here. What works for you works, and I think the general masses keep tests in a separate project. I want to buck the norm here and for the next project we're going to try it out differently. I think there are advantages to it (and potentially disadvantages, like having to clutter up my .Core assembly with a bunch of ObjectMother classes for example) but we'll see how it goes.

I don't like not trying something because "that's the way we always did it". Doesn't make it right. So give it a shot if you want, try it out, share your experience, or leave a comment that I'm a mad coder and putting my devs through unnecessary torture.

Like Phil said, this is not "a better way" or "the right" or "wrong" way to do things. I'm going with a Test folder under my aggregate classes in my domain and we'll see how that goes. YMMV.

-

ALT.NET Seattle Participants

Registration for the ALT.NET event in Seattle (April 18-20) is closed so if you register you'll get put onto the waiting list (there are only a few people on it right now). However the participant list is simply amazing. It's like the who's who of the Agile software development world.

Phil Haack, Tom Opgenorth, Craig Beck, Kevin Hegg, Miguel Angel Saez, Dustin Campbell, Justin-Josef Angel, David Pokluda, Carlin Pohl, Matthew Podwysocki, Adam Dymitruk, Wendy Friedlander, Oliver, Chris Bilson, Jeff Certain, Dave Foley, Joe Pruitt, Jeff Tucker, Jeffrey Palermo, Anil Verma, Greg Banister, Chris Salahub, Jesse Johnston, Robert Ream, Jim Hugunin, Chantal Laplante, Owen Rogers, Mike Stockdale, Cameron Frederick, Dan Miser, Greg Sangha, Joey Beninghove, Jean-Paul S. Boodhoo, Ben Scheirman, D'Arcy Lussier, Chris Patterson, Ronald S Woan, Rob Reynolds, Adam Tybor, Eric Holton, Scott Hanselman, Gabriel Schenker, Wade Hatler, Arvind Palaniswamy, Weston Binford, Jonathan de Halleux, Joseph Hill, Matt Hinze, Dave Laribee, Nick Parker, Ray Houston, Steven "Doc" List, Jason Grundy, Brian Donahue, John Quach, Alex Hung, James Thigpen, Chris Sutton, Ian Cooper, Rajbeer Dhatt, John Teague, Eli Lopian, Eric Ness, Scott Allen, Aaron Jensen, Rustan Leino, Bil Simser, Rob Zelt, Jeff Brown, Phil Dennis, Tom Dean, Tim Barcz, Sean Solbak, David Pehrson, James Franco, Bryce Budd, Scott Guthrie, Jay Flowers, david p buchanan, Howard Dierking, David Airth, Jonathan Wanagel, Matt Pisut, Julie Poole, Jarod Ferguson, Jacob Lewallen, Rhys Campbell, Joe Ocampo, Brad Abrams, Russell Ball, Michael Bradley, Bertrand Le Roy, Simon Guest, Alvin Lee, khalil El haitami, Roy Osherove, Scott Koon, Charlie Poole, Pete McKinstry, Sergio Pereira, Brad Wilson, Piriya Thongtanunam, Neil Blake, Brian Henderson, Martin Salias, Grant Carpenter, Colin Jack, James Shore, Kirk Jackson, Rod Paddock, Alan Buck, John Nuechterlein, Rajiv Das, Jeremy D. 
Miller, Chris Ortman, Robert Smith, Kelly Leahy, Chris Sells, Dru Sellers, Robin Clowers, Terry Hughes, Ashwin ParthasarathyOsidosi, Drew Miller, Dennis Olano, Anand Raju Narayan, Glenn Block, Brandon Lang, Pete Coupland, Trevor Redfern, Ward Cunningham, Troy Gould, Don Demsak, Neil Bourgeois, John Lam, Donald Belcham, Phil MCmillan, Udi Dahan, Martin Fowler, James Kovacs, Ayende Rahien, Danieljakob Homan, Raymond Lewallen, Jeff Olson, Justice Gray, Douglas Schroeder, Justin Bozonier, Luke Foust, Michael Henderson, Shawn Wildermuth, Dave Woods, Chad Myers, Shane Bauer, Michael Nelson, Kyle Baley, Buchanan Dunn, Scott C Reynolds, Greg Young.

I wish I had a tag cloud for the names or something as so many people have so much influence on ALT.NET practices today. It's going to be an awesome get together!

-

Automated UI Testing with Project White

A co-worker turned me on to Project White, an automated UI testing framework by ThoughtWorks. This is along the same lines as NUnitForms and other automated systems. It's basically Selenium for WinForms (which rocks in its own right) so I thought I would dig more into White (it has support for WPF as well, but I haven't tried that out yet). It was good timing as we've been talking and coming up with strategies for testing and UI testing is a big problem (and it is everywhere else based on people I've talked to).

The White library is nice and simple. All you really need to do is add in the Core.dll from White and your unit test framework and write some tests. I tested it with MbUnit but any framework seems to work. Ben Hall posted a blog entry about White along with some sample code. This, combined with the library, got me started.

As Ben did, I created a simple application with a single form and started to write some tests. I couldn't use Ben's complete sample as it was written for VS2008 and I only had VS2005 for my testing. No problem. You can use White with VS2005, but you'll need the 3.0 framework installed.

I came across the initial problem with testing though. The first test that failed left the window up on the screen. This was an issue. I also wrote the same test Ben did, looking for a non-existent form, which appropriately threw a UIActionException. The test passed as it threw the exception I was looking for, but again the form was up on the screen. The Application.Kill() method wasn't being called if the test failed or an exception was thrown. Ben's method was to put a call to Application.Kill in the [TearDown] method on the test fixture. This is great but I'm not a big fan of [SetUp] and [TearDown] methods. Another option was to surround each test with a try/catch/finally and in the finally code call out to the Application.Kill() method. This was ugly as I would have to do this on every test.

Following Ben's example I created a WhiteWrapper class which would handle the White library features for me. I made it implement IDisposable so I could do something like this:

using (WhiteWrapper wrapper = new WhiteWrapper(_path))
{
    ...
}

I also added a generic method to fetch a control from the main window so I could grab a control, execute a method on it (like .Click()) and check the result on another control (like a Label). Note that these are not WinForms controls but rather White's wrappers around them, based on a class called UIItem. This provides general features for any control, like a .Click method, a .Text property, or the items in a listbox.

Here's my WhiteWrapper code:

class WhiteWrapper : IDisposable
{
    private readonly Application _host = null;
    private readonly Window _mainWindow = null;

    public WhiteWrapper(string path)
    {
        _host = Application.Launch(path);
    }

    public WhiteWrapper(string path, string mainWindowTitle) : this(path)
    {
        _mainWindow = GetWindow(mainWindowTitle);
    }

    public void Dispose()
    {
        if (_host != null)
            _host.Kill();
    }

    public Window GetWindow(string title)
    {
        return _host.GetWindow(title, InitializeOption.NoCache);
    }

    public TControl GetControl<TControl>(string controlName) where TControl : UIItem
    {
        return _mainWindow.Get<TControl>(controlName);
    }
}

And here are the refactored tests to use the wrapper (implemented via a using statement which makes using the library fairly clean in my test code):

public class Form1Test
{
    private readonly string _path = Path.Combine(Directory.GetCurrentDirectory(), "WhiteLibSpike.WinForm.exe");

    [Test]
    public void ShouldDisplayMainForm()
    {
        using (WhiteWrapper wrapper = new WhiteWrapper(_path))
        {
            Window win = wrapper.GetWindow("Form1");
            Assert.IsNotNull(win);
            Assert.IsTrue(win.DisplayState == DisplayState.Restored);
        }
    }

    [Test]
    public void ShouldDisplayCorrectTitleForMainForm()
    {
        using (WhiteWrapper wrapper = new WhiteWrapper(_path))
        {
            Window win = wrapper.GetWindow("Form1");
            Assert.AreEqual("Form1", win.Title);
        }
    }

    [Test]
    [ExpectedException(typeof(UIActionException))]
    public void ShouldThrowExceptionIfInvalidFormCalled()
    {
        using (WhiteWrapper wrapper = new WhiteWrapper(_path))
        {
            wrapper.GetWindow("Form99");
        }
    }

    [Test]
    public void ShouldUpdateLabelWhenButtonIsClicked()
    {
        using (WhiteWrapper wrapper = new WhiteWrapper(_path, "Form1"))
        {
            Label label = wrapper.GetControl<Label>("label1");
            Button button = wrapper.GetControl<Button>("button1");
            button.Click();
            Assert.AreEqual("Hello World", label.Text);
        }
    }

    [Test]
    public void ShouldContainListOfItemsInDropDownOnLoadOfForm()
    {
        using (WhiteWrapper wrapper = new WhiteWrapper(_path, "Form1"))
        {
            ListBox listbox = wrapper.GetControl<ListBox>("listBox1");
            Assert.AreEqual(3, listbox.Items.Count);
            Assert.AreEqual("Red", listbox.Items[0].Text);
            Assert.AreEqual("Versus", listbox.Items[1].Text);
            Assert.AreEqual("Blue", listbox.Items[2].Text);
        }
    }
}

The advantage I found here was handling exceptions and unknown states. For example, I ran the ShouldUpdateLabelWhenButtonIsClicked test before I even had the controls on the form. The test failed but it didn't hang or crash the system, and the application window didn't stay up on the screen. That's what IDisposable gave me: a nice way to always clean up without having to remember to create a [TearDown] method.
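To see why the using block is enough, here's a minimal sketch that doesn't touch White at all. FakeHost and DisposeDemo are hypothetical names I made up for illustration; FakeHost just plays the role of WhiteWrapper and records what happens instead of launching and killing a real application.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for WhiteWrapper: it records events instead of
// launching a real application.
public sealed class FakeHost : IDisposable
{
    private readonly List<string> _log;

    public FakeHost(List<string> log)
    {
        _log = log;
        _log.Add("launched");
    }

    // Plays the role of WhiteWrapper.Dispose(), which calls Kill() on the host.
    public void Dispose()
    {
        _log.Add("killed");
    }
}

public static class DisposeDemo
{
    // Runs a "test" body that throws, and returns the recorded events in order.
    public static List<string> RunFailingTest()
    {
        List<string> log = new List<string>();
        try
        {
            using (FakeHost host = new FakeHost(log))
            {
                // Simulates a failed assertion or a missing control.
                throw new InvalidOperationException("control not found");
            }
        }
        catch (InvalidOperationException)
        {
            // A test runner would report the failure at this point.
            log.Add("failure reported");
        }
        return log;
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(", ", RunFailingTest().ToArray()));
    }
}
```

Running this prints "launched, killed, failure reported". Dispose runs as the exception leaves the using block, before the failure gets reported, which is the same ordering that keeps a failed test from leaving the form on the screen.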

One of the philosophical questions we have to ask here is when this kind of testing is appropriate. For example, if I have good presenters I can test these kinds of things with mocked out views and presenter/model tests. So am I duplicating effort here by testing the UI directly? Should I get my QA people to write these kinds of tests? There's a long discussion to have in your organization around this so it's not just a "tool problem". You need to dig deep into what you're testing and how. At some point, you begin to divorce yourself from behaviour driven development and you end up testing UI edge cases and integration from a UI perspective. If your UI doesn't line up with your domain, how do you reconcile this? There are probably more questions than answers for this type of thing and software design is more art than science. The answer "it depends" goes a long way, but don't try to solve your business or design problems with a tool. There is no silver bullet here, just a few goodies to help you along the way. It's you who needs to decide what's appropriate for the situation and how much time, money, and resources you're going to invest in something.

The library works pretty well and I'm happy with the test code so far. We'll have to see now how it deals with far more complex UIs (we have things like crazy 40-column grids with all kinds of functionality). I'll be back later with how that goes. In the meantime, check out Project White on CodePlex to help you with your automated UI testing.

-

Registration for ALT.NET Open Spaces Seattle is alive!

Dave Laribee and team have done an excellent job of getting the next Seattle ALT.NET open space conference up and going. I'm pleased to say registration is now open (and will probably fill up by the time I finish writing this blog entry). So get going and register now!

We've hopefully made things easier by incorporating OpenID, so all you need is an OpenID identity (I use myopenid.com and it's quite good, but any old one will do, including Yahoo) with your name and email set up (other information is optional). Note: Please do not use openid.org as it doesn't seem to work. We're not sure why, or even whether the openid.org site is real or a phishing site, so stick with myopenid.com or another provider.

In addition to the OpenID integration, we've negotiated a discount for the hotel nearby so that'll be available to you. As always, the event is free but like I said, it's limited to 100 participants. First come, first served.

Get going and see you in Seattle!