Building my new blog with Orchard – part 2: importing old contents

In the previous post, I installed Orchard onto my hosted IIS7 instance and created the “about” page.

This time, I’m going to show how I imported existing contents into Orchard.

For my new blog, I didn’t want to start with a completely empty site and a lame “first post” entry. I already had quite a few posts here and on Facebook that fit the spirit I wanted for the new blog, so I decided to use those to seed it.

The science and opinion posts on Tales of the Evil Empire always seemed a little out of place (which some of my readers told me quite plainly), and the Facebook posts were stuck behind Facebook’s silo walls even though they were public. You still need a Facebook account to read those posts, and search engines can’t go there as far as I know.

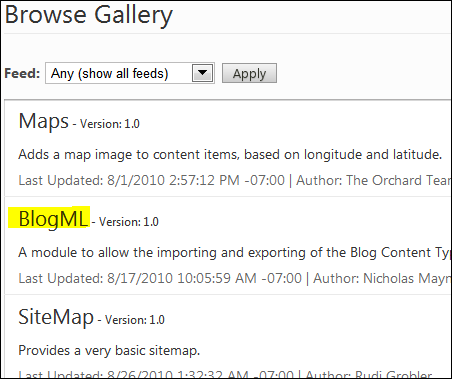

There is a BlogML import feature built by Nick Mayne that’s available from the Module gallery (right from the application, as shown in the screenshot above), and I absolutely recommend you use it if you just need to import from an existing blog.

But well, I didn’t use Nick’s module. I wanted to play with commands, and I wanted to see what it would take to do a content import in the worst possible conditions or something close to that. Hopefully it can serve as a sample for other batch imports from all kinds of crazy sources.

Importing from Facebook was the most challenging because of the need to log in before fetching the content. I chose not to mess with APIs at all and to do screen scraping instead, which is not the cleanest approach but has the advantage of always being an option.

I also wanted to import post by post rather than in a big batch, to have really fine-grained control over what I bring over to the new place: I’m not moving, I’m splitting.

Before we dive into the code, I had to make a change on my local development machine. The production setup remains exactly what I described last time, but for my local dev efforts, the basic 0.5 release setup as downloaded from CodePlex is a little limiting.

To be clear, there is nothing you can’t do in Orchard with Notepad and IIS/WebMatrix, but if you have Visual Studio 2010, you’re going to feel a lot more comfortable.

The changes I applied were to clone the CodePlex repository locally, run the site, go through the setup process and then copy the Orchard.sdf file from my production site onto the local copy, in App_Data/Sites/Default. This way, I got a copy of the production site, data included, but could run, develop and debug from Visual Studio.

The first thing that I needed to do was to get my own private module. To do this, I like to use scaffolding from the command line. I opened a Windows command line, pointed it at the root of the local web site (src/Orchard.Web from the root of my enlistment) and typed “bin\orchard” to launch the Orchard command line.

After enabling the scaffolding module (“feature enable Scaffolding”), I was able to use it to create the module: “scaffolding create module BlogPost.Import.Commands /IncludeInSolution:true”. The IncludeInSolution flag added the new module project to my local Orchard solution, which is really nice.

That done, I switched to Visual Studio, which prompted me to reload the solution because of the new project. After a few seconds, I was able to edit the manifest:

    name: BlogPost.Import.Commands
    antiforgery: enabled
    author: Bertrand Le Roy
    website: http://vulu.net
    version: 0.5.0
    orchardversion: 0.5.0
    description: Import external blog posts
    features:
        BlogPost.Import.Commands:
            Description: Import commands
            Category: Content Publishing
            Dependencies: Orchard.Blogs

The only thing I wanted in my module was the set of new commands, which I implemented in a new BlogPostImportCommands.cs file at the root of the new module.

As I said, I’m extracting the data through screen scraping. As I’m not insane, I’m using a library for that. I chose the HTML Agility Pack, which is a .NET HTML parser that comes with XPath. Point it at a remote document and query all you like.
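To give an idea of what querying with the HTML Agility Pack looks like, here is a minimal sketch that parses a string of markup and returns the inner HTML of the first node matching an XPath expression. The `NoteScraper` class, its method name and the sample markup in the usage note are made up for illustration and are not part of the import module; `HtmlDocument.LoadHtml` and `SelectSingleNode` are the library calls.

```csharp
using HtmlAgilityPack;

// Hypothetical helper, for illustration only.
public static class NoteScraper
{
    // Returns the inner HTML of the first node matching the XPath,
    // or null when nothing matches.
    public static string ExtractInnerHtml(string html, string xpath)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        var node = doc.DocumentNode.SelectSingleNode(xpath);
        return node == null ? null : node.InnerHtml;
    }
}
```

For example, calling `NoteScraper.ExtractInnerHtml(markup, "//div[@id='body']/div/div[2]")` on `<div id="body"><div><div>Header</div><div>Hello <b>world</b></div></div></div>` should select the second inner `div`. The null check matters because `SelectSingleNode` returns null when the markup does not have the shape you expected, which is the usual failure mode of screen scraping.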

In the class that will contain the commands, I needed a few dependencies injected:

    private readonly IContentManager _contentManager;
    private readonly IMembershipService _membershipService;
    private readonly IBlogService _blogService;
    private readonly ITagService _tagService;
    private readonly ICommentService _commentService;

    protected virtual ISite CurrentSite { get; [UsedImplicitly] private set; }

The content manager, blog, tags and comment services will enable us to query and add to the site’s content. The membership service will enable us to get hold of user accounts, and the ISite will give us global settings such as the name of the super user.

Our import commands are going to need a URL and in the case of the Facebook import a login and a password.

    [OrchardSwitch]
    public string Url { get; set; }

    [OrchardSwitch]
    public string Owner { get; set; }

    [OrchardSwitch]
    public string Login { get; set; }

    [OrchardSwitch]
    public string Password { get; set; }

We also have an owner switch but that is optional as the command will use the super-user if it is not specified:

    var admin = _membershipService.GetUser(
        String.IsNullOrEmpty(Owner) ? CurrentSite.SuperUser : Owner);

The commands themselves must be marked with attributes that identify them as commands, provide a command name and help text, and optionally specify what switches they understand:

    [CommandName("blogpost import facebook")]
    [CommandHelp("blogpost import facebook /Url:<url> /Login:<email> /Password:<password>\r\n\t" +
        "Imports a remote FaceBook note, including comments")]
    [OrchardSwitches("Url,Login,Password")]
    public string ImportFaceBook() {

When importing from Facebook, the first thing we want to do is authenticate ourselves and store the results of that operation in a cookie context that we can then reuse for subsequent requests that are going to fetch actual note contents:

    var html = new HtmlDocument();
    var cookieContainer = new CookieContainer();
    var request = (HttpWebRequest)WebRequest
        .Create("https://m.facebook.com/login.php");
    request.Method = "POST";
    request.CookieContainer = cookieContainer;
    request.ContentType = "text/html";
    var postData = new UTF8Encoding()
        .GetBytes("email=" + Login + "&pass=" + Password);
    request.ContentLength = postData.Length;
    request.GetRequestStream()
        .Write(postData, 0, postData.Length);
    request.GetResponse();
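One caveat with concatenating the login and password straight into the request body: a password containing `&` or `=` would corrupt the form data. A more defensive variant, sketched below, escapes each name and value with `Uri.EscapeDataString` before joining them. The `FormEncoding` helper is hypothetical and not part of the downloadable code. Servers also commonly expect such a body to be sent with the `application/x-www-form-urlencoded` content type, so that may be worth trying if a login request like the one above is rejected.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, for illustration only.
public static class FormEncoding
{
    // Builds a URL-encoded form body such as "email=...&pass=...",
    // escaping reserved characters in both names and values.
    public static string Build(IEnumerable<KeyValuePair<string, string>> fields)
    {
        return string.Join("&", fields.Select(f =>
            Uri.EscapeDataString(f.Key) + "=" + Uri.EscapeDataString(f.Value)));
    }
}
```

The resulting string can be converted to bytes with `new UTF8Encoding().GetBytes(...)` and written to the request stream exactly as in the snippet above.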

We can now bring the actual content:

    request = (HttpWebRequest)WebRequest.Create(Url);
    request.CookieContainer = cookieContainer;
    html.Load(new StreamReader(
        request.GetResponse().GetResponseStream()));

Note that we are using the mobile version of the site here, simply because its markup is usually much simpler and thus easier to query.

We can now create an Orchard blog post:

    var post = _contentManager.New("BlogPost");
    post.As<ICommonPart>().Owner = admin;
    post.As<ICommonPart>().Container = blog;

And then we can start setting properties from what we find in the HTML DOM:

    var postText = html.DocumentNode
        .SelectSingleNode(@"//div[@id=""body""]/div/div[2]")
        .InnerHtml;
    post.As<BodyPart>().Text = postText;

Tags and comments are a little more challenging as they are lists. On Facebook, we can select all comment nodes:

    var commentNodes = html.DocumentNode
        .SelectNodes(@"//div[starts-with(@id,""comments"")]/table/tr/td");

Then we can treat each comment node as a mini-DOM and create a comment for each:

    var commentContext = new CreateCommentContext {
        CommentedOn = post.Id
    };
    var commentText = commentNode
        .SelectSingleNode(@"div[2]")
        .InnerText;
    commentContext.CommentText = commentText;
    [...]
    _contentManager.Create(commentPart);

This is a little more complicated than it should be, and eventually the need to go through a comment manager and an intermediary descriptor structure will go away.
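Leaving the Orchard side out, the scraping half of that loop can be sketched on its own. The example below reuses the two XPath expressions from above to collect the text of each comment into a list. The `CommentScraper` class and the markup in the usage note are assumptions made for illustration; the real Facebook markup may well differ, and this is not the code from the download.

```csharp
using System.Collections.Generic;
using HtmlAgilityPack;

// Hypothetical helper, for illustration only.
public static class CommentScraper
{
    public static List<string> ExtractCommentTexts(string html)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        var texts = new List<string>();

        // SelectNodes returns null rather than an empty list when nothing matches.
        var commentNodes = doc.DocumentNode
            .SelectNodes("//div[starts-with(@id,'comments')]/table/tr/td");
        if (commentNodes == null) return texts;

        foreach (var commentNode in commentNodes)
        {
            // A relative XPath treats each comment cell as its own mini-DOM.
            var textNode = commentNode.SelectSingleNode("div[2]");
            if (textNode != null) texts.Add(textNode.InnerText.Trim());
        }
        return texts;
    }
}
```

Given markup such as `<div id="comments_1"><table><tr><td><div>Alice</div><div>Nice post</div></td></tr></table></div>`, the method should return a list containing the text of the second `div` of each cell. In the import command, each of those strings would then be fed into a comment context as shown above.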

Once the commands were done and working on my dev box (note that I had to enable the module by doing “feature enable BlogPost.Import.Commands” from the command line), I brought the database down from the production server and performed imports from the local command line (e.g. “blogpost import weblogs.asp.net /Url:http://weblogs.asp.net/bleroy/archive/2010/06/01/when-failure-is-a-feature.aspx”). I could have used the web version of the command-line directly on the production server but I didn’t because my confidence in the process was not 100% and I expected to encounter little quirks that would require fiddling with the code and the database on the fly. I was right.

Comments presented another challenge: in Orchard they are plain text, but what I had was full of links and HTML escape sequences. I decided to lose links on old comments but the escape sequences were really affecting readability, so I built a separate command to sanitize comment text throughout the Orchard content database.
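The text clean-up itself can be sketched independently of Orchard. The snippet below is an assumption about what such sanitizing could look like, not the command from the download: it strips tags with a simple regular expression (which also drops links, as decided above) and then decodes escape sequences with `WebUtility.HtmlDecode`. The `CommentSanitizer` name is hypothetical.

```csharp
using System.Net;
using System.Text.RegularExpressions;

// Hypothetical helper, for illustration only.
public static class CommentSanitizer
{
    // Deliberately naive tag matcher; good enough for simple comment markup.
    private static readonly Regex Tags = new Regex("<[^>]+>", RegexOptions.Compiled);

    public static string ToPlainText(string commentHtml)
    {
        if (string.IsNullOrEmpty(commentHtml)) return string.Empty;

        // Strip tags first, so that a decoded "&lt;" is not mistaken for markup.
        var withoutTags = Tags.Replace(commentHtml, string.Empty);
        return WebUtility.HtmlDecode(withoutTags).Trim();
    }
}
```

The order of the two steps is the design choice worth noting: decoding first would turn escaped angle brackets in the comment text into something the tag matcher might then delete.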

So there you have it, this is the current state of my new site. I did some additional work after all that which is not exactly technical: I am currently in the process of replacing all photos and illustrations with black and white engravings and drawings. When I’m done with design work, I want the site to be black on white, resembling an old book as much as possible. I’m using lots of old illustrations such as those you can find on http://www.oldbookillustrations.com/ as well as in nineteenth century dictionaries and science books.

The code for the import commands can be downloaded from the following link:

http://weblogs.asp.net/blogs/bleroy/Samples/BlogPostImportCommands.zip

Part 1 of this series can be found here:

http://weblogs.asp.net/bleroy/archive/2010/08/27/building-my-new-blog-with-orchard-part-1.aspx