Contents tagged with SQL Server

-

Importing a large file into Sql Server database employing some statistics

I imported a 2.5 GB file into our SQL Server today. What made this import challenging was that the SQL Server Import and Export Wizard failed to read the data. The file is tab-delimited with CR/LF as the record separator. Lines are truncated when the remaining fields are empty, resulting in a variable number of columns per record. Also, a memo field contains CR/LF, so CR/LF cannot be relied on to separate records.

I had to write my own import utility. The utility does the import in two phases: analysis and import. In the first phase, the utility reads the entire file to determine the number of columns, the data type of each column, its width and whether it allows nulls. To address the CR/LF problem, the utility uses the following algorithm:

Read a line to determine the number of columns in the line.

Peek at the next line and determine the number of columns in it. If the sum of the two numbers is less than the expected column count, the second line is a candidate for merging into the first line. Repeat this step.
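The two steps above can be sketched as follows. This is a minimal Python sketch rather than the original utility, whose source is not shown here. The function name `merge_broken_lines` is hypothetical, and the sketch assumes the physical lines have already been split on CR/LF with the terminators removed.

```python
def merge_broken_lines(lines, expected_columns):
    """Greedily merge physical lines into candidate logical records.

    A following line is merged while the sum of the two column counts
    stays below expected_columns, as described in the text.
    """
    records = []
    i = 0
    while i < len(lines):
        current = lines[i]
        i += 1
        # Peek at the next physical line and decide whether to merge it.
        while i < len(lines):
            cols_current = current.count("\t") + 1
            cols_next = lines[i].count("\t") + 1
            if cols_current + cols_next < expected_columns:
                # The CR/LF belonged to the memo field, so put it back.
                current = current + "\r\n" + lines[i]
                i += 1
            else:
                break
        records.append(current.split("\t"))
    return records


lines = [
    "1\tAlice\tfirst half of memo",
    "second half\t2012-01-01",
    "2\tBob\tshort memo\t2012-01-02\tx\ty\tz\tw",
]
records = merge_broken_lines(lines, expected_columns=8)
```

Because truncated records are also short, this rule only produces candidates; a short record followed by another short record can be merged by mistake, which is why the later phases do not trust the merge blindly.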

When determining the column type, I first initialize each column with a narrow type and then widen the column when necessary. I widen the columns in the following order: bool -> integral -> floating -> varchar. Because I am not completely sure that I merged the lines correctly, I relied on probability rather than the first occurrence to widen a field. This allows me to run the analysis phase in only one pass. The drawback is that I do not have 100% confidence in the extracted schema; data that does not fit the schema has to be rejected in the import phase. The analysis phase took about 10 minutes on a fairly slow (by today's standards) dual-core Pentium machine.
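One way to read "probability rather than first occurrence" is to pick the narrowest type that fits nearly all values in a column, rather than widening on the first value that does not fit. The sketch below assumes that reading. The `min_fit` threshold, the function names, and the choice to treat only `0` and `1` as bool are assumptions for illustration, not details from the original utility.

```python
import re
from collections import Counter

# Widening order from the text: bool -> integral -> floating -> varchar.
TYPE_ORDER = ["bool", "integral", "floating", "varchar"]
INTEGRAL = re.compile(r"[+-]?\d+")
FLOATING = re.compile(r"[+-]?(\d+\.?\d*|\.\d+)([eE][+-]?\d+)?")


def narrowest_type(value):
    """Return the narrowest type in TYPE_ORDER that can hold value."""
    if value in ("0", "1"):  # assumption: bool is encoded as 0/1
        return "bool"
    if INTEGRAL.fullmatch(value):
        return "integral"
    if FLOATING.fullmatch(value):
        return "floating"
    return "varchar"


def infer_column_type(values, min_fit=0.999):
    """Pick the narrowest type that holds at least min_fit of the non-empty values."""
    counts = Counter(narrowest_type(v) for v in values if v != "")
    total = sum(counts.values())
    if total == 0:
        return TYPE_ORDER[0]  # nothing observed: keep the initial narrow type
    covered = 0
    for type_name in TYPE_ORDER:
        # Each type can also hold every value of the narrower types.
        covered += counts[type_name]
        if covered / total >= min_fit:
            return type_name
    return "varchar"


mostly_integers = ["12"] * 1999 + ["oops"]
tolerant = infer_column_type(mostly_integers)             # one stray value is outvoted
strict = infer_column_type(mostly_integers, min_fit=1.0)  # first-occurrence style widening
```

With the tolerant threshold the stray value does not widen the column; it would instead be rejected in the import phase.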

In the import phase, I did line merging similar to the analysis phase. The only difference is that, with statistics from the first phase, I was able to determine whether a short line (i.e. a line that has a small number of columns) should merge with the previous line or the next line. The criterion is that the fields split from the merged line have to satisfy the schema. I imported over 8 million records and had to reject only 1 record. I visually inspected the rejected record and the data is indeed bad. I used SqlBulkCopy to load the data in batches of 1,000 records. It took about 1 hour and 30 minutes to import the data over the WAN into our SQL Server in the cloud.

In conclusion, rather than determining the table schema fully deterministically, I employed a little bit of statistics in the process. I was able to determine the schema faster (with one pass) and with higher fidelity (rejecting bogus data rather than accepting it by widening the schema). After all, statistics is an important part of machine learning today, and it allows us to inject a little bit of intelligence into the ETL process.
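The merge-direction criterion can be illustrated with a small sketch. It assumes the schema is a list of type names per column and that a record may have fewer fields than the schema because of the truncation described earlier. The names `fits_schema` and `merge_direction`, and the returned labels, are hypothetical.

```python
import re

# Per-type validators; empty fields are always accepted in this sketch.
CHECKS = {
    "integral": re.compile(r"[+-]?\d+").fullmatch,
    "floating": re.compile(r"[+-]?(\d+\.?\d*|\.\d+)([eE][+-]?\d+)?").fullmatch,
    "varchar": lambda value: True,
}


def fits_schema(fields, schema):
    """True if there are not too many fields and every non-empty field matches its column type."""
    if len(fields) > len(schema):
        return False
    return all(v == "" or CHECKS[t](v) for v, t in zip(fields, schema))


def merge_direction(prev_line, short_line, next_line, schema):
    """Decide which neighbour a short line belongs to by testing both merges."""
    with_prev = (prev_line + "\r\n" + short_line).split("\t")
    with_next = (short_line + "\r\n" + next_line).split("\t")
    ok_prev = fits_schema(with_prev, schema)
    ok_next = fits_schema(with_next, schema)
    if ok_prev and not ok_next:
        return "previous"
    if ok_next and not ok_prev:
        return "next"
    return "ambiguous" if ok_prev else "reject"


schema = ["integral", "varchar", "varchar", "integral"]
direction = merge_direction("1\tAlice\tfirst half", "second half\t42", "2\tBob\tmemo\t7", schema)
```

Here the short line completes the previous record, because merging it with the next line would produce five fields for a four-column schema.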

-

Implementing set operations in TSQL

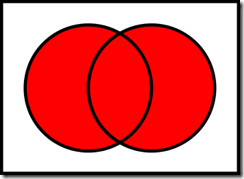

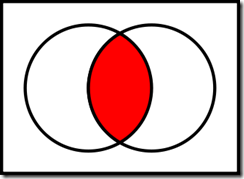

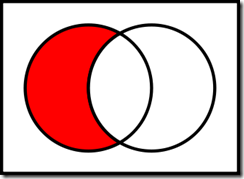

SQL excels at operating on dataset. In this post, I will discuss how to implement basic set operations in transact SQL (TSQL). The operations that I am going to discuss are union, intersection and complement (subtraction).

Union Intersection Complement (subtraction) Implementing set operations using union, intersect and except

We can use TSQL keywords union, intersect and except to implement set operations. Since we are in an election year, I will use voter records of propositions as an example. We create the following table and insert 6 records into the table.

Voters 1, 2, 3 and 4 voted for proposition 30 and voters 4 and 5 voted for proposition 31.

The following TSQL statement implements union using the union keyword. The union returns voters who voted for either proposition 30 or 31.

The following TSQL statement implements intersection using the intersect keyword. The intersection will return voters who voted for both proposition 30 and proposition 31.

The following TSQL statement implements complement using the except keyword. The complement will return voters who voted for proposition 30 but not 31.
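The original statements are not reproduced here, so the following is a stand-in sketch. It runs the three keywords against an in-memory SQLite database from Python instead of SQL Server; for this simple case the `UNION`, `INTERSECT` and `EXCEPT` queries are written the same way in TSQL. The table name `Votes` and its column names are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Votes (VoterId INTEGER, PropId INTEGER)")
# Voters 1-4 voted for proposition 30; voters 4 and 5 voted for proposition 31.
conn.executemany(
    "INSERT INTO Votes VALUES (?, ?)",
    [(1, 30), (2, 30), (3, 30), (4, 30), (4, 31), (5, 31)],
)

P30 = "SELECT VoterId FROM Votes WHERE PropId = 30"
P31 = "SELECT VoterId FROM Votes WHERE PropId = 31"


def voters(sql):
    """Run a query and return the voter ids as a sorted list."""
    return sorted(row[0] for row in conn.execute(sql))


union = voters(f"{P30} UNION {P31}")
intersection = voters(f"{P30} INTERSECT {P31}")
complement = voters(f"{P30} EXCEPT {P31}")

print(union)         # [1, 2, 3, 4, 5]
print(intersection)  # [4]
print(complement)    # [1, 2, 3]
```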

Implementing set operations using join

An alternative way to implement set operations in TSQL is to use full outer join, inner join and left outer join.

The following TSQL statement implements union using full outer join.

The following TSQL statement implements intersection using inner join.

The following TSQL statement implements complement using left outer join.
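The join-based variants can be sketched the same way. As before, this uses an in-memory SQLite database from Python as a stand-in for SQL Server, and the `Votes` table and column names are assumptions. SQLite only supports `FULL OUTER JOIN` from version 3.39, so the union is computed only when the bundled library is new enough.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Votes (VoterId INTEGER, PropId INTEGER)")
conn.executemany(
    "INSERT INTO Votes VALUES (?, ?)",
    [(1, 30), (2, 30), (3, 30), (4, 30), (4, 31), (5, 31)],
)

# Each side of the join is the set of voters for one proposition.
A = "(SELECT VoterId FROM Votes WHERE PropId = 30) a"
B = "(SELECT VoterId FROM Votes WHERE PropId = 31) b"
ON = "ON a.VoterId = b.VoterId"


def voters(sql):
    """Run a query and return the voter ids as a sorted list."""
    return sorted(row[0] for row in conn.execute(sql))


# Intersection: keep only rows that match on both sides.
intersection = voters(f"SELECT a.VoterId FROM {A} INNER JOIN {B} {ON}")

# Complement: keep left rows that found no partner on the right.
complement = voters(
    f"SELECT a.VoterId FROM {A} LEFT OUTER JOIN {B} {ON} WHERE b.VoterId IS NULL"
)

# Union: keep rows from both sides; COALESCE folds the two id columns into one.
union = None
if sqlite3.sqlite_version_info >= (3, 39, 0):
    union = voters(
        f"SELECT COALESCE(a.VoterId, b.VoterId) FROM {A} FULL OUTER JOIN {B} {ON}"
    )
```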

Which one to choose?

To choose which technique to use, just keep two things in mind:

- The union, intersect and except technique treats an entire record as a member.

- The join technique allows the member to be specified in the “on” clause. However, it is necessary to use the Coalesce function to project the sets on the two sides of the join into a single set.

-

Upgrading database to SQL Server 2008

There are minor syntax changes in Transact-SQL that prevent applications written for earlier SQL Server versions from running in SQL Server 2008. It is possible to set the Compatibility Level of a database to support earlier versions of Transact-SQL. The following statement will alter the database to run in full SQL Server 2008 mode (replace YourDatabase with the name of the database):

Alter Database [YourDatabase] Set COMPATIBILITY_LEVEL = 100

One typical problem is that the older, non-ANSI outer join syntax using *= or =* does not work in SQL Server 2008. It is possible to do a quick assessment of offending queries in stored procedures, functions and views using the following SQL statements:

select r.ROUTINE_NAME from INFORMATION_SCHEMA.ROUTINES r where r.ROUTINE_DEFINITION like '%*=%' or r.ROUTINE_DEFINITION like '%=*%'

select v.TABLE_NAME from INFORMATION_SCHEMA.VIEWS v where v.VIEW_DEFINITION like '%*=%' or v.VIEW_DEFINITION like '%=*%'

-

Theory and Practice of Database and Data Analysis (4) – Capturing additional information on relations

So far in this series, I have been talking about traversing related data by navigating down the referential constraints. The physical database makes no distinction about the nature of the foreign key, whether it is parent-child relationship or another type of reference. In logical ER modeling, we often capture more information. So in this blog, I will explore the idea of capturing additional information so that we can build more powerful tools.

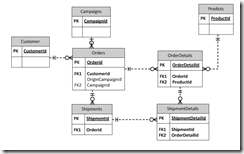

Let me further refrain myself by using the fictitious e-commerce company example introduced in the part 1 of this series: We have an Orders table to capture the order header and OrderDetails table to capture the line items. An order must be placed by a customer, and may or may not originates from marketing campaign. An order may be fulfilled by one or more shipments. Each shipment would ship order line items in part or in full. So we have an ER diagram likes the following:

In the ER diagram, there is a clear parent-child relationship between Orders and OrderDetails and between Shipdments and ShipmentDetails. If we send the data in an XML file, each OrderDetail element would be a child of an Order element and each ShipmentDetail element would be a child element of an Shipment element. However, it appears that ShipmentDetails table has two parents: Shipments and OrderDetails. We will pick the parent by the importance of the relationship so we will pick Shipments as the parent. We then need to establish an additional mechanism to store the cross references between ShipmentDetails and OrderDetails in our XML file.

Let us examine other relations. Customer is a mandatory attribute for the Order table and Product is a mandatory attribute to the OrderDetails. Campaign is an optional attribute of the Orders. We can further classify as Products as system table and Customers as data table.

So we have classified the relations in our simple example into several categories: parent-child, cross-reference, mandatory attribute to system table, mandatory attribute to data table and optional attribute.

Now let us use an example to see how such classification can help us. Suppose we are building a tool to export all data related to a transaction from our production database into an XML file and then import it into our development database. The ID of an element could change from one system to another. That is not a problem with parent-child relationships, as we will get a new key when we insert the parent and we can propagate that down to the children. However, we need to take extra care to ensure that the cross references are maintained.
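The key propagation described above can be sketched with a map from old keys to new keys per table. This is a minimal illustration, not an actual import tool: the `remap` helper, the column names and the use of a counter to simulate newly generated keys are all assumptions.

```python
from itertools import count


def remap(rows, key, new_ids, references):
    """Give each row a new key and rewrite its foreign keys.

    references maps a foreign key column to the old->new id map of the
    table it points at, so parents must be processed before children.
    """
    id_map = {}
    out = []
    for row in rows:
        new_row = dict(row)
        new_row[key] = next(new_ids)
        id_map[row[key]] = new_row[key]
        for column, target_map in references.items():
            new_row[column] = target_map[row[column]]
        out.append(new_row)
    return out, id_map


ids = count(1000)  # stands in for keys generated by the destination database
orders, order_map = remap([{"OrderId": 7}], "OrderId", ids, {})
details, detail_map = remap(
    [{"OrderDetailId": 70, "OrderId": 7}, {"OrderDetailId": 71, "OrderId": 7}],
    "OrderDetailId", ids, {"OrderId": order_map},
)
shipments, shipment_map = remap(
    [{"ShipmentId": 3, "OrderId": 7}], "ShipmentId", ids, {"OrderId": order_map}
)
# The cross reference to OrderDetails is rewritten through detail_map,
# in addition to the parent key coming from shipment_map.
shipment_details, _ = remap(
    [{"ShipmentDetailId": 30, "ShipmentId": 3, "OrderDetailId": 71}],
    "ShipmentDetailId", ids,
    {"ShipmentId": shipment_map, "OrderDetailId": detail_map},
)
```

The parent-child keys fall out of the insertion order, while the cross reference only survives because the OrderDetails map is kept around until ShipmentDetails are processed.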

We do not have to worry about the system table but we do have to worry about the data table. Our development database may or may not have the same customer. So from our classification, we can generically determine that we need to get a copy of mandatory reference to a data table and its children to check for existence in the destination database.
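To make the classification concrete, it could be recorded as a small piece of metadata that a tool consults. The sketch below is one possible shape, with hypothetical class and function names; the relation list mirrors the example, and the `Campaigns` table name is assumed.

```python
from dataclasses import dataclass
from enum import Enum


class RelationKind(Enum):
    PARENT_CHILD = "parent-child"
    CROSS_REFERENCE = "cross-reference"
    MANDATORY_SYSTEM = "mandatory attribute to system table"
    MANDATORY_DATA = "mandatory attribute to data table"
    OPTIONAL = "optional attribute"


@dataclass(frozen=True)
class Relation:
    from_table: str  # table holding the foreign key
    to_table: str    # table being referenced
    kind: RelationKind


RELATIONS = [
    Relation("OrderDetails", "Orders", RelationKind.PARENT_CHILD),
    Relation("ShipmentDetails", "Shipments", RelationKind.PARENT_CHILD),
    Relation("ShipmentDetails", "OrderDetails", RelationKind.CROSS_REFERENCE),
    Relation("Orders", "Customers", RelationKind.MANDATORY_DATA),
    Relation("OrderDetails", "Products", RelationKind.MANDATORY_SYSTEM),
    Relation("Orders", "Campaigns", RelationKind.OPTIONAL),
]


def tables_to_check_in_destination(relations):
    """Data tables referenced by a mandatory attribute must exist in the target."""
    return sorted(
        {r.to_table for r in relations if r.kind is RelationKind.MANDATORY_DATA}
    )


to_check = tables_to_check_in_destination(RELATIONS)  # ['Customers']
```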

Besides categorization, we would also like to capture one-to-one relationships. Some of them can be inferred: if the columns of a referential constraint form the primary key in both tables, the relationship is one-to-one. We would also like to capture hidden relationships; these are real references that cannot be enforced by a foreign key constraint in SQL Server. For example, some tables contain an ID column that can store multiple types of IDs, with a separate column indicating the type of ID stored in the ID column.

With human input of extra information beyond what we can extract from physical databases, we should be able to build better tools. I will explore some tools in upcoming posts of this series.

-

Theory and Practice of Database and Data Analysis (3) – Exhaustive Search by Traversing Table Relationships

In this post, I will discuss building a script to find all the relevant data starting from a table name and a primary key value. In the previous post, I discussed how to get a list of tables that reference a table. From a parent table, we just need to get a list of child tables. We then loop through each child table to select records using the parent primary key value. If the child tables themselves have child tables, we repeat the same approach by recursively getting the grandchildren.

I often need to remote-desktop into clients' systems where I only have read privileges; I can neither create stored procedures nor execute them. So I am going to present a script that requires only read privileges.

In a Transact-SQL (T-SQL) batch, looping through records can be implemented using a cursor or a temp table. I used the latter approach because a temp table can grow, so I can use it for recursion as well.

Recursion is a harder problem here. Recursion can be done in most programming languages by calling procedures recursively. However, that is not possible here since I am restricted to a batch. In computer science, recursion can be implemented with a loop and a stack. In many programming languages, when a caller calls a callee, the program saves the current local data as well as the return location of the caller on the stack. The area of the stack used by each call is called a stack frame. Once the callee returns, the program restores the local data from the stack and resumes from the previous location.

In my script, I use the temp table #ref_contraints to accomplish both looping and recursion. Referential constraints from the top table are added to the table as they are discovered and removed from the table when they are consumed (that is, no longer needed). I used depth-first traversal in my script. With this structure, I can change to breadth-first traversal with minimal effort.
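The idea of replacing recursion with a loop over a growing work list can be shown outside of T-SQL. The following Python sketch is not the script from this post. It walks a hypothetical parent-to-children map, where the author's script walks referential constraints held in a temp table. It shows why switching between depth-first and breadth-first only changes which end of the work list is consumed.

```python
from collections import deque


def traverse(root, children, breadth_first=False):
    """Visit every table reachable from root without recursive calls.

    children maps a table name to the tables that reference it.
    The pending work list plays the role of the call stack.
    """
    pending = deque([root])
    seen = set()
    visited = []
    while pending:
        # Taking the newest entry gives depth-first; the oldest, breadth-first.
        table = pending.popleft() if breadth_first else pending.pop()
        if table in seen:
            continue
        seen.add(table)
        visited.append(table)  # a real tool would query the table here
        kids = children.get(table, [])
        # Reverse for depth-first so children are visited in listed order.
        pending.extend(kids if breadth_first else reversed(kids))
    return visited


children = {
    "Orders": ["OrderDetails", "Shipments"],
    "OrderDetails": ["ShipmentDetails"],
    "Shipments": ["ShipmentDetails"],
}
depth_first = traverse("Orders", children)
breadth_first = traverse("Orders", children, breadth_first=True)
```

Entries are added as they are discovered and removed as they are consumed, which is the same life cycle the temp table has in the script.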

The #keyvalues temp table contains the primary key values for the table that I have already traversed. I can get the records from the child tables by joining to the key values in this table so that I only need to query each child table once for each foreign key relationship.

In order to make the script simpler, I eliminate the schema and assume all tables are under the schema “dbo”. This works with our database, and works with databases in Microsoft Dynamics CRM 2011.

I have also assumed that all primary keys contain only one integer column. This is true with our database. This is also true with Microsoft Dynamics CRM 2011, except that you need to change the integer ID to a GUID.

I added comments to the script so that one would know where the code would correspond to looping and procedure calling if the code were written in a language like VB.NET or C#. The comments also indicate the insertion points if additional code is needed.

In future posts of this series, I will discuss how to capture human intelligence to make search even more powerful.

-

Theory and Practice of Database and Data Analysis (2) – Navigating the table relationships

In the first part of the series, I discussed how to use the SQL Server metadata to find database objects. In this part, I will discuss how to find all the data related to a transaction by navigating the table relationships. Let us suppose that the transaction is a purchase order stored in a table called Orders. Anyone who has seen the Northwind database knows that it has a child entity called OrderDetails. In even a modest real-world database, the complexity can grow very fast. If we can ship a partial order and put the rest on back order, or we need to ship from multiple warehouses, we could have multiple Shipping records for each Order record and have each ShippingDetails record linked to an OrderDetails record. Customers can return the order, either in full or in part, with or without a return authorization number, and the actual return may or may not match the return authorization. The system needs to be flexible enough to capture all the events related to the order. As you can see, a very modest order transaction could easily grow to a dozen tables. If you are not familiar with the database, how can you find all the related tables? If you need to delete a record, how do you delete it cleanly? If you need to copy a transaction from a production system to the development system, how do you copy the entire set of data related to the transaction?

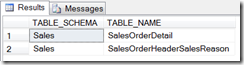

Fortunately, in any well-designed database, table relationships can be navigated through the foreign key relationships. In SQL Server, we can usually find the information using the sp_help stored procedure. Here we will use INFORMATION_SCHEMA to obtain more refined results to be used in our tools. The TABLE_CONSTRAINTS view contains the primary and foreign keys on a table. The REFERENTIAL_CONSTRAINTS view contains the name of the foreign key on the many side and the name of the unique constraint on the one side. It is possible to navigate the table relationships using these two views. The following query will find all the tables that reference the Sales.SalesOrderHeader table in the AdventureWorks database:

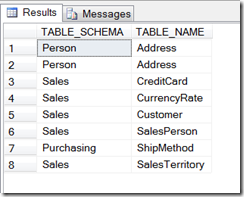

The following query will return all the tables referenced by the Sales.SalesOrderHeader table:

And the results:

The Person.Address table appeared twice because we have two columns referencing the table.

The CONSTRAINT_COLUMN_USAGE view contains the columns in the primary and the foreign keys. By using these 3 views, we can construct a simple tool that drills down into the data. In the next part of this series, we will construct such a tool.

Added by Li Chen 9/26/2011

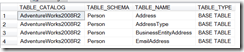

The KEY_COLUMN_USAGE view also contains the columns in the primary and the foreign keys. I prefer to use this view because it also contains the ORDINAL_POSITION that I can use to match the columns in the primary and the foreign keys when we have composite keys. The following example shows how to match the columns. The last line in the query eliminates the records from a table joining to itself.

-

Theory and Practice of Database and Data Analysis (1) – Searching for objects

Recently, I had to spend a significant portion of my time on production data support. Since we have a full-featured agency management system, I have to deal with parts of the application that I am not familiar with. Through accumulated experience and improved procedures, I was able to locate problems with increasing speed. In this series, I will try to document my experiences and approaches. In the first part, I will discuss how to search for objects.

Suppose we are told that there are problems in the address data. The very first thing we need to do is to locate the table and the field that contain the data. Microsoft SQL Server has a set of sys* tables. It also supports the ANSI-style INFORMATION_SCHEMA. In order to reuse the knowledge discussed here on other database systems, I will try to use INFORMATION_SCHEMA as much as possible. So to find any table that is related to “Address”, we can use the following query:

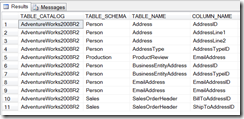

Using the AdventureWorks 2008 sample database, it yields the following results:

If we suspect there are columns in other tables relating to address, we can search for the columns with the following query:

We will get the following results this time:

Suppose we need to find all the stored procedures and functions that reference a table; we can use the sp_depends stored procedure. If we need to search thoroughly using a string, we can use the following query:

However, we cannot find triggers through Information_Schema. We have to use sysobjects:

Once we find the triggers, we can find the text using sp_helptext or query the syscomments table.

In the next part of the series, I will discuss navigating the table relationships.