Li Chen's Blog

-

Lessons from the ASP Classic Compiler project

I have not done much with my ASP Classic Compiler project for over a year now. The lack of additional work is due to both economic and technical reasons. I will try to document the reasons here, both for myself and for would-be open source developers.

How did the project get started?

I joined my current employer at the end of 2008. The company had a large amount of ASP Classic code in its core product. The company was in talks with a major client about customization, so there was a period when the development team had a fairly light load. I had a chance to put a lot of thought into how to convert the ASP Classic code to ASP.NET. One of the ideas was to compile ASP Classic code into .NET IL so that it could be executed within the ASP.NET runtime.

I do not have a formal education in computer science; my Ph.D. is in physics. I had been very fond of writing parsers by following the book “Writing Compilers & Interpreters” since 1996. Using the knowledge acquired, I wrote a VBA-like interpreter and a mini web browser, both in Visual Basic. Nevertheless, a full-featured compiler is still a major undertaking. I spent several months of my spare time working on the parser and compiler, and studied the theory behind them. I was able to implement all the VBScript 3.0 features, and the compiler was able to execute several Microsoft best-practice applications. The code ran about twice as fast as ASP Classic. I had lots of fun experimenting with ideas such as using StringBuilder for string concatenation to drastically improve the speed of rendering. I was awarded Microsoft ASP/ASP.NET MVP in 2010. In 2011, I donated the project to the community by opening the source code after consulting with my employer.

Since then, I have faced challenges on two fronts: adding VBScript 5.0 support and solving a large number of compatibility issues in the real world. After working for a few more months, I found it difficult to sustain the project.

Lesson 1: the economic and business issues

1. Sustainability of an open source project requires business arrangements. I am an employee. Technical activities outside of work are welcome as long as they benefit the employer. However, an excessive amount of activity unrelated to work could become a loyalty issue.

2. ASP Classic is a shrinking platform. So ASP Classic Compiler would not be a sound investment for most businesses, except for Microsoft, which has an interest in moving customers from older to newer platforms painlessly.

Lesson 2: the technical issues

1. VBScript is a terrible language without a formal specification or test suites. The best “spec” outside of the users’ guide is probably Eric Lippert’s blog. VBScript is not getting much maintenance and it is losing favor. As a result, few other developers with compiler knowledge would have an interest in VBScript.

2. Like the IronPython and Rhino projects, I started by writing a compiler. I was thrilled that my first implementation, without much optimization, was about twice as fast as VBScript in my benchmark test. However, I ran into many obstacles with compatibility and with adding the debug feature. If I were to do it again, I would probably implement it as an interpreter with an incremental compiler. The interpreter would have a smaller start-up overhead. I could then have a background thread compile the heavily used code. The delayed compilation would allow me to employ more sophisticated data-flow analysis and construct larger call-site blocks. The current naïve implementation on the DLR results in too many very small call sites, so type checking at call sites is a significant overhead.
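
The interpreter-first design described above can be sketched in a few lines of Python. This is only an illustration of the idea, not code from the ASP Classic Compiler: the `TieredExpression` class, the `HOT_THRESHOLD` value and the tiny arithmetic-only interpreter are all hypothetical, and the promotion to compiled code happens synchronously here rather than on a background thread.

```python
import ast
import operator

# Operators understood by the toy interpreter (an assumption for this sketch).
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


def interpret(node, env):
    """Walk the syntax tree on every call: no compile cost, slower per call."""
    if isinstance(node, ast.Expression):
        return interpret(node.body, env)
    if isinstance(node, ast.BinOp):
        left = interpret(node.left, env)
        right = interpret(node.right, env)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Name):
        return env[node.id]
    if isinstance(node, ast.Constant):
        return node.value
    raise NotImplementedError(type(node).__name__)


class TieredExpression:
    HOT_THRESHOLD = 3  # hypothetical: calls served by the interpreter first

    def __init__(self, source):
        self.tree = ast.parse(source, mode="eval")
        self.calls = 0
        self.code = None  # set once the expression is considered hot

    def evaluate(self, **env):
        self.calls += 1
        if self.code is None and self.calls > self.HOT_THRESHOLD:
            # Pay the compile cost only for code that is used often.
            self.code = compile(self.tree, "<tiered>", "eval")
        if self.code is not None:
            return eval(self.code, {"__builtins__": {}}, env)
        return interpret(self.tree, env)


expr = TieredExpression("x * 2 + y")
results = [expr.evaluate(x=i, y=1) for i in range(5)]
print(results, "compiled:", expr.code is not None)
```

The first three calls go through the interpreter and the remaining ones run the compiled code object, yet the caller sees the same results either way.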

In summary, I had lots of fun with the project. I significantly improved my theoretical knowledge of algorithms in general, and of parsers and compilers in particular. I had the honor of being awarded Microsoft ASP.NET/IIS MVP for the past 3 years and enjoyed my private copy of an MSDN subscription at home. After a working prototype, the remaining work to take it to production-quality software is more a business problem than anything else. I am actively contemplating some new forward-looking ideas and hope to have some results to share soon.

-

ASP.NET and Open Source

I just came back from the Microsoft MVP Summit 2013. I was surprised and excited to learn that there are a large number of open source projects from both inside and outside of Microsoft. There is also strong support for open source frameworks in Visual Studio. I am glad to see that the Microsoft ASP.NET team has done a great job supporting open source and that the community is going strong.

Open source projects from the ASP.NET team

Those who are interested in the open source projects from the ASP.NET team should first visit http://aspnet.codeplex.com/. This site is a portal to many ASP.NET features for which Microsoft has opened the source code.

Next, readers should visit http://aspnetwebstack.codeplex.com/. This site is the home of the latest source code of MVC, Web API and Web Pages.

The following are several open source projects that have been incorporated into Visual Studio:

- http://nuget.codeplex.com/: NuGet is now distributed with Visual Studio 2012.

- https://github.com/SignalR: SignalR, by Damian Edwards and David Fowler, is a framework for building long-polling applications. As of ASP.NET and Web Tools 2012.2, SignalR is part of ASP.NET and a Microsoft-supported project. Don’t forget to change the namespace if you are using an earlier version.

The following projects are considered experimental:

- http://owin.org/: A specification of a standard interface between .NET web servers and web applications.

- http://katanaproject.codeplex.com/: An implementation of OWIN.

Projects from outside of Microsoft

If you think there is no need for another framework since ASP.NET MVC is already great, you may be surprised to find out that some open source alternatives have actually attracted many followers:

- http://nancyfx.org/: Look at what they have built and the number of contributors.

- http://mvc.fubu-project.org/: Another MVC framework that has a large number of contributors.

- The OWIN home page (http://owin.org/) has links to other projects.

Open Source Client-Side MVC or MVVM frameworks supported by Microsoft Visual Studio

Microsoft ASP.NET and Web Tools 2012.2 comes with a single page application (SPA) project template that uses KnockoutJS. However, Mads Kristensen also built project templates for several other highly popular JavaScript SPA libraries and frameworks: Breeze, EmberJS, DurandalJS and Hot Towel. Visit http://www.asp.net/single-page-application/overview/templates for download links.

Don’t forget to install Mads’ Web Essentials extension for Visual Studio 2012. You will be pleasantly surprised by the number of features that this extension adds to Visual Studio 2012.

Final Notes

I gathered these links for myself and others. If I missed any links, please post a comment or send me a note. I will be glad to update this page.

-

Build a dual-monitor stand for under $20

Like many software developers, I use a laptop as my primary computer. At home, I like to connect it to an external monitor and use the laptop screen as the secondary monitor. I like to bring the laptop screen as close to my eyes as possible. I got an idea when I visited IKEA with my wife yesterday.

IKEA carries a wide variety of legs and boards. I bought a box of four 4” legs for $10 (the website shows $14 but I got mine in the store for $10) and a 14” by 46” pine wood board for $7.50. After a simple assembly, I got my sturdy dual-monitor stand.

I place both my wireless keyboard and mouse partially underneath the stand. That allows me to bring the laptop closer. The space underneath the stand can be used as temporary storage space. This simple idea worked pretty well for me.

-

Importing a large file into a SQL Server database employing some statistics

I imported a 2.5 GB file into our SQL Server today. What made this import challenging was that the SQL Server Import and Export Wizard failed to read the data. The file is tab-delimited with CR/LF as the record separator. Lines are truncated if the rest of the line is empty, resulting in a variable number of columns in each record. Also, there is a memo field that contains CR/LF, so it is not reliable to use CR/LF to break the records.

I had to write my own import utility. The utility does the import in two phases: analysis and import. In the first phase, the utility reads the entire file to determine the number of columns, the data type of each column, its width and whether it allows null. In order to address the CR/LF problem, the utility uses the following algorithm:

1. Read a line to determine the number of columns in the line.

2. Peek at the next line and determine the number of columns in it. If the sum of the two numbers is less than the expected column count, the second line is a candidate for merging into the first line. Repeat this step.
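
The merge steps above can be sketched in Python as follows. This is a minimal illustration, not the utility itself: the function name `merge_broken_lines` is hypothetical, and the sketch assumes that the "number of columns" of a line is measured by counting its tab delimiters, so that the sum test corresponds to a merged record that is no wider than the expected column count. It also merges greedily, so a genuinely truncated short record could be merged by mistake, which is why the result is only a candidate.

```python
def merge_broken_lines(lines, expected_columns, delimiter="\t"):
    """Rejoin physical lines that were split by a CR/LF inside a field.

    Assumption: column counts are measured as delimiter counts per line.
    """
    records = []
    current = None
    for line in lines:
        if current is None:
            current = line
            continue
        current_count = current.count(delimiter)
        next_count = line.count(delimiter)
        if current_count + next_count < expected_columns:
            # Candidate for merging: put the embedded line break back.
            current = current + "\n" + line
        else:
            records.append(current)
            current = line
    if current is not None:
        records.append(current)
    return records


lines = [
    "1\tAnn\tline one of memo",   # memo field broken by an embedded CR/LF
    "line two of memo\tLA",
    "2\tBob\tshort memo\tNY",
]
records = merge_broken_lines(lines, expected_columns=4)
for record in records:
    print(record.split("\t"))
```

The first two physical lines come back as one record with four fields, and the third line is left alone.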

When determining the column type, I first initialize each column with a narrow type and then widen the column when necessary. I widen the columns in the following order: bool -> integral -> floating -> varchar. Because I am not completely sure that I merged the lines correctly, I relied on probability rather than the first occurrence to widen a field. This allows me to run the analysis phase in only one pass. The drawback is that I do not have 100% confidence in the extracted schema; data that does not fit the schema has to be rejected in the import phase. The analysis phase took about 10 minutes on a fairly slow (by today’s standards) dual-core Pentium machine.
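
One way to read "probability rather than first occurrence" is to tally, per column, the narrowest type each value needs and to widen only when more than a small fraction of values demand it. The sketch below follows that reading. The `tolerance` parameter, the function names and the use of Python's `int()` and `float()` parsers (which are looser than SQL Server's conversions) are assumptions made for illustration; the post does not state the actual threshold.

```python
from collections import Counter

TYPE_ORDER = ["bool", "integral", "floating", "varchar"]  # narrow to wide


def narrowest_type(value):
    """Return the narrowest type in TYPE_ORDER that can hold this value."""
    text = value.strip()
    if text.lower() in ("0", "1", "true", "false"):
        return "bool"
    try:
        int(text)
        return "integral"
    except ValueError:
        pass
    try:
        float(text)
        return "floating"
    except ValueError:
        return "varchar"


def infer_column_type(values, tolerance):
    """Pick the narrowest type that fits all but a tolerated share of values.

    tolerance is a hypothetical knob: the fraction of non-empty values that
    may fail to fit (and would later be rejected in the import phase).
    """
    counts = Counter(narrowest_type(v) for v in values if v != "")
    total = sum(counts.values())
    for index, name in enumerate(TYPE_ORDER):
        needs_wider = sum(counts[t] for t in TYPE_ORDER[index + 1:])
        if needs_wider <= tolerance * total:
            return name
    return TYPE_ORDER[-1]  # not reached: varchar always fits


column = ["12"] * 999 + ["oops"]  # one stray value, e.g. from a bad merge
print(infer_column_type(column, tolerance=0.01))  # integral
print(infer_column_type(column, tolerance=0.0))   # varchar
```

With a zero tolerance the single stray value forces the column to varchar, which is the first-occurrence behavior; with a small tolerance the column stays integral and the stray record is left to be rejected later.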

In the import phase, I did line merging similar to the analysis phase. The only difference is that, with statistics from the first phase, I was able to determine whether a short line (i.e. a line that has a small number of columns) should merge with the previous line or the next line. The criterion is that the fields split from the merged line have to satisfy the schema. I imported over 8 million records and had to reject only 1 record. I visually inspected the rejected record and the data was indeed bad. I used SqlBulkCopy to load the data in batches of 1,000 records. It took about 1 hour and 30 minutes to import the data over the WAN into our SQL Server in the cloud.
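
The merge criterion above can be illustrated with a small Python sketch. Everything here is an assumption for the sake of the example: the function names, the four-column schema, the rule that every column is nullable, and the order in which the two merge directions are tried.

```python
PARSERS = {"integral": int, "floating": float}


def fits(value, column_type):
    """Check one field against one column type (empty counts as null)."""
    if value == "" or column_type == "varchar":
        return True
    if column_type == "bool":
        return value.lower() in ("0", "1", "true", "false")
    try:
        PARSERS[column_type](value)
        return True
    except ValueError:
        return False


def satisfies_schema(record, schema, delimiter="\t"):
    """A record may be shorter than the schema (truncated) but not longer."""
    fields = record.split(delimiter)
    if len(fields) > len(schema):
        return False
    return all(fits(f, t) for f, t in zip(fields, schema))


def place_short_line(previous, short, following, schema):
    """Decide which neighbor a short line should be merged with."""
    if satisfies_schema(previous + "\n" + short, schema):
        return "previous"
    if satisfies_schema(short + "\n" + following, schema):
        return "next"
    return "neither"


# Hypothetical schema: id, name, memo, zip code.
schema = ["integral", "varchar", "varchar", "integral"]

tail = place_short_line(
    "1\tAnn\tmemo part one", "part two\t90001", "2\tBob\tmemo\t90002", schema)
head = place_short_line(
    "1\tAnn\tmemo\t90001", "2\tBob\tmemo start", "memo end\t90002", schema)
print(tail, head)
```

In the first case the short line is the tail of a broken memo, so it belongs to the previous line; in the second it is the head of a broken record, so it belongs to the next line.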

In conclusion, rather than determining the table schema fully deterministically, I employed a little bit of statistics in the process. I was able to determine the schema faster (in one pass) and with higher fidelity (rejecting bogus data rather than accepting it by widening the schema). After all, statistics is an important part of machine learning today and it allows us to inject a little bit of intelligence into the ETL process.

-

While Lumia 900 is already good, Lumia 920 is even more fluid

I got my Lumia 900 in April 2012. A few months later, I was disappointed when the news broke that the Lumia 900 could not be upgraded to Windows Phone 8. Luckily, AT&T allows an early upgrade to the Lumia 920, so I can continue developing software for the latest Windows Phone OS without waiting for my two-year commitment to end.

The Lumia 900 was known for excellent software optimization, so the phone felt very fast even with a single-core processor. Now the Lumia 920, with its Snapdragon S4 processor, feels blazingly fast. The most obvious improvement is in acquiring a GPS signal; it is almost instant now when I start an app that needs GPS.

Apart from the phone being faster and more fluid, I also like the new tile size options that allow me to organize my start screen better. The new phone has a slightly larger display (4.5” vs. 4.2”) though the physical dimensions remain the same. The phone has larger storage (32GB vs. 16GB) that allows me to store more free Nokia Drive offline maps on my phone so that I can use the GPS without using my monthly data plan.

So much for my first impressions. I will share more when I start developing for Windows Phone 8.

-

Implementing set operations in TSQL

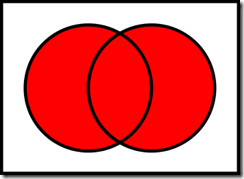

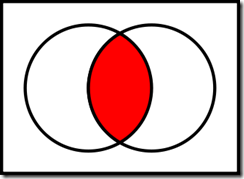

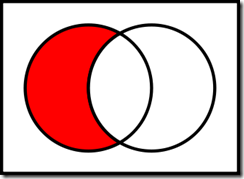

SQL excels at operating on sets of data. In this post, I will discuss how to implement basic set operations in Transact-SQL (TSQL). The operations that I am going to discuss are union, intersection and complement (subtraction), shown in that order in the three diagrams above.

Implementing set operations using union, intersect and except

We can use TSQL keywords union, intersect and except to implement set operations. Since we are in an election year, I will use voter records of propositions as an example. We create the following table and insert 6 records into the table.

Voters 1, 2, 3 and 4 voted for proposition 30 and voters 4 and 5 voted for proposition 31.

The following TSQL statement implements union using the union keyword. The union returns voters who voted for either proposition 30 or 31.

The following TSQL statement implements intersection using the intersect keyword. The intersection will return only the voters who voted for both proposition 30 and 31.

The following TSQL statement implements complement using the except keyword. The complement will return voters who voted for proposition 30 but not 31.
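
The three operations described above can be tried out with the sketch below. Note that this is not T-SQL running on SQL Server: it uses Python's built-in `sqlite3` module, whose SQL dialect also supports the union, intersect and except keywords. The table name `Votes` and the column names `VoterId` and `PropId` are assumptions; the six rows follow the voter example above.

```python
import sqlite3

# Hypothetical table and column names; the data follows the example above.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Votes (VoterId INTEGER, PropId INTEGER)")
conn.executemany(
    "INSERT INTO Votes (VoterId, PropId) VALUES (?, ?)",
    [(1, 30), (2, 30), (3, 30), (4, 30), (4, 31), (5, 31)],
)

FOR_30 = "SELECT VoterId FROM Votes WHERE PropId = 30"
FOR_31 = "SELECT VoterId FROM Votes WHERE PropId = 31"


def voters(sql):
    """Run a query and return the voter ids as a sorted list."""
    return sorted(row[0] for row in conn.execute(sql))


union_ids = voters(f"{FOR_30} UNION {FOR_31}")          # voted for 30 or 31
intersect_ids = voters(f"{FOR_30} INTERSECT {FOR_31}")  # voted for both
except_ids = voters(f"{FOR_30} EXCEPT {FOR_31}")        # 30 but not 31

print(union_ids, intersect_ids, except_ids)
# [1, 2, 3, 4, 5] [4] [1, 2, 3]
```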

Implementing set operations using join

An alternative way to implement set operations in TSQL is to use full outer join, inner join and left outer join.

The following TSQL statement implements union using full outer join.

The following TSQL statement implements intersection using inner join.

The following TSQL statement implements complement using left outer join.
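
The join-based variants can be sketched the same way. As before, this runs on SQLite through Python's `sqlite3` module rather than on SQL Server, and the `Votes` table with its `VoterId` and `PropId` columns is an assumption. SQLite only gained full outer join in version 3.39, so the sketch falls back to an emulation on older versions; that fallback is a workaround for this example, not part of the technique.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Votes (VoterId INTEGER, PropId INTEGER)")
conn.executemany(
    "INSERT INTO Votes (VoterId, PropId) VALUES (?, ?)",
    [(1, 30), (2, 30), (3, 30), (4, 30), (4, 31), (5, 31)],
)

# The two sets being combined, as derived tables a and b.
A = "(SELECT VoterId FROM Votes WHERE PropId = 30) AS a"
B = "(SELECT VoterId FROM Votes WHERE PropId = 31) AS b"
ON = "ON a.VoterId = b.VoterId"


def voters(sql):
    """Run a query and return the voter ids as a sorted list."""
    return sorted(row[0] for row in conn.execute(sql))


if sqlite3.sqlite_version_info >= (3, 39, 0):
    # Union: keep rows from both sides, then project them into one column.
    union_ids = voters(
        f"SELECT COALESCE(a.VoterId, b.VoterId) FROM {A} FULL OUTER JOIN {B} {ON}"
    )
else:
    # Older SQLite: emulate the full outer join with two left joins.
    union_ids = voters(
        f"SELECT COALESCE(a.VoterId, b.VoterId) FROM {A} LEFT OUTER JOIN {B} {ON} "
        f"UNION ALL "
        f"SELECT b.VoterId FROM {B} LEFT OUTER JOIN {A} {ON} WHERE a.VoterId IS NULL"
    )

# Intersection: only rows that match on both sides survive an inner join.
intersect_ids = voters(f"SELECT a.VoterId FROM {A} INNER JOIN {B} {ON}")

# Complement: rows of a that found no partner in b.
except_ids = voters(
    f"SELECT a.VoterId FROM {A} LEFT OUTER JOIN {B} {ON} WHERE b.VoterId IS NULL"
)

print(union_ids, intersect_ids, except_ids)
```

Unlike the keyword-based versions, joins do not remove duplicates, so this sketch relies on each voter appearing at most once per proposition.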

Which one to choose?

To choose which technique to use, just keep two things in mind:

- The union, intersect and except technique treats an entire record as a member.

- The join technique allows the member to be specified in the “on” clause. However, it is necessary to use the Coalesce function to project the sets on the two sides of the join into a single set.

-

Gave two presentations at LA code camp today

I gave two presentations at LA code camp today. The following are the links to the presentation materials:

-

Upgrading to Windows 8

As MSDN members can download Windows 8 now, it is time for me to upgrade to Windows 8. Although I upgraded successfully, my journey is a long story. Hopefully my experience can save you some time.

- I had a 120GB SSD that had only 11GB of free space left. I was not comfortable with the limited space, so I ordered a 240GB SSD. I used Windows 7 to make a Windows image backup to an external drive. I then booted from the Windows 7 DVD and restored my image to the new drive. It took only 30 minutes to back up, but several hours to restore. After restoring, I went to Computer Management to expand my C drive to use the remaining free space on my new SSD.

- I then tried to download Windows 8 from MSDN. I am running Windows 7 Ultimate. I was not sure which edition of Windows 8 to download. According to this chart, I can upgrade from Windows 7 Ultimate to Windows 8 Pro, but I could only find the Windows 8 Pro volume licensing edition in MSDN, which I am not entitled to download. It turns out that both Windows 8 and Windows 8 Pro use the same media; the activation key decides which edition to activate.

- The Windows 8 upgrader asked me to uninstall Microsoft Security Essentials. This is because Windows 8 has Windows Defender built in.

- The Windows 8 user interface is not entirely intuitive. Fortunately, there is a short video from Mike Halsey that allows one to get familiar quickly.

- After the upgrade, there was a message asking me to uninstall Virtual CloneDrive. This is because Windows 8 has a built-in feature to mount ISO and VHD files. This is a nice addition.

So much for my first day of experiments. See you next time.

-

Gave a presentation on C# dynamic and the Dynamic Language Runtime (DLR) at the SoCal .NET User Group meeting

You may download my presentation materials here.

New to my sample collection is using DynamicObject to construct a dynamic proxy. In contrast to a typical aspect-oriented programming (AOP) framework, with which you can only add cross-cutting concerns to methods of an inheritable class or an interface, a dynamic proxy does not have this limitation.
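
The idea can be illustrated with a Python analog; this is not the C# sample from the presentation. In C#, the corresponding hook on DynamicObject is TryInvokeMember; in Python, `__getattr__` plays a similar role. The `LoggingProxy` and `Calculator` names are hypothetical, and logging stands in for any cross-cutting concern.

```python
class LoggingProxy:
    """Wrap any object and log every method call made through the proxy."""

    def __init__(self, target, log):
        self._target = target
        self._log = log

    def __getattr__(self, name):
        # Only called for names not found on the proxy itself.
        attr = getattr(self._target, name)
        if not callable(attr):
            return attr

        def wrapper(*args, **kwargs):
            self._log.append(f"enter {name}")
            try:
                return attr(*args, **kwargs)
            finally:
                self._log.append(f"exit {name}")

        return wrapper


class Calculator:
    """A plain class: no interface and no special base class required."""

    def add(self, a, b):
        return a + b


log = []
proxy = LoggingProxy(Calculator(), log)
result = proxy.add(2, 3)
print(result, log)
# 5 ['enter add', 'exit add']
```

Because the call is resolved by name at run time, the wrapped class needs neither virtual methods nor an interface.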

-

Applying algorithms to a real-life problem

Today I encountered a real-life problem. My wife got some fresh pecans. After we cracked the pecans and took the large pieces, there were some smaller pieces of pecan and shell fragments left in the bowl. My wife asked the kids to separate the pecan from the shell. There were three proposals:

1) Pick the pecan out. The trouble is that we have more pecan pieces than shell pieces. The time for this approach is proportional to the number of pecan pieces.

2) Pick the shell out. It is not clear whether the time would be small because we still need to scan (with our eyes) among the pecans to find the shell. In addition, it is hard to be sure that we are free of shells at the end.

3) Pour them in water and hope one is heavier than water and the other is lighter. This idea was never tested.

Then I proposed a 4th approach: grab a few pieces in my palm and apply 1) or 2), whichever is faster. This worked out really well.

The key is that after I scan the pecan and shells in my palm, I never scan the same pieces again. The fast algorithms, whether searching for a word in a string, sorting a list, or finding the quickest route from point A to point B, all work by finding a smart way to minimize repeated operations on the same data.