“Try to avoid foreach/for loops”–Over my Dead Body!

Before I get into this a little bit, know that my comments are a direct response to this post: http://www.codekicks.com/2011/01/try-to-avoid-foreachfor-loops.html. The reason I’m writing this post is because the author is making invalid comparisons through his use of each of the looping mechanisms in C# to QUICKLY come to the conclusion that do-while and while are faster than for/foreach. I’ve done my own tests modeled after the author’s tests, but with significantly different results. As a computer scientist, I want to make sure I actually analyze the data before coming to a conclusion about the runtime behavior.

What He Got Wrong

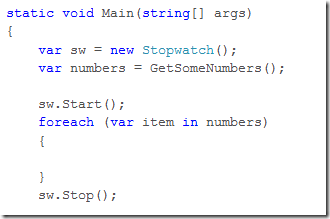

The first test he’s running is a foreach loop. This all fine and good… this is something we probably do on a daily basis.

What is the foreach loop actually doing? The compiler translates this into a local variable for the enumerator and a boolean. In IL, one of my runs produced a binary with the IEnumerator being named CS$5$0000. Then the compiler translates the body and the behavior of the foreach into a bunch of labels in IL. First MoveNext is called and the body of the forloop (now a label) is jumped to and executed. Then the MoveNext is called again and the loop starts over.

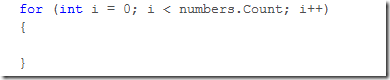

The next thing the author moves to is the for loop. Here’s his code:

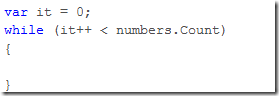

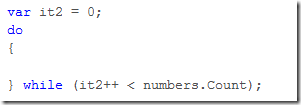

What this is actually doing is COMPLETELY different from the semantics of the forloop. What he’s doing is just running a for loop on a separate integer. He’s not running through the iterator. This is the thing that invalidates his tests. He has WAY too many experimental variables, leading to invalid comparisons. The same fallacy occurs in his while test and the do-while tests:

He’s not actually iterating through the list of numbers. This is a huge issue. You can’t possibly make valid comparisons when you completely change your test scheme. This is just bad test design.

The Right Idea

The idea of testing the different looping mechanisms isn’t a bad one. However, his test design doesn’t really take a few things into account that is really significant to the outcome of the time.

First of all, GC is a huge issue. He’s running all 4 tests in succession on the same thread. The GC in .NET, unlike the looping, can kick in whenever it damn well pleases to cleanup garbage. When you’re dealing with 10 million items, there’s bound to be at least 1 GC run in there somewhere, which can significantly mess with the times of the stopwatch. The stopwatch is a really dumb object… it just keeps track of CPU clicks. It has NO idea what the GC is up to.

A better design would be to simply run 1 trial at a time. That way, the order of the tests doesn’t influence the GC.

Wrong Data Structure

The author probably doesn’t know how lists are implemented (as I suspect most .NET devs don’t). If you’ve ever taken a class in C++ or C, you know that you have to manually resize arrays yourself if you’re going to have expandable array (“list”) behavior. The author chose to use a list to add 10 million items in it. The list, when setup, doesn’t know how many items you’ll be adding to the data structure. It choses an arbitrary size, allocates an array of that size, and expands if you add more items than the size it picked. But when it expands, it has to do a complete copy of the array EVERY SINGLE TIME an item is added (see the iternals of Insert on list). Since you already know the size of the data, just use an array to begin with!

Let’s Test This For Real

I’m done ranting about a poorly design perf test. It’s time to run my own. The proof is in the pudding.

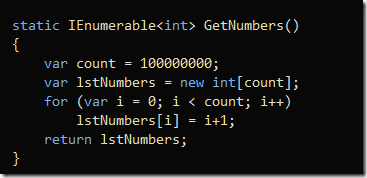

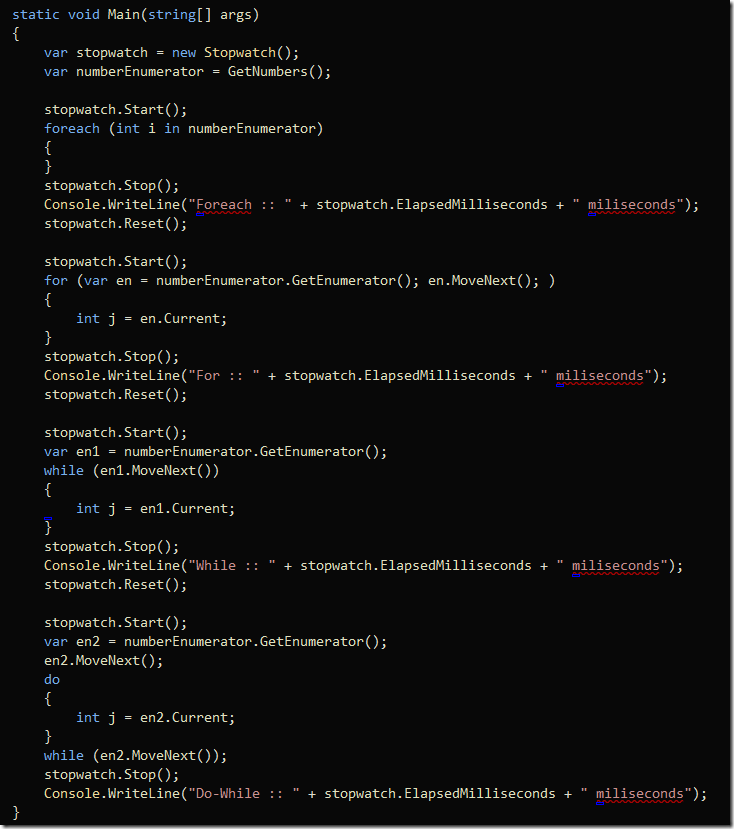

First I’m going to run identical tests in succession and let GC do whatever it wants. As you can see from my code, I’m using iterators EVERY TIME for consistency.

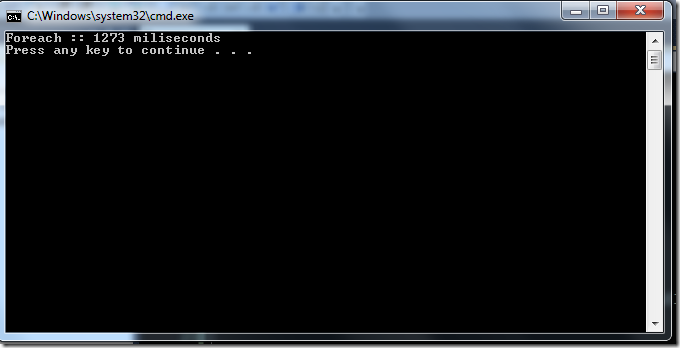

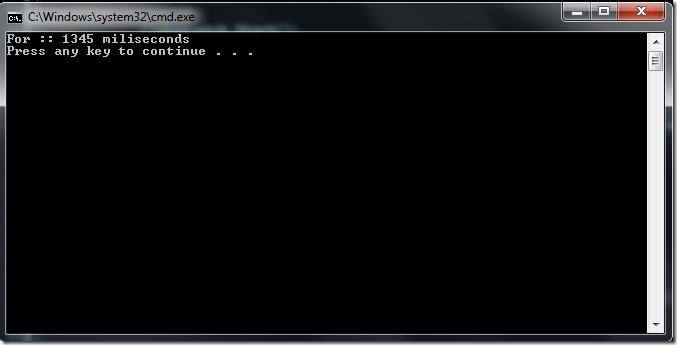

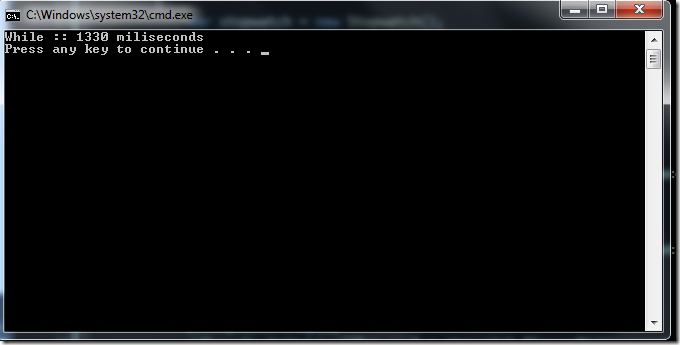

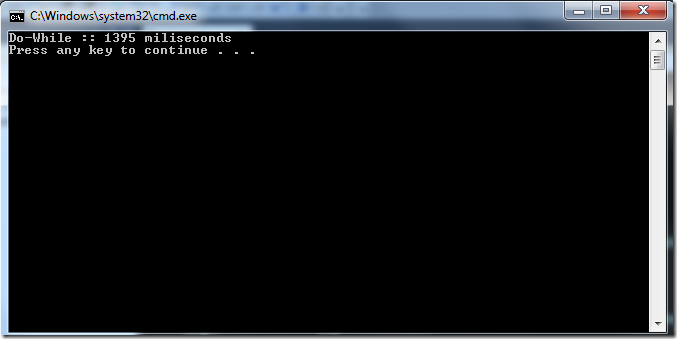

Surprising results: they’re all about the same.

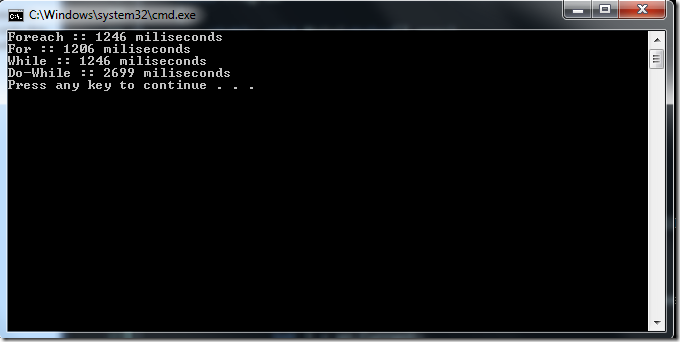

All except for that do-while… anyone wanna tell me why? My guess is that GC took over at some point inside the stopwatch execution. Let’s run it again.

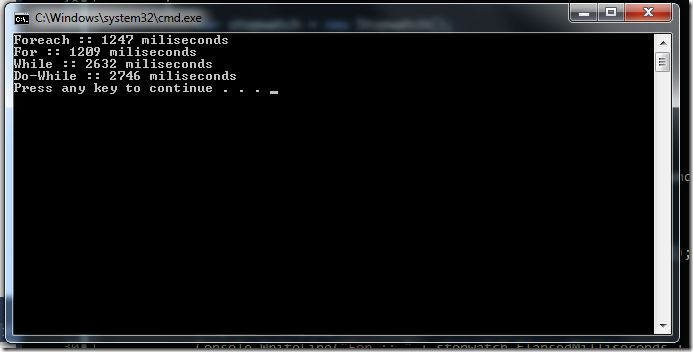

Hmmmmmmmmmm interesting! While and do-while are actually SIGNIFICANTLY SLOWER than for and foreach…. what an interesting turn of events. Let’s run them individually now.

All are around the same time, interestingly. Honestly, I think this is a testament to the great work the C# compiler guys do. Here’s the deal. At the end of the day, the body of any looping construct is a label generated by the compiler and then interpreted by the CLR. These guys shouldn’t have completely different running times.

Conclusion

This post started off as a complete rant about an incorrect test and ended with a proof that there’s a lot more going on in the test design than the original author was fooled into thinking. Let me just say that this post isn’t personal. I don’t even know the original author. I just happened on his post and about flew out of my chair. Just think about how this sounds: different looping constructs have different runtime behavior. This seems completely rediculous to me. If this were true, the C# compiler writers would certainly have converted all loops to the one that performed better… it wouldn’t be hard to do at all. However, this isn’t the case since all loops are turned into labels in IL.

So the moral of the story is that you can keep writing your for loops and foreach loops, there’s no performance gain either way.

UPDATE: The author changed the title from "Try to Avoid Foreach/For loops" to "An observation on .NET loops". I copied and pasted the original title for my blog post... so that's where the title comes from :)