Discover how to use machine learning for software estimation

How complex is a given software development task?

Let’s find out how to use machine learning for software estimation

In this work, we will present some ideas on how to build a smart component that is able to predict the complexity of a software development task. In particular, we will try to automate the process of sizing a task based on the information that is provided as part of its title and description and also leveraging the historical data of previous estimations.

In agile development, this technique is known as story point estimation, and it differs from classic estimation techniques that predict hours because the goal is not to estimate how long a task will take, but

Typically, agile teams run this estimation process to decide which tasks they can commit to completing in the next sprint, generally a period of 2 weeks. From the previous 2 or 3 sprints, they know the average number of points they are able to complete, and they take that average as the threshold for the next sprint. Then, during the estimation process (generally through a fun activity like planning poker), each member of the team gives a number of points that

The team keeps a set of “base stories”: well-known tasks that are already labeled with a complexity the team has agreed on and that serve as a baseline for comparison. After each sprint, new tasks can be added to this list. Over time, the list accumulates examples of tasks with different complexities that the team can use to compare and estimate new tasks. Teams generally reach a high level of accuracy after some time, because each sprint adds more estimations and more accumulated experience to draw on.

Generally, the mental process that each team member goes through when estimating a new task is:

- Based on his/her previous experience, he/she looks for similar tasks that he/she has done in the past.

- He/she gives the new task the same number of points that those similar tasks were assigned.

- If there isn’t a similar task, he/she starts an ordered comparison from the least to the most complex tasks. The reasoning is something like this: “Is this task more complex than these ones?” If so, he/she moves on through the set of well-known base tasks in order of increasing complexity. This process is repeated until the new task falls into one of the complexity categories. By convention, if a new task looks more complex than size X but less complex than size Y (Y being the next size in order of complexity after X), the size assigned to the task will be Y.
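The steps above can be sketched in a few lines of Python. This is only an illustration of the reasoning, not a real estimator: the size scale `SIZES`, the `find_similar` lookup and the `is_more_complex` comparison are hypothetical stand-ins for the judgment a team member applies.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical ordered scale of sizes, from least to most complex.
SIZES = [1, 2, 3, 5, 8, 13]


def estimate(
    new_task: str,
    base_tasks: Dict[int, List[str]],
    find_similar: Callable[[str], Optional[int]],
    is_more_complex: Callable[[str, str], bool],
) -> int:
    """Mimic the mental process: reuse a similar task's points, else compare upward."""
    # Steps 1 and 2: if a similar past task exists, reuse its points.
    similar_points = find_similar(new_task)
    if similar_points is not None:
        return similar_points

    # Step 3: walk the base tasks in order of increasing complexity.
    for size in SIZES:
        references = base_tasks.get(size, [])
        # Sizes without reference tasks are skipped (all([]) is True).
        if not all(is_more_complex(new_task, ref) for ref in references):
            # More complex than the previous size X, but not than this one: assign Y.
            return size

    # More complex than every base task: fall back to the largest size.
    return SIZES[-1]
```

For example, with a toy comparison such as `lambda a, b: len(a) > len(b)`, a task that is "larger" than every size-3 reference but not larger than the size-5 reference is assigned 5.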

If we look at this process, we can find lots of similarities

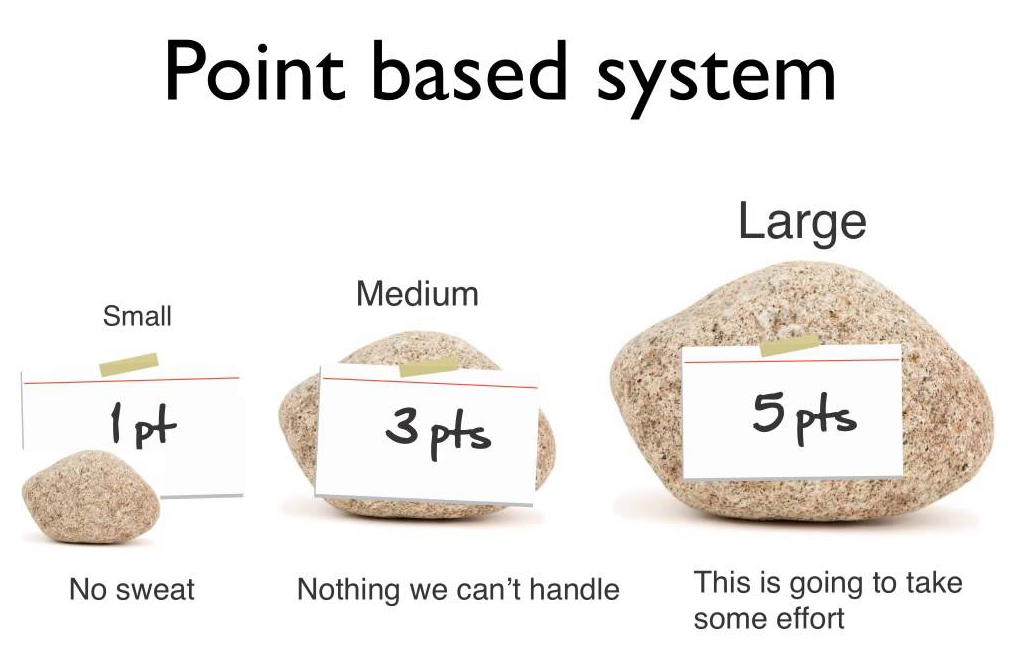

In the following, we present a machine learning approach to predict the complexity of a new task, based on the historical data of previously estimated tasks. We will use Facebook's fastText tool to learn text vector representations (word and sentence embeddings) that are implicitly used as input for a text classifier, which assigns a task to one of three categories: easy, medium and complex. Note that we are changing things a bit, going from a point-based estimation to a category-based estimation.
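As a rough sketch of what this could look like in code, the snippet below turns historical tasks into fastText's supervised training format (one example per line, prefixed with `__label__<category>`) and trains a classifier with the `fasttext` Python package. The point thresholds in `points_to_category`, the helper names, and the hyperparameters are assumptions made for illustration, not values taken from this work.

```python
import re
from typing import Iterable, Tuple


def points_to_category(points: int) -> str:
    """Map story points to a category. Thresholds are an assumption."""
    if points <= 2:
        return "easy"
    if points <= 5:
        return "medium"
    return "complex"


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so each example fits on one line."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def to_fasttext_line(title: str, description: str, points: int) -> str:
    """Build one training line: '__label__<category> <title and description>'."""
    label = points_to_category(points)
    return f"__label__{label} {normalize(title + ' ' + description)}"


def write_training_file(tasks: Iterable[Tuple[str, str, int]], path: str) -> None:
    """Write (title, description, points) tuples as a fastText training file."""
    with open(path, "w", encoding="utf-8") as f:
        for title, description, points in tasks:
            f.write(to_fasttext_line(title, description, points) + "\n")


def train_and_predict(train_path: str, new_task_text: str) -> Tuple[str, float]:
    """Train a supervised fastText model and classify one new task."""
    import fasttext  # third-party package: pip install fasttext

    # Hyperparameters are illustrative, not tuned.
    model = fasttext.train_supervised(input=train_path, epoch=25, wordNgrams=2)
    labels, probabilities = model.predict(normalize(new_task_text))
    return labels[0].replace("__label__", ""), float(probabilities[0])
```

The embeddings are never handled explicitly here: fastText learns them internally while training the classifier, which is why they are only implicitly used as input.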