Azure Functions to make audit queue and auditors happy

Using NServiceBus on Azure gives you the best of two worlds: a robust and reliable messaging framework on top of excellent Azure services. Azure services, like those of any other cloud provider, have strict capacity limits and quotas. For the Azure transports NServiceBus uses, those are usually the maximum allowed throughput, the total number of messages, the number of concurrent connections, etc. With Azure Storage Queues there’s an additional constraint that, while not significant on its own, does carry weight in a system: the maximum message TTL is only seven days. Yes, yes, you’ve heard right. Seven days only. Almost as if someone on the storage team took the saying “a happy queue is an empty queue” and implemented the maximum message TTL using that as a requirement. While it’s OK to have such a short TTL for a message that is intended to be processed, for messages that have already been processed and need to be stored it could be an issue.

NServiceBus has a feature to audit any successfully processed message. For some projects audits are a must, and not having them is out of the question. The challenge is keeping these audits in the audit queue created by NServiceBus: after seven days those messages are purged by the Azure Storage service. Ironically, this is the same service that can keep up to 500TB of blobs for as long as you need them, supporting various redundancy levels such as LRS, ZRS, and GRS. With LRS and ZRS there are three copies of the data. With GRS there are six copies of the data, and the data is replicated across multiple data centers. Heaven for audits.

If you’re not using the Particular Platform, or ServicePulse specifically, you will have to build some mechanism to move your audit messages into storage of some kind and keep them for whatever retention period is required. Building such an ETL service is not difficult, but it is an investment that requires development, deployment, and maintenance. Ideally, it should be automated and scheduled. I’ll let your imagination complete the rest. Though for a cloud-based solution, “when in Rome, do as the Romans do”.

One of the quietly revolutionary services in the Azure ecosystem is Azure Functions. While it sounds very simple and not as exciting as microservices with Service Fabric or containerization with Docker, it has managed to coin a buzzword of its own (serverless computing) and has demonstrated that its uses can be quite varied. To a certain extent, a function could be labeled a “nano-service”. But enough with this, back to audits.

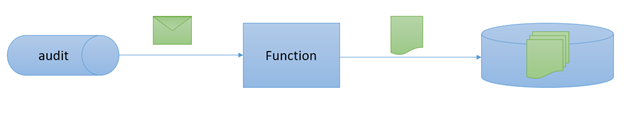

Azure Functions supports various triggers and bindings. Among those, you’ll find Storage Queues and Storage Blobs. Combined, they can help build the following simple ETL:

Azure Functions supports two types of bindings: declarative and imperative. Let’s focus on the declarative one first.

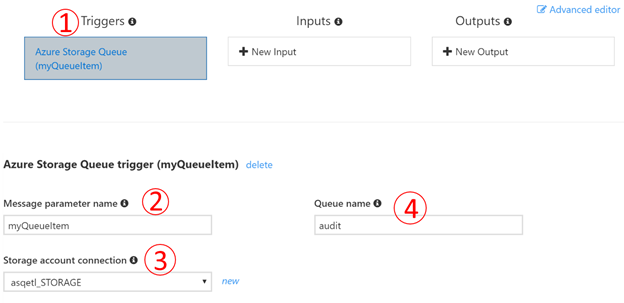

A declarative binding lets you specify a binding to a queue or an HTTP request and have it converted into an object that a function can consume. Using such a binding with an Azure Storage queue lets you declaratively bind an incoming message to a variable in the function’s parameter list rather than working with a raw CloudQueueMessage. It also gives access to some of the standard CloudQueueMessage attributes such as Id, DequeueCount, etc. Configuring a trigger using the Azure Functions UI is incredibly easy.

- Create a new function that is triggered by a Storage queue

- Specify function parameter name that will be used in code (myQueueItem)

- Specify storage account to be used (settings key that represents storage account connection string)

- Specify queue to be monitored for messages
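For reference, the portal stores these choices in the function’s function.json file. A minimal sketch of what the steps above might produce is shown below. The queue name (audit) and the settings key (asqetl_STORAGE) are assumptions borrowed from later in this post; substitute your own values.

```json
{
  "bindings": [
    {
      "name": "myQueueItem",
      "type": "queueTrigger",
      "direction": "in",
      "queueName": "audit",
      "connection": "asqetl_STORAGE"
    }
  ],
  "disabled": false
}
```

Here name is the parameter name used in code, and connection is the settings key that holds the storage account connection string, not the connection string itself.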

Once the function is created, you’ll be able to replace its signature with an asynchronous version that looks like the following:

    public static async Task Run(string myQueueItem, TraceWriter log)

That’s it for the input. This allows the function to be triggered whenever a new message is found on the queue and to receive its content as a string parameter. Additionally, we can add declarative bindings for the standard properties. For this sample, I’ll add the Id of the ASQ message.

    public static async Task Run(string myQueueItem, string id, TraceWriter log)

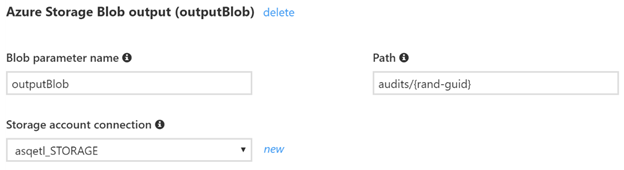

The objective is to turn the message into a blob. This requires persisting the content to the storage account. A simple solution would be to specify the output declaratively by selecting Azure Storage Blob as the output type and using a path with the {rand-guid} template, which generates a random GUID.
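As a rough sketch, the declarative output described above might correspond to an additional entry like this in the bindings array of function.json. The binding name outputBlob is hypothetical, and the settings key asqetl_STORAGE is assumed to match the one used for the storage account in this post.

```json
{
  "name": "outputBlob",
  "type": "blob",
  "direction": "out",
  "path": "audits/{rand-guid}",
  "connection": "asqetl_STORAGE"
}
```

The first segment of path is the container name; {rand-guid} is replaced with a new GUID on each invocation.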

Using this approach, we’ll have all messages stored in the “audits” container with a random GUID as the file name. To make the audits a bit friendlier, I’d like to do the following:

- Partition audit messages based on the date (year-month-day)

- Partition audits based on the endpoint that processed the messages using NServiceBus audit information from within the message itself

- Store each audit message as a JSON file whose filename is the original ASQ transport message ID

Let’s see the code and analyze it step by step.

    #r "Newtonsoft.Json"

    using System;
    using System.Text;
    using System.IO;
    using Newtonsoft.Json;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Host.Bindings.Runtime;

    // The UTF-8 byte order mark as a string, used to detect and strip a leading BOM.
    static readonly string byteOrderMarkUtf8 = Encoding.UTF8.GetString(Encoding.UTF8.GetPreamble());

    public static async Task Run(string myQueueItem, string id, Binder binder, TraceWriter log)
    {
        log.Info("C# Queue trigger function triggered");
        log.Info($"Original ASQ message ID: {id}");

        // Ordinal comparison: a culture-sensitive StartsWith can ignore the BOM character.
        var value = myQueueItem.StartsWith(byteOrderMarkUtf8, StringComparison.Ordinal)
            ? myQueueItem.Remove(0, byteOrderMarkUtf8.Length)
            : myQueueItem;

        // Read the processing endpoint from the NServiceBus headers in the wrapper.
        var obj = JsonConvert.DeserializeObject<MessageWrapper>(value);
        var endpointName = obj.Headers["NServiceBus.ProcessingEndpoint"];

        // Imperative binding: the blob path and storage account are decided at run time.
        var attributes = new Attribute[]
        {
            new BlobAttribute($"audits/{DateTime.UtcNow.ToString("yyyy-MM-dd")}/{endpointName}/{id}.json"),
            new StorageAccountAttribute("asqetl_STORAGE")
        };

        using (var writer = await binder.BindAsync<TextWriter>(attributes).ConfigureAwait(false))
        {
            // Write the BOM-stripped content so the blob contains raw JSON.
            writer.Write(value);
        }

        log.Info("Done");
    }

    // Envelope used by the NServiceBus ASQ transport to carry headers and body.
    public class MessageWrapper
    {
        public string IdForCorrelation { get; set; }
        public string Id { get; set; }
        public int MessageIntent { get; set; }
        public string ReplyToAddress { get; set; }
        public string TimeToBeReceived { get; set; }
        public Dictionary<string, string> Headers { get; set; }
        public string Body { get; set; }
        public string CorrelationId { get; set; }
        public bool Recoverable { get; set; }
    }

- I’ve modified the signature to inject a Binder into the method. Binder allows imperative bindings to be performed at run time; in this case, it is used to specify the output blob.

- The NServiceBus ASQ transport encodes messages with a BOM (Byte Order Mark). The byteOrderMarkUtf8 variable is used to strip it out so the message is persisted as raw JSON.

- The MessageWrapper class represents the message wrapper used by the NServiceBus ASQ transport. Since native Storage Queues messages do not have headers, MessageWrapper contains both the headers and the payload. The "NServiceBus.ProcessingEndpoint" header tells us at which endpoint a given message was successfully processed.

- Once we have all the prerequisites, the “black magic” can start. This is where Binder is used to tell the underlying WebJobs SDK where the blob should be created. To provide these hints, we instantiate two attributes, BlobAttribute and StorageAccountAttribute, and pass them to the Binder.BindAsync method. The first, BlobAttribute, specifies the path of the blob to use. The second, StorageAccountAttribute, determines the settings key used to retrieve the storage connection string. Note that without StorageAccountAttribute, the default settings key (AzureWebJobsStorage) is used. That’s the storage account used to create the function in the portal.

- Passing the attributes to the BindAsync method to get a writer and writing the message content into the blob is the final step. After that, the content of the queue message is stored as a blob with the desired name at the path “audits/year-month-day/endpoint/original-asq-message-id.json”.

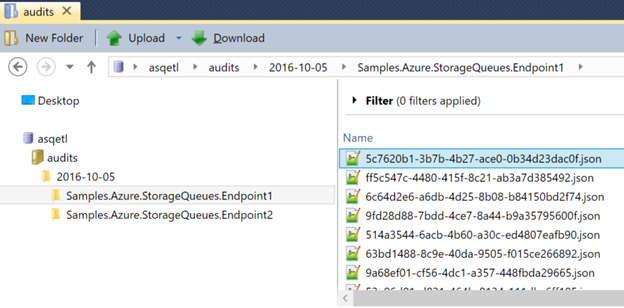

To validate that the function is working, one of the NServiceBus ASQ transport samples can be used. Configure the sample to use the same storage account and execute it. Endpoint1 and Endpoint2 will process messages but will not emit any audits. To enable audits, the following configuration change is required in Program.cs for each endpoint:

    endpointConfiguration.AuditProcessedMessagesTo("audit");

Once auditing is enabled, blob storage will start filling up with any new audit messages emitted by the endpoints.

The function will keep running from now on and convert audit messages into blobs. Endpoints can be added or removed; the function will adapt and emit files in the appropriate location. In a few lines of code, we made both the audit queue and the auditors happy.

Update: the Storage team has quietly enabled unlimited TTL.