Website performance – know what’s coming

If you live in Australia (and perhaps even outside of Oz), you will have seen a lot of attention on the Australian Bureau of Statistics (ABS) over the Census 2016 website and its failure to handle the peak usage times it was supposedly designed for. It was also reported that a Denial of Service attack was launched against the site in order to cause an outage, which obviously worked. One can argue where the fault lay, and no doubt there are blame games being played out right now.

IBM was tasked with the delivery of the website and used a company called "RevolutionIT" to perform load testing. It is my opinion that while RevolutionIT performed the load testing, IBM is the one who should wear all the blame. Load testing and performance testing simply provide metrics for a system under given conditions. IBM would need to analyse those metrics to ensure that each aspect of the system is performing as expected. This is not a one-off task either. Ideally the tests should be run repeatedly to ensure that changes being applied are having the desired effect.

Typically, a load test run would be executed against a single instance of the website to ascertain the baseline performance for a single unit of infrastructure, with a "close to production version" of the data store it was using.

Once this single unit of measure is found, it is a relatively easy exercise to extrapolate how much infrastructure is required to handle a given load. More testing can then be performed with more machines to validate this claim. This is a very simplistic view of the situation and there are far more variables to consider, but in essence: baseline your performance, then iterate upon that.
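As a rough illustration of that extrapolation, here is a minimal sketch. The function name, the headroom figure and the scaling efficiency factor are all my own assumptions for the example, not numbers from the Census project, and real systems rarely scale this linearly.

```python
import math


def instances_needed(peak_rps: float,
                     baseline_rps_per_instance: float,
                     headroom: float = 0.5,
                     scaling_efficiency: float = 0.8) -> int:
    """Estimate how many instances are needed for an expected peak.

    peak_rps: expected peak requests per second (an estimate).
    baseline_rps_per_instance: throughput measured in the single-instance load test.
    headroom: extra capacity on top of the estimate (0.5 = 50% more), assumed.
    scaling_efficiency: fraction of baseline throughput each instance still
        delivers once many instances share a data store (assumed, 0 < x <= 1).
    """
    if peak_rps < 0 or baseline_rps_per_instance <= 0:
        raise ValueError("load and baseline throughput must be positive")
    if not 0 < scaling_efficiency <= 1:
        raise ValueError("scaling_efficiency must be in (0, 1]")

    target_rps = peak_rps * (1 + headroom)
    effective_rps = baseline_rps_per_instance * scaling_efficiency
    return math.ceil(target_rps / effective_rps)


# Hypothetical numbers: one instance handled 100 req/s in the baseline test
# and we expect a peak of 1000 req/s.
print(instances_needed(1000, 100))
```

The result is only a starting point. The follow-up load test with that many machines is what tells you whether the estimate holds.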

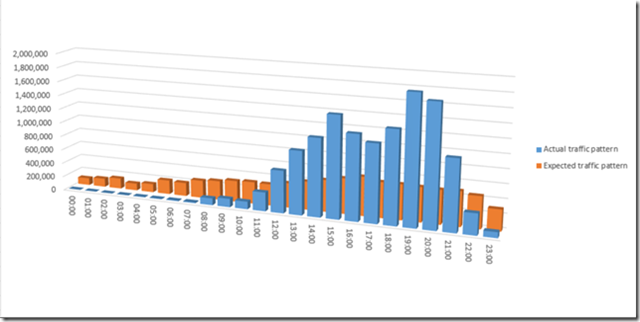

Infrastructure is just one part of that picture though. A very important one of course, but not the only one. Care must be taken to ensure the application is designed and architected in such a way to make it resilient to failure, in addition to performing well. Take a look at the graph below.

Note that this is not actual traffic on census night but rather my interpretation of what may have been a factor. The orange bars represent what traffic was expected during that day, with the blue representing what traffic actually occurred on the day. Again, purely fictional in terms of actual values but not too far from what probably occurred.

At the times of day most convenient for the Australian public, people tried to access the site and fill in the census form.

A naïve expectation is to think that people will be nice net citizens and plod along, happy to evenly distribute themselves across the day, with mild peaks. A more realistic expectation is akin to driving in heavy traffic. People don’t want to go slower and play nice, they want to go faster. See a gap in the traffic? Zoom in, cut others off and speed down the road to your advantage. This is the same as hitting F5 in your browser in an attempt to get the site to load. Going too slowly? Hit F5 again and again. Your problem is now even worse than estimated, as each person can easily triple the number of requests they make.
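To put a number on that refresh effect, here is a tiny sketch. The function and the figures are purely illustrative assumptions of mine, in the same spirit as the fictional graph values.

```python
def effective_requests(users: int,
                       requests_per_user: int,
                       refreshes_per_request: int) -> int:
    """Requests the servers actually see when impatient users hit F5.

    Every refresh re-issues the original request, so a user who refreshes
    twice sends three requests in total for the one page they wanted.
    """
    if min(users, requests_per_user, refreshes_per_request) < 0:
        raise ValueError("inputs must be non-negative")
    return users * requests_per_user * (1 + refreshes_per_request)


# Hypothetical: 1000 people each want one page, and each hits F5 twice.
planned = effective_requests(1000, 1, 0)
actual = effective_requests(1000, 1, 2)
print(planned, actual)
```

Note that the slower the site gets, the more people refresh, so the load grows at exactly the moment the system can least afford it.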

To avoid these situations, you need to have a good understanding of your customers' usage habits. Know or learn the typical habits of your customers to ensure you get a clear view of how your system will be used. Will it be used first thing in the morning while people are drinking their first coffee? Maybe there will be multiple peak times during the morning, lunch and the evening? Perhaps the system will remain relatively unused on most days except Friday to Sunday?

In a performance testing scenario, especially in a situation similar to Census where you know in advance you are going to get a lot of sustained traffic at particular times, you need to plan for the worst. If you have some degree of metrics around what the load might be, ensure your systems can handle far more than expected. At the very least, ensure that should you encounter unexpected or extremely heavy traffic, your systems can scale, and can fail with grace. This means that if your system cannot cope, it can at least display some form of information to the user, in addition to resuming service once the system can cope with the load.
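One way to "fail with grace" is to shed load: cap the number of requests being worked on at once and give everyone else a quick, honest answer instead of a timeout. The sketch below is framework-agnostic and entirely my own illustration. The `LoadShedder` class, the limit and the message are assumptions, not anything the Census site used.

```python
import threading
from typing import Any, Callable, Tuple

BUSY_MESSAGE = "The site is very busy right now. Please try again in a few minutes."


class LoadShedder:
    """Reject excess requests quickly rather than letting everything slow down."""

    def __init__(self, max_in_flight: int) -> None:
        # One slot per request we are willing to process concurrently.
        self._slots = threading.BoundedSemaphore(max_in_flight)

    def handle(self, do_work: Callable[[], Any]) -> Tuple[int, Any]:
        # Non-blocking acquire: if no slot is free, answer immediately
        # with a 503 and a friendly message.
        if not self._slots.acquire(blocking=False):
            return 503, BUSY_MESSAGE
        try:
            return 200, do_work()
        finally:
            # Releasing the slot is what lets service resume once load drops.
            self._slots.release()


shedder = LoadShedder(max_in_flight=1)
print(shedder.handle(lambda: "census form"))
```

The limit itself should come from your load test results: set it at, or a little below, the point where response times started to degrade.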

Again, infrastructure plays an important part here, but this can all be for naught if you do not design and architect for scale. One positive to come out of the census issues is that this kind of design will hopefully be thought about the next time such a system is made available. With the aftermath still quite raw, now is a great time to raise the performance needs of the systems that you manage or design with those who can do something about them.

Sources:

http://www.crn.com.au/news/revolution-it-census-fail-was-not-our-fault-433913

http://risky.biz/censusfailupdate

http://www.abs.gov.au/AUSSTATS/abs@.nsf/mediareleasesbyReleaseDate/5239447C98B47FD0CA25800B00191B1A?OpenDocument

http://www.lifehacker.com.au/2016/08/ibm-and-the-abs-census-let-the-blame-games-begin/