When the Google beats on your SignalR

Around the end of April, I put v11 of POP Forums into production on CoasterBuzz. Probably the biggest feature of that release was all of the new real-time stuff in the forum, with new posts appearing before your eyes and in the topic lists and such. This was all enabled in part by SignalR, the framework that allows for bidirectional communication between the browser and the server over an open connection (or simulated open connection, depending on the browser).

It didn't take long before I noticed some odd exceptions in the error logs around the SignalR endpoints. They were all coming from Googlebot, which apparently scans JavaScript and looks for ways to reach content that's ordinarily loaded dynamically into your site's pages. Yay for Google trying to find content on your site, but in this case, there are two big problems.

The first problem is that Googlebot appears to be somewhat stupid. While it identifies the endpoint (the actual URL that SignalR uses), it seems to have no regard for what data has to be posted to it. That's where the exceptions come from: SignalR doesn't understand the request.

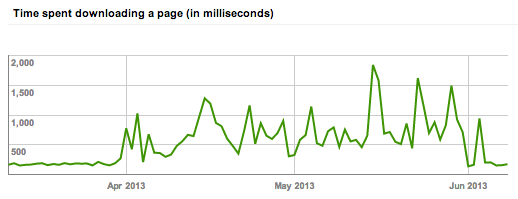

The second problem is that Googlebot understandably expects to get a response and move along. But SignalR likes to keep an open connection so that the client and server bits can talk to each other. That's kind of the whole point. I didn't catch this issue until I used Google Webmaster Tools to see what my load times were looking like. You can very plainly see where I started using SignalR and when I fixed the problem. Googlebot was hanging on to those requests for as long as two seconds.

The reason I looked was that Google was being relentless at one point, banging on the thing hard enough to generate hundreds of exceptions every hour. The fix was easy enough: just put a few lines in your robots.txt file that tell the Google to back off:

```
User-agent: *
Disallow: /signalr/
```
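If you want to sanity-check the robots.txt rules above before deploying them, Python's standard-library `urllib.robotparser` can evaluate them locally. This is a minimal sketch, assuming SignalR is mapped at its default `/signalr` route; the subpaths `/signalr/negotiate` and `/signalr/connect` are used as examples of SignalR transport URLs, and `example.com` and `/forums/` are hypothetical placeholders. Python's parser is not Google's, so treat this as a quick check rather than proof of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt rules from the post, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /signalr/
"""


def is_allowed(path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules let user_agent crawl the given path."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    # example.com is a hypothetical host; only the path is matched against the rules.
    return parser.can_fetch(user_agent, "https://example.com" + path)


if __name__ == "__main__":
    # Hypothetical paths: two SignalR transport URLs and one ordinary forum page.
    for path in ["/signalr/negotiate", "/signalr/connect", "/forums/"]:
        print(path, "allowed" if is_allowed(path) else "blocked")
```

Because the rule is a simple prefix match, everything under `/signalr/` is blocked while the rest of the site stays crawlable.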

The more you know, the smarter you grow.