Contents tagged with General Software Development

-

Nothin but .NET course - Tips and Tricks - Day 1

I'm currently popping in and out of Jean-Paul Boodhoo's Nothin but .NET course for the week and having a blast. We brought JP in-house to the company I'm at right now for his .NET training course, which is arguably the best .NET course out there. Period.

I don't claim to be an expert (at least I hope I've never claimed that) and I'm always looking for ways to improve myself and add new things to my gray matter that I can hopefully recall and use later. Call them best practices, call them tips and tricks, call them rules to live by. Over the course of the week I'll be blogging each day about what's going on so you can live vicariously through me (but you really need to go on the course to soak in all of JP and his .NET goodness).

So here we go with the first round of tips:

File -> New -> Visual Studio Solution

This is a cool thing that I wasn't aware of (and it puts me one step closer to not using the mouse, which is a good thing). First, create a basic empty Visual Studio .sln file. This is our template for new solutions. Windows looks for the file named in the FileName value below in its ShellNew template folder (typically C:\Windows\ShellNew), so save the empty solution there as Visual Studio Solution.sln. Then create a new registry file (VisualStudioHack.reg or whatever you want to call it) with these contents:

```
Windows Registry Editor Version 5.00

[HKEY_CLASSES_ROOT\.sln\ShellNew]
"FileName"="Visual Studio Solution.sln"
```

Double-click the .reg file and merge the changes into your registry. The result is that you'll now have a new option in your File -> New menu called "Microsoft Visual Studio Solution". This is based on the template (the empty .sln file) that you provide, so you can put whatever you want in there, but it's best to just snag the empty Visual Studio Solution template that comes with Visual Studio. Very handy when you just need a new solution to start with and don't want to start up Visual Studio and navigate through all the menus to do this.

MbUnit

MbUnit rocks over NUnit. This was my first exposure to the row test, and while you can abuse the feature, it really helps cut down on writing a ton of tests or (worse) one test with a lot of asserts.

In NUnit let's say I have a test like this:

```csharp
[Test]
public void ShouldBeAbleToAddTwoPositiveNumbers()
{
    int firstNumber = 2;
    int secondNumber = 2;
    Calculator calculator = new Calculator();
    Assert.AreEqual(firstNumber + secondNumber, calculator.Add(firstNumber, secondNumber));
}
```

And I need to test boundary conditions (a typical thing). So I've got two options. First option is to write one test per condition. The second is to write a single test with multiple asserts. Neither is really appealing. Having lots of little tests is nice, but a bit of a bear to maintain. Having a single test with lots of asserts means I have to re-organize my test (hard coding values) and do something like this in order to tell which test failed:

```csharp
[Test]
public void ShouldBeAbleToAddTwoPositiveNumbers()
{
    Calculator calculator = new Calculator();
    Assert.AreEqual(2 + 2, calculator.Add(2, 2), "could not add positive numbers");
    Assert.AreEqual(10 + -10, calculator.Add(10, -10), "could not add negative number");
    Assert.AreEqual(123432 + 374234, calculator.Add(123432, 374234), "could not add large numbers");
}
```

Or something like that, but you get the idea. Tests become ugly looking and they feel oogly to maintain. Enter MbUnit and the RowTest. The test above becomes a parameterized test that looks like this:

```csharp
[RowTest]
public void ShouldBeAbleToAddTwoPositiveNumbers(int firstNumber, int secondNumber)
{
    Calculator calculator = new Calculator();
    Assert.AreEqual(firstNumber + secondNumber, calculator.Add(firstNumber, secondNumber));
}
```

Now I can simply add more Row attributes, passing in the various boundary conditions I want to check like so:

```csharp
[Row(2, 2)]
[Row(10, -10)]
[Row(123432, 374234)]
[RowTest]
public void ShouldBeAbleToAddTwoPositiveNumbers(int firstNumber, int secondNumber)
{
    Calculator calculator = new Calculator();
    Assert.AreEqual(firstNumber + secondNumber, calculator.Add(firstNumber, secondNumber));
}
```

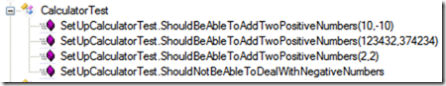

That's cool (and almost enough to convince me to switch), but what's uber cool about this? In the MbUnit GUI runner, each Row shows up as a separate test, so if a Row fails on me, I know exactly which one it was. It's one failing test out of a set rather than a single test with one failing line out of many asserts.

As with any tool, you can abuse this, so don't go overboard. I think for boundary conditions, and anywhere your tests begin to look crazy, this is an awesome option. I haven't even scratched the surface of MbUnit and its database integration (I know, databases in unit tests?), but at some point you have to do integration testing. What better way to do it than with unit tests? More on that in another post.
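To make the "separate tests per Row" idea concrete, here is a rough, framework-free sketch of what a row-test runner does for you: run the same test body once per row of data and record a result per row, so one bad row doesn't hide the others. This is not MbUnit's implementation; `RowRunner`, its result strings, and the tiny `Calculator` are hypothetical stand-ins for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical Calculator matching the one used in the tests above.
public class Calculator
{
    public int Add(int first, int second)
    {
        return first + second;
    }
}

// Hypothetical runner: executes one test body per row and reports each row on its own.
public static class RowRunner
{
    public static List<string> Run(int[][] rows, Action<int, int> testBody)
    {
        List<string> results = new List<string>();
        foreach (int[] row in rows)
        {
            string label = "Row(" + row[0] + ", " + row[1] + ")";
            try
            {
                testBody(row[0], row[1]);
                results.Add(label + ": passed");
            }
            catch (Exception ex)
            {
                // A failure is recorded against this row only; the loop keeps going.
                results.Add(label + ": FAILED - " + ex.Message);
            }
        }
        return results;
    }
}
```

The point of the design is the per-row try/catch: with one test full of asserts, the first failure stops everything after it, whereas here every row still gets its own pass/fail line.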

Subject Under Test

My first exposure to this term was Gerard Meszaros' excellent book xUnit Test Patterns, where he uses it throughout. It makes sense: any unit test is going to be testing some subject, so we call it the Subject Under Test. One convention JP uses in his tests is this:

```csharp
private ICalculator calculator;

[SetUp]
public void SetUpCalculatorTest()
{
    calculator = CreateSUT();
}

private ICalculator CreateSUT()
{
    return new Calculator();
}
```

So basically every test fixture has a method called CreateSUT() (where appropriate) that creates an object of whatever type you need and returns it. I'm not sure this replaces the ObjectMother pattern that I've been using (and CreateSUT is really more of a naming convention than a pattern), but again, it's simple and easy to read. A nice little tidbit you pick up.

In doing this, my mad ReSharper skills got the best of me. Normally I would start with the CreateSUT method, which in this case returns an ICalculator by instantiating a new Calculator class. Of course there are no classes or interfaces by these names yet, so there are two options here. One is to write your test and worry about the creation later; the other is to quickly create the implementation. At some point you're going to have to create it anyway in order to compile, but I like to leave that until the last step.

Under earlier 2.x versions of ReSharper you could write your test line by typing CreateSUT() and then pressing CTRL+ALT+V (Introduce Variable). However, since ReSharper 2.5 (and still in 3.x) you can't do this: ReSharper can't create the ICalculator type in memory to walk through its method table (which is what would give you IntelliSense). So the simple thing is to write the CreateSUT() method and just whack ALT+ENTER a few times to create the class and interface.

Thread safe initialization using a delegate

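I have to say that I had never looked into how to initialize event handlers in a thread-safe way. I've never had to do it in the past (I don't work with events and delegates a lot), but this is a great tip. If you need to initialize an event handler in a thread-safe way, here you go. Because the delegate starts out as an empty anonymous method it is never null, so you can raise it without the usual null check, which can race with another thread unsubscribing the last handler: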

```csharp
private EventHandler<CustomTimerElapsedEventArgs> subscribers = delegate { };
```

It's the simple things in life that give me a warm and fuzzy each day.
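Here's a slightly fuller sketch of the empty-delegate trick in context. The `CustomTimer` class, its `Elapsed` event, `RaiseElapsed` method, and the body of `CustomTimerElapsedEventArgs` are all hypothetical (the post only names the args type). It uses a field-like event rather than a private delegate field so the compiler-generated add/remove stay synchronized.

```csharp
using System;

// Hypothetical args type: only the name CustomTimerElapsedEventArgs comes from the post.
public class CustomTimerElapsedEventArgs : EventArgs
{
    public readonly DateTime SignalTime;

    public CustomTimerElapsedEventArgs(DateTime signalTime)
    {
        SignalTime = signalTime;
    }
}

// Hypothetical timer wrapper showing the empty-delegate initialization.
public class CustomTimer
{
    // Seeded with a no-op anonymous method, so the delegate is never null.
    public event EventHandler<CustomTimerElapsedEventArgs> Elapsed = delegate { };

    public void RaiseElapsed(DateTime signalTime)
    {
        // No "if (Elapsed != null)" check: even after every real subscriber
        // unsubscribes, the no-op handler remains in the invocation list,
        // so there's no window where another thread can leave it null.
        Elapsed(this, new CustomTimerElapsedEventArgs(signalTime));
    }
}
```

The cost is one extra empty method call per raise, which is usually negligible next to not having to think about the null-check race at all.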

Event Aggregators and Declarative Events

We spent the better part of the day looking at events, delegates, aggregators and whatnot. This is apparently something he's just added to the course, and hey, that's what Agile is about: adapting to change.

Anyway, as we dug into it I realized how little I knew about events and how dependent I was on the framework to help me with those object.EventName += ... snippets I would always write. Oh, how wrong that was as we got into loosely coupled event handlers (all done without dynamic proxies, which come later). It's pretty slick, as you can completely decouple your handlers, and this is a good thing. For example, here's a simple test that just creates an aggregator, registers a subscriber, and checks to see if the event fired.

First the test:

```csharp
[Test]
public void ShouldBeAbleToRegisterASubscriberToANamedEvent()
{
    IEventAggregator aggregator = CreateSUT();
    aggregator.RegisterSubscriber<EventArgs>("SomethingHappened", SomeHandler);
    eventHandlerList["SomethingHappened"].DynamicInvoke(this, EventArgs.Empty);
    Assert.IsTrue(eventWasFired);
}
```

Here's the handler that records that the event fired:

```csharp
private void SomeHandler(object sender, EventArgs e)
{
    eventWasFired = true;
}
```

And here's part of the aggregator class which simply manages subscribers in an EventHandlerList:

```csharp
using System;
using System.ComponentModel;

public class EventAggregator : IEventAggregator
{
    private EventHandlerList allSubscribers;

    public EventAggregator(EventHandlerList allSubscribers)
    {
        this.allSubscribers = allSubscribers;
    }

    public void RegisterSubscriber<ArgType>(string eventName, EventHandler<ArgType> handler)
        where ArgType : EventArgs
    {
        allSubscribers.AddHandler(eventName, handler);
    }

    public void RaiseEvent<ArgType>(string eventName, object sender, ArgType args) where ArgType : EventArgs
    {
        EventHandler<ArgType> handler = (EventHandler<ArgType>) allSubscribers[eventName];
        // Nobody has subscribed to this event yet, so there's nothing to invoke.
        if (handler != null)
        {
            handler(sender, args);
        }
    }

    public void RegisterEventOnObject<ArgType>(string globalEventName, object source, string objectEventName)
        where ArgType : EventArgs
    {
        // Empty in this excerpt.
    }
}
```

Again, basic stuff but lets you decouple your events and handlers so they don't have intimate knowledge of each other and this is a good thing.

A very good thing.
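One caveat with backing an aggregator by an EventHandlerList is worth knowing about. As far as I can tell from the framework source, EventHandlerList matches keys by reference (`==` on `object`), not by string value. String literals in a test work because the compiler interns identical literals into one instance, but an event name built at runtime won't find the handler. The `EventHandlerListKeys` helper below is hypothetical and exists only to demonstrate that behaviour.

```csharp
using System;
using System.ComponentModel;

public static class EventHandlerListKeys
{
    // Registers a no-op handler under registrationKey, then reports whether
    // looking it up with lookupKey finds it.
    public static bool FindsHandler(object registrationKey, object lookupKey)
    {
        EventHandlerList list = new EventHandlerList();
        list.AddHandler(registrationKey, new EventHandler<EventArgs>(delegate { }));
        return list[lookupKey] != null;
    }
}
```

If event names can come from configuration or string building, either share one static readonly key object per event or swap the EventHandlerList for a `Dictionary<string, Delegate>`, which compares strings by value.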

BTW, as we extended this we sort of built CAB-like functionality in a few hours on the first day (only event publishers and subscribers, though). We just added two attribute classes to handle publishers and subscribers (rather than subscribing explicitly). It wasn't anywhere near as complete as Jeremy Miller's excellent Build your own CAB series, but nonetheless it was a simple approach to a common problem, and for my puny brain, I like simple.

It was fun and appropriate, as the team is using CAB, so the guys in the room are probably better positioned to understand CAB's EventBroker now.
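For a feel of the attribute-driven side, here's a minimal sketch of declarative subscription: a method is tagged with the event name it cares about, and the aggregator finds it via reflection instead of the subscriber calling RegisterSubscriber itself. This is not the code from the course or CAB's EventBroker API; `SubscribesToAttribute`, `SimpleEventAggregator`, and `AuditLog` are hypothetical, and the aggregator uses a string-keyed dictionary to stay self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical attribute: marks a method as a handler for a named global event.
[AttributeUsage(AttributeTargets.Method, AllowMultiple = true)]
public class SubscribesToAttribute : Attribute
{
    public readonly string EventName;

    public SubscribesToAttribute(string eventName)
    {
        EventName = eventName;
    }
}

// Hypothetical aggregator keyed by event name (strings compared by value).
public class SimpleEventAggregator
{
    private readonly Dictionary<string, EventHandler<EventArgs>> handlers =
        new Dictionary<string, EventHandler<EventArgs>>();

    public void RegisterSubscriber(string eventName, EventHandler<EventArgs> handler)
    {
        EventHandler<EventArgs> existing;
        handlers.TryGetValue(eventName, out existing);
        handlers[eventName] = existing + handler; // combining with null yields just the handler
    }

    public void RaiseEvent(string eventName, object sender, EventArgs args)
    {
        EventHandler<EventArgs> handler;
        if (handlers.TryGetValue(eventName, out handler) && handler != null)
        {
            handler(sender, args);
        }
    }

    // Scans the subscriber's methods for [SubscribesTo] and wires each one up.
    public void RegisterAnnotatedSubscribers(object subscriber)
    {
        MethodInfo[] methods = subscriber.GetType().GetMethods(
            BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic);
        foreach (MethodInfo method in methods)
        {
            foreach (SubscribesToAttribute attribute in
                     method.GetCustomAttributes(typeof(SubscribesToAttribute), true))
            {
                // Throws if the method doesn't match the (object, EventArgs) signature.
                EventHandler<EventArgs> handler = (EventHandler<EventArgs>)
                    Delegate.CreateDelegate(typeof(EventHandler<EventArgs>), subscriber, method);
                RegisterSubscriber(attribute.EventName, handler);
            }
        }
    }
}

// Hypothetical subscriber: it declares interest but never calls the aggregator itself.
public class AuditLog
{
    public int EntriesWritten;

    [SubscribesTo("SomethingHappened")]
    public void OnSomethingHappened(object sender, EventArgs e)
    {
        EntriesWritten++;
    }
}
```

The subscriber only knows an event name, and the publisher only knows the aggregator, which is the same decoupling as before with the wiring moved into metadata.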

Other Goodies and Tidbits

JP uses a nifty macro (one I think I'll abscond with and try out myself) where he types the test name in plain English, spaces and all, like so:

Should be able to respond to events from framework timer

Then he highlights the text and runs the macro which replaces all spaces with underscores:

Should_be_able_to_respond_to_events_from_framework_timer
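The macro itself is a Visual Studio macro, which isn't shown here; the text transformation it performs is simple enough to sketch in C#. `TestNameMacro.ToTestMethodName` is a hypothetical name, and collapsing runs of whitespace into a single underscore is an assumption about how the macro treats stray double spaces.

```csharp
using System.Text.RegularExpressions;

public static class TestNameMacro
{
    // Trims the selected text and replaces each run of whitespace with one underscore,
    // turning a plain-English sentence into a legal C# method name.
    public static string ToTestMethodName(string plainEnglish)
    {
        return Regex.Replace(plainEnglish.Trim(), @"\s+", "_");
    }
}
```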

I'm indifferent about underscores in method names and it's a no-no in production code, but for test code I think I can live with it. It reads well and looks good on the screen. Using a little scripting, reflection, or maybe NDepend, I could spit out a nice HTML page (reversing the underscores back to spaces) and turn my test names into complete sentences. This can be a good device to use with business users or other developers as you're trying to specify intent for the tests. I have to admit it beats the heck out of this:

ShouldBeAbleToRespondToEventsFromFrameworkTimer

Doesn't it?
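Here's a rough sketch of the reflection route to that HTML page. Everything here is hypothetical, including the `TestSpecReport` and `FrameworkTimerSpecs` names; to avoid depending on NUnit it treats any public, non-accessor method with an underscore in its name as a test, whereas a real report would look for the `[Test]` attribute instead.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Reflection;
using System.Text;

public static class TestSpecReport
{
    // Reverses the macro: underscores back to spaces.
    public static string ToSentence(string methodName)
    {
        return methodName.Replace('_', ' ');
    }

    public static List<string> DescribeFixture(Type fixtureType)
    {
        List<string> sentences = new List<string>();
        MethodInfo[] methods = fixtureType.GetMethods(
            BindingFlags.Instance | BindingFlags.Public | BindingFlags.DeclaredOnly);
        foreach (MethodInfo method in methods)
        {
            // Skip compiler-generated accessors like get_Name, which also contain underscores.
            if (!method.IsSpecialName && method.Name.Contains("_"))
            {
                sentences.Add(ToSentence(method.Name));
            }
        }
        sentences.Sort(StringComparer.Ordinal); // reflection order isn't guaranteed
        return sentences;
    }

    public static string ToHtml(Type fixtureType)
    {
        StringBuilder html = new StringBuilder();
        html.Append("<h2>").Append(WebUtility.HtmlEncode(fixtureType.Name)).Append("</h2>\n<ul>\n");
        foreach (string sentence in DescribeFixture(fixtureType))
        {
            html.Append("  <li>").Append(WebUtility.HtmlEncode(sentence)).Append("</li>\n");
        }
        html.Append("</ul>\n");
        return html.ToString();
    }
}

// Hypothetical fixture used to exercise the report.
public class FrameworkTimerSpecs
{
    public string Name { get { return "timer"; } }
    public void Should_be_able_to_respond_to_events_from_framework_timer() { }
    public void Should_stop_raising_events_once_disposed() { }
    public void HelperWithoutUnderscores() { }
}
```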

Wrap up

The first day has been a whirlwind for the team as they soak everything in and make sense of it. For me, I'm focusing on using my keyboard more and getting more ReSharper shortcuts embedded into my skull. I've now got MbUnit to plow through: I want to check out the other fixtures, see what makes it tick, and take the plunge from NUnit if it makes sense (which I think it does, as I can still use my beloved TestDriven.NET with MbUnit!).

More Nothin

JP runs this 5-day intensive course, which stretches people to think outside the box of how they typically program and delve into realms they may not have even thought about yet, about once a month. For the next little while (he's a busy dude) he's going to be travelin'. In September he'll be in London, England, in October he'll be in New York City, and other locations are booked up into the new year.

If you want to find out more details about the course itself, check out the details here and be sure to get on board with the master.

-

Efficiency vs. Effectiveness, the CAB debate continues

There have been two great posts on the CAB debate recently. Jeremy Miller had an excellent post on the brouhaha, stating that he really isn't going to build a better CAB but supports the new project we recently launched, SCSFContrib. I think Jeremy's excellent "Build your own CAB" series is good, but you need to take it in context and not look at it as "how to replace CAB" but rather "how to learn what it takes to build CAB". Chris Holmes posted a response called Tools Are Not Evil to Oren's blog entry about CAB and EJB (which was itself a response to Glenn Block's entry; yeah, you really do need a roadmap to follow this series of blog posts).

Oren's response to Chris Holmes' post got me to write this entry. In it he made a statement that bugged me:

"you require SCSF to be effective with CAB"

It looks like he has since updated the entry to say he stands corrected on that statement, but I wanted to highlight the difference between being efficient with a tool and being effective with the technology the tool supports.

Long before SCSF appeared, I was grokking CAB, as I wanted to see what it was all about and whether it was useful for my application. That took some time (as any new API does) and some concepts were alien, but after some pain and suffering I got through it. Then SCSF came along and enabled me to be more efficient with CAB, in that I no longer had to write my own controller or implement an MVP pattern myself. This could be done by running a single recipe. Even the entire solution could be started for me with a short wizard, saving me the few hours it would have taken otherwise. Did it create things I don't need? Probably. There are a lot of services there that I simply don't use, but I'm not worried about it and just ignore them (sometimes deleting them from the project afterwards).

The point is that SCSF made me more efficient in how I could leverage CAB, just like ReSharper makes me a more efficient developer when I do refactorings. Does it teach me why I need to extract an interface from a class? No, but it does it in less time than it would take manually. When I mentor people on refactoring, I teach them why we do the refactoring (using the old school manual approach, going step by step much like how the refactorings are documented in Martin Fowler's book). We talk about why we do it and what we're doing each step of the way. After doing a few this way, they're comfortable with what they're doing, and then we yank out ReSharper and accomplish 10 minutes of coding in 10 seconds and a few keystrokes. Had the person not known why they were doing the refactoring (and what it is), just right-clicking and selecting Refactor from the menu would mean nothing.

ReSharper (and other tools) make me a more efficient developer, but you still need to know the what and why behind the scenes in order to use the tools well. I compare it to race car driving. You can give someone the best car on the planet, but if they just floor it they'll burn the engine out, and any good driver worth his salt could drive circles around them in any vehicle. Same with development. I can code circles around guys who use wizards when they don't know what the wizard produces or why. Knowing what is happening behind the scenes, and the reason behind it, makes tools like ReSharper that much more valuable.

SCSF does for CAB what ReSharper does for C# in my world, and I'll take anyone who knows what they're doing over guys with a big toolbox and no clue why they're using it, any day.

-

Priority is a sequence, not a single number!

This morning I opened up an email (and associated spreadsheet) that just made me cringe. Which of course made me blog about it, so here we are. Welcome to my world.

Time and time again I get a list of stories that a team has put together for me to either review, estimate, build, filter, whatever. Time and time again I keep seeing this:

| Story | Points | Priority |
|---|---|---|
| As a user I can ... | 5 | 1 |
| As an administrator I can ... | 3 | 1 |
| As an application I can ... | 4 | 1 |

No. No. No!

Priority is not "make everything 1 so the team will do it". When you're standing in front of a task wall with dozens of tasks relating to various stories, which one do you pick? For me, I take on the tasks that are the highest priority based on what the user wants. If he wants Feature A to be first, then so be it and I grab the tasks related to Feature A.

However, I can't do this (read: will not do this) when someone sets *everything* to Priority 1. It's like asking someone to give more than 100%. You simply cannot do it. Priority is there to organize stories so the most important one gets done first. How you define "most important" is up to you, whether that's technical risk, business value, or something else that matters to you.

This is more like what priority should be:

| Story | Points | Priority |
|---|---|---|
| As a user I can ... | 5 | 1 |
| As an administrator I can ... | 3 | 2 |
| As an application I can ... | 4 | 3 |

Another thing I see is priority like this:

| Story | Points | Priority |
|---|---|---|
| As a user I can ... | 5 | High |
| As an administrator I can ... | 3 | Medium |
| As an application I can ... | 4 | High |

Another no-no. I can't tell from all the "High" features which one is the "Highest", and we're basically back to everything being a 1, except now we're calling it "High". Unless you've had a multi-core upgrade in your brain (or are someone like Hanselman, JP, or Ayende, who don't sleep), you do things one at a time and then move on. As developers and architects, we need to know what the most important thing to start with is, based on what the business needs.

With customers everything is important, but for planning's sake it just makes life easier to have a unique list of priorities rather than everything being #1. When all is said and done and I have to choose between 3 different #1s in a list, I'll pick one randomly based on how I feel that day. And that doesn't do your customer any good.
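If your stories live in a spreadsheet or tracker you can export, the "priority is a sequence" rule is easy to check mechanically. This is a minimal sketch under my own assumptions: the `Story` and `Backlog` types are hypothetical, and "valid" here means the priorities are exactly 1 through n with no ties or gaps.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical story record mirroring the spreadsheet columns above.
public class Story
{
    public readonly string Description;
    public readonly int Points;
    public readonly int Priority; // 1 = do this first; every story gets its own number

    public Story(string description, int points, int priority)
    {
        Description = description;
        Points = points;
        Priority = priority;
    }
}

public static class Backlog
{
    // True only when the priorities are exactly 1, 2, ..., n with no ties or gaps.
    public static bool IsStrictlyRanked(IList<Story> stories)
    {
        List<int> sorted = stories.Select(s => s.Priority).OrderBy(p => p).ToList();
        for (int i = 0; i < sorted.Count; i++)
        {
            if (sorted[i] != i + 1) return false;
        }
        return true;
    }

    // The order the team should pick up work: priority 1 first.
    public static List<string> WorkOrder(IList<Story> stories)
    {
        return stories.OrderBy(s => s.Priority).Select(s => s.Description).ToList();
    }
}
```

An "everything is 1" list fails the check immediately, which is exactly the conversation you want to have with the customer before the iteration starts rather than at the task wall.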

Okay, enough ranting this morning. I still haven't finished my first coffee and I still have a few dozen emails to go through.

-

Tree Surgeon has a new home... on CodePlex!

About a week or so ago Mike Roberts posted a note that he was no longer going to be working in the .NET world, as the Java world was taking over at his company. Mike is the author of many blog posts on setting up a development tree in .NET, and these blog posts spawned a tool called Tree Surgeon. As Mike was no longer going to be working in the .NET space, he threw down the gauntlet for someone to pick up maintenance of the tool.

I picked it up as I think it's a great tool and can only improve with time. You can find the new home for Tree Surgeon here on CodePlex.

I've set up all the documentation the same as on the original site, along with putting out version 1.1.1 (the last release). You can grab it in various flavours:

- TreeSurgeon-1.1.1-Setup.exe. Just click and install.

- TreeSurgeon-1.1.1.zip. Unzip and run.

- TreeSurgeon-1.1.1-Source.zip. Zip file of the source tree.

Source code is checked into CodePlex so you can grab the latest changesets from here.

There's plenty of ideas for Tree Surgeon in the coming year, so I encourage you to visit the Discussion Forums and talk about what you're interested in seeing and keeping track of (and voting on) new features and bugs via the Issue Tracker.

I've also changed the license for Tree Surgeon so it's now released under the Creative Commons Attribution-Share Alike 3.0 License (I'm not only a CodePlex junkie, I'm a CC one too).

-

Groking Rhino Mocks

If you've been in a cave for a while, there's an uber-cool framework out there called Rhino Mocks. Mocking frameworks let you create mock implementations of custom objects, services, and even databases, so you can focus on testing what you're really interested in. After all, do you really want to be bogged down while your unit tests open up a communication channel to a web service that might be there, only to test a business entity that happens to need a value from the service?

Rhino is a very cool framework that Oren Eini (aka Ayende Rahien aka the busiest blogger in the universe next to Hanselman and ScottGu) put together. It recently hit version 3.0 and is still going strong. If you don't know anything about mocks or are interested in Rhino, now is your opportunity. Oren has put together an hour-long video screencast (his first) that walks through everything you need to know about mocks (using Rhino, of course). I strongly recommend that any geek worth his or her salt sit down for an hour, grab some popcorn, and check out the screencast. You won't be disappointed.

You can download the screencast here (35 MB, which includes the video and the source code for the demo). You'll need the Camtasia TSCC codec to watch the video, which is available here. More info and a copy of Rhino itself can be found here.
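To see what a mock or stub buys you in the web service scenario above, here's a hand-rolled stub. This deliberately does not use Rhino Mocks (so it runs without the library); it shows the kind of test double that Rhino generates for you from an interface at runtime. `IExchangeRateService`, `Invoice`, and `StubExchangeRateService` are hypothetical names.

```csharp
using System;

// Hypothetical service that, in production, would call out to a web service.
public interface IExchangeRateService
{
    decimal GetRate(string fromCurrency, string toCurrency);
}

// Hypothetical business entity that happens to need a value from the service.
public class Invoice
{
    private readonly IExchangeRateService rates;
    public readonly decimal AmountInUsd;

    public Invoice(IExchangeRateService rates, decimal amountInUsd)
    {
        this.rates = rates;
        AmountInUsd = amountInUsd;
    }

    public decimal TotalIn(string currency)
    {
        return AmountInUsd * rates.GetRate("USD", currency);
    }
}

// Hand-rolled stub: returns a canned rate and records how it was called,
// so the test never touches the network.
public class StubExchangeRateService : IExchangeRateService
{
    public decimal RateToReturn;
    public int CallCount;
    public string LastToCurrency;

    public decimal GetRate(string fromCurrency, string toCurrency)
    {
        CallCount++;
        LastToCurrency = toCurrency;
        return RateToReturn;
    }
}
```

Writing one of these per interface gets old fast, which is where a framework like Rhino earns its keep: it builds the double and the call expectations for you.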

Enjoy!

-

A refactoring that escapes me

We had a mini code review on Friday for one of the projects. This is a standard thing for us now: code reviews basically every week, taking no more than an hour (and hopefully half an hour once we get into the swing of things). Code reviews are something you do to check the sanity of what someone did. Really. You wrote some code 6 months ago and now is the time to have some fresh eyes look at it and point out the stupid things you did.

Like this.

Here's the method from the review:

```csharp
protected virtual bool ValidateStateChangeToActive()
{
    if ((Job.Key == INVALID_VALUE) ||
        (Category.Key == INVALID_VALUE) ||
        (StartDate == DateTime.MinValue) ||
        (EndDate == DateTime.MinValue) ||
        (Status.Key == INVALID_VALUE) ||
        (Entity.Key == INVALID_VALUE) ||
        (String.IsNullOrEmpty(ProjectManagerId.ToString()) || ProjectManagerId == INVALID_VALUE) ||
        String.IsNullOrEmpty(ClientName) ||
        String.IsNullOrEmpty(Title))
    {
        return false;
    }
    return true;
}
```

It's horrible code. It's smelly code. Unfortunately, I can't think of a better way to refactor it. The business rule is that in order for this object (a Project) to become active (a project can be active or inactive), it needs to meet a set of criteria:

- It has to have a valid job number

- It has to have a valid category

- It has to have a valid start and end date (valid being non-null and in DateTime terms this means not DateTime.MinValue)

- It has to have a valid status

- It has to have a valid entity

- It has to have a valid non-empty project manager

- It has to have a client name

- It has to have a title

That's a lot of "has to have" but hey, I don't make up business rules. So now the dilemma is how to refactor this to not be ugly. Or is it really ugly?

The only thing I was tossing around in my brain was to use a State pattern to implement active and inactive states, but even with that I still have these validations to deal with when the state transition happens, and I'm back at a big if statement. Sure, it could be broken up into smaller if statements, but that's really not doing anything except formatting (and perhaps improving readability?).

Anyone out there got a better suggestion?
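One possible direction (a suggestion, not the project's code) is to turn each "has to have" into a named rule and evaluate the list. The flat `int` fields and the `-1` sentinel below are stand-ins I've assumed for `Job.Key`, `Category.Key` and friends, and the rule names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public class Project
{
    // Assumption: stand-in sentinel and flat fields in place of Job.Key, Category.Key, etc.
    public const int INVALID_VALUE = -1;

    public int JobKey = INVALID_VALUE;
    public int CategoryKey = INVALID_VALUE;
    public DateTime StartDate = DateTime.MinValue;
    public DateTime EndDate = DateTime.MinValue;
    public int StatusKey = INVALID_VALUE;
    public int EntityKey = INVALID_VALUE;
    public int ProjectManagerId = INVALID_VALUE;
    public string ClientName;
    public string Title;

    // One named rule per business requirement, in the same order as the list above.
    private IEnumerable<KeyValuePair<string, Func<bool>>> ActivationRules()
    {
        yield return Rule("job number", () => JobKey != INVALID_VALUE);
        yield return Rule("category", () => CategoryKey != INVALID_VALUE);
        yield return Rule("start date", () => StartDate != DateTime.MinValue);
        yield return Rule("end date", () => EndDate != DateTime.MinValue);
        yield return Rule("status", () => StatusKey != INVALID_VALUE);
        yield return Rule("entity", () => EntityKey != INVALID_VALUE);
        yield return Rule("project manager", () => ProjectManagerId != INVALID_VALUE);
        yield return Rule("client name", () => !String.IsNullOrEmpty(ClientName));
        yield return Rule("title", () => !String.IsNullOrEmpty(Title));
    }

    private static KeyValuePair<string, Func<bool>> Rule(string name, Func<bool> isSatisfied)
    {
        return new KeyValuePair<string, Func<bool>>(name, isSatisfied);
    }

    // Names of every rule that currently fails; handy for telling the user why.
    public List<string> BrokenActivationRules()
    {
        List<string> broken = new List<string>();
        foreach (KeyValuePair<string, Func<bool>> rule in ActivationRules())
        {
            if (!rule.Value()) broken.Add(rule.Key);
        }
        return broken;
    }

    protected virtual bool ValidateStateChangeToActive()
    {
        return BrokenActivationRules().Count == 0;
    }
}
```

The big if hasn't disappeared so much as turned into a list, but each rule now has a name you can report when activation fails, and adding a rule is one line.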

-

Expand your horizons, get introduced to C# 3.0

Scott Guthrie has an excellent summary post of some key new features in C# 3.0 that are coming in the next release of Visual Studio (codename Orcas). His post is short, sweet, and shows how you do something today and how it can change in the future.

This covers automatic properties and object and collection initializers (all of which I've been really digging in my test environment). Even with mocks, writing test code is that much easier in 3.0 (or is it 3.5 now? I always lose track) and the syntax isn't as cryptic as LINQ (which I'm still wrapping my noggin' around).
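Here's a small taste of the three features mentioned, the kind of thing that makes building test data painless. The `Customer` class and `InitializerDemo` helper are hypothetical and exist only to show the syntax.

```csharp
using System.Collections.Generic;

// Hypothetical class used only to illustrate the syntax.
public class Customer
{
    // Automatic properties: the compiler generates the private backing
    // fields you'd otherwise write by hand in C# 2.0.
    public string Name { get; set; }
    public int Age { get; set; }
}

public static class InitializerDemo
{
    public static List<Customer> BuildCustomers()
    {
        // The collection initializer calls Add() for each element, and each
        // object initializer sets properties right after the default constructor runs.
        return new List<Customer>
        {
            new Customer { Name = "Alice", Age = 34 },
            new Customer { Name = "Bob", Age = 27 }
        };
    }
}
```

In C# 2.0 the same setup would be two fields and four property bodies in `Customer`, plus a `new`, two assignments, and an `Add` call per customer.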

Check out Scott's post here to get introduced to the new C# features.

-

What's in your OSS box?

Following on the heels of Jeremy Miller and JP, here's a list of my open source tools in my tool chest. My list may be surprising to some.

- Enterprise Libraries. Some people hate 'em and some people blast them for being "too big", but they work. Free logging, exception handling (via policies I dictate through an XML file), and other goodies. Version 3.0 adds a validation framework for business objects and even more stuff.

- Composite UI Application Block (CAB). A library that provides a framework for building composite applications. It lets me modularize things and not worry about the plumbing to make things talk to each other (thanks to an easy-to-use event broker system). It also includes ObjectBuilder to boot, which is a framework for building dependency injection systems.

- Smart Client Software Factory. Another framework (and collection of guidance packages) that jumpstarts building Smart Client applications. Basically provides a hunk of code I would normally have to write to locate services, load modules, and generally be a good Smart Client citizen.

- NAnt. Can't stand MSBuild and wouldn't give it the time of day. NAnt is my savior when I need to automate a quick task.

- NUnit. Again, MSTest just doesn't measure up when it comes to integration with my other tools, and most everything is written these days with NUnit examples. Okay, so NUnit isn't as powerful as, say, MbUnit, but I just love the classics.

- CruiseControl.NET. The ThoughtWorkers are awesome dudes of power and CC.NET is just plain simple (see my struggle with Team City recently).

- TestDriven.NET. A great tool that I can't live without.

- NCover/NCoverExplorer. I just love firing up TestDriven.NET with coverage and seeing 100% in all my code. Where I'm lacking, it points it out easily and I just write a new test to get my coverage up.

- Subversion. I have a local copy of Subversion running so I can just do quick little spikes and maybe file the code away for a rainy day on an external drive.

- TortoiseSVN. And CVS I guess when I have to access CVS repositories out there in internet land. I'm still waiting for a TortoiseTFS.

- RhinoMocks. I started writing mocks last year and haven't looked back since. While it does take some getting used to, if you start writing mocks and doing TDD with them you'll end up with a better looking system (rather than trying to mock things out after the fact). Rhino is the way to go for mocking IMHO.

- Notepad++. Lots of people have various notepad replacements, but I prefer this puppy.

- WinMerge. Great tool for doing diffs of source code or entire directories.

This is what's in my toolbox today and I use on a daily basis (and there's probably more but I haven't had enough Jolt tonight to bring them out from the depths of my grey matter). I've glossed over and checked out various other tools like NHibernate, Windsor, iBatis, and even Boo but they're not something I use all the time.

While I don't have as many as the boys, I think I have what I need right now. What's surprising about the list is that some of it is from Microsoft, which flies in the face of people who say "We only use Microsoft": even then you *can* use OSS tools, because some of them come from the evil empire. Even with just the Microsoft items, I'm covered for things like dependency injection and separation of concerns. The Microsoft tools don't fare nearly as well as, say, Windsor or StructureMap, but they still do what I need them to.

-

Tuesday Night Downloads

Two downloads I thought I would toss out there for your feed readers to consume.

Visual Studio 2005 Service Pack 1 Update for Windows Vista

During the development of Windows Vista, several key investments were made to vastly improve overall quality, security, and reliability from previous versions of Windows. While we have made tremendous investments in Windows Vista to ensure backwards compatibility, some of the system enhancements, such as User Account Control, changes to the networking stack, and the new graphics model, make Windows Vista behave differently from previous versions of Windows. These investments impact Visual Studio 2005. The Visual Studio 2005 Service Pack 1 Update for Windows Vista addresses areas of Visual Studio impacted by Vista enhancements.

Sandcastle - March 2007 Community Technology Preview (CTP)

Sandcastle produces accurate, MSDN style, comprehensive documentation by reflecting over the source assemblies and optionally integrating XML Documentation Comments. No idea what's changed in this CTP but now that NDoc is dead and buried, like Obi-Wan, this is our only hope.

-

NetTiers gets a Wiki (a real one)

One of my favourite tools is NetTiers. Calling a template a tool might be a stretch, but it's my primary reason for using CodeSmith. NetTiers is basically a set of CodeSmith templates that, when pointed at a database, gives you a nicely separated, layered set of .NET projects for accessing your data. Basically an auto-generated data access layer. One class for each table in your schema, one property for each column, methods for stored procs, wrappers for accessing aggregates like getting all records (by primary or foreign keys), and other good stuff. I've used it for my data access layer in a few projects and even used the autogenerated website, either as a starter for sites or for entire sites where all I needed was a set of pages for data maintenance.

Documentation is always a bear for any tool (free or otherwise) and NetTiers is no different. The templates generate 10,000 lines of code, hundreds of classes (depending on how many tables you have) and it's a lot to take in. The forums they have are good but it's hard to find information (even with search) and information quickly becomes out of date without any care and feeding. Wikis can help (but not solve) that by turning to the community for contributions. I'm a firm believer that it's easier to have 10 monkeys write 10 pages than 1 person writing 100 pages of documentation (and we all know who the monkey is here).

So now the NetTiers team has set up a real wiki here just for that purpose. Sign up for an account and start contributing if that's your thing. This is an improvement over the previous "wiki" they had set up, but that effort wasn't quite optimal: it was closed to the public and only contained a handful of canned pages. In any case, the new one is out in the open and ready to go. So if you're a consumer or contributor (or both, like me), check it out!