Contents tagged with Agile

-

Calgary Agile Project Leadership Network (APLN) 2007/2008 Kick-Off

Calgary APLN is a local chapter of the Agile Project Leadership Network (APLN). The APLN is a non-profit organization that looks to enable and cultivate great project leaders. I've worked with Janice before and Mike is a well known person in the Agile community and an awesome presenter so check this event out.

Description: The Calgary chapter of the Agile Project Leadership Network (APLN) invites you to the APLN 2007/2008 Season Kick-Off Meeting.

Guest Speakers: Janice Aston and Mike Griffiths

Date: Wednesday, October 17th, 2007

Time: 12:00pm - 1:00pm

Location: Fifth Avenue Place Conference Room, Suite 202, 420 - 2 St. S.W.

Come and experience Agile planning in action at the Calgary APLN season kick-off meeting. If you are interested in how to run effective agile projects here is your opportunity to help choose the presentation topics for this season's talks and workshops.

Agenda

- Welcome and overview of Calgary APLN group

- Report on new initiatives from the APLN

- Brainstorming of topics for 2007/2008 season

- Affinity grouping and ranking of topics

- Top 5 list identified

About the Speakers

Janice Aston has over 16 years of project management experience with an emphasis on delivering business value. She is passionate about building high performing teams focused on continuous improvement. Janice has a proven track record delivering on project commitments with a heart for leadership and people. She has recently founded Agile Perspective Inc. specializing in creative collaboration.

Mike Griffiths is an independent project manager and trainer with over 20 years of IT experience. He is active in both the agile and traditional project management communities and serves on the board of the APLN & Agile Alliance, and teaches courses for the PMI. Mike founded the Calgary chapter of the APLN in 2006 and maintains the Agile Leadership site www.LeadingAnswers.com .

Please visit www.CalgaryAPLN.org for more details and to sign up for this event.

-

Alt.NET, stop talking just do it!

Hopefully the last of my Alt.NET soapbox posts for the day. There was a post by Colin Ramsay that, while quite negative about the whole Alt.NET thing (it was called Abandon Alt.NET), contained a single nugget that I thought was just right for the moment:

If they really wanted to change things then they should be writing about their techniques in detail, coming up with introductory guides to DDD, TDD, mocking, creating screencasts, or giving talks at mainstream conferences, or producing tools to make the level of entry to these technologies lower than it is.

I argue we've been doing this. Just visit the blogs at CodeBetter, Weblogs, and ThoughtWorks (these are just three aggregates that collect up a bunch of musings from Alt.NET people, there are others as well as one-offs). There's noise to the signal, so you have to sift through it but the good stuff is there if you look hard enough.

I totally agree with Justice (and others) in what he said on the mailing list:

Looking at this from a perspective of the conference participants being the developers and the general .NET community being the "client" in this case, how much value is the "client" going to derive from either:

a) what our mission statement is

b) what we choose to name this group?

in comparison to actual involvement with devs, recaps of sessions, evangelism efforts?

So just do it. Enough with the name bashing, mission identity, who is and who isn't, and all that fluff. No fluff. Just code. Just go out and write. Blog. Present. Mentor. Learn. And if you're already doing that, you're ahead of the game.

-

!Alt.NET

I live in an Alt.* world. Have been all my life. I prefer the alternate movies over mainstream. I would rather sit and watch an art film from 1930s German cinema than the latest slap-fest from Ben Stiller. I prefer alternate music over mainstream. Give me Loreena McKennitt or Mike Oldfield over Britney Spears and Justin Timberlake any day. So it's only natural I'm sucked toward the Alt.NET way of software development.

Back before I was into this software thing I was an artist. I jumped from graphic design to commercial advertising. During my 7 year itch I spent a good part of it in comics, and more precisely the alternate comics. I never tried out for Marvel or DC (although a Marvel guy who shall remain nameless liked my stuff and invited me down to New York to talk to them) so the alternate scene for me was Dark Horse, Vertigo, and Image. These were the little guys. The guys who preferred glossy paper over stock comic newsprint. The guys who were true to reality and weren't afraid to show murder, death, kill in the pages. I did a comic once about drug dealers in Bogota, Colombia (and the band of 5 guys [think A-Team but cooler] who would bring them down). The writer put something simple on the page like "the drug industry in Colombia was everywhere". If I was at Marvel or DC, one might draw the factories and lots of trucks, people packaging up the drugs, and shipping them off to the Americas. However this was Alt.Comic land and we told it like it was. I thought showing drug addicts (including one guy shooting up in an alley on one panel) mixed in with the tourists was the way to go. It was deemed a little racy and I was asked to tone it down, but it wasn't censorship and in the end I got to express what I really intended to do. I felt like I had made a difference and wasn't going to let the mainstream way of doing things cloud my judgement.

Alright, back to software development. I still don't know if I'll call myself an Alt.NETter simply because I'm not sure it's clear what that means. Any Alt.* movement in the world has its basis in reality. Alternate art, movies, and music were created as a way to exercise expression of freedom, not just to be different. What is it that we look at in the "mainstream" way of software development that bothers us (enough to create an Alt.NET way)? This really doesn't have anything to do with Microsoft, does it? However, many people have tagged MS as the "evil empire" and decided that using Microsoft tools is the wrong way and Alt.NET is the right way. Even the name seems to resonate against .NET and the way Microsoft does things.

To me, Alt.NET means doing things differently than some whitepaper or robotic manager tells you how to do it because that's how it's been done for years. Alt.NET is any deviation from the "norm" when that norm doesn't make sense anymore. Maybe it made sense to shuttle fully blown DataSets across the wire at the time, because the developer who wrote it didn't know any better. However in time, as any domain evolves, you understand more and more about the problem and come to a realization you only need these two pieces of information, not the whole bucket. And a simple DTO or ResultObject will do. So you change. You refactor to a better place. And you become an Alt.NETter.

It's not about doing things differently for difference's sake. You see a flaw in something and want to correct it (hopefully for the better). Perhaps BizTalk was chosen as a tool when something much smaller and easier to manage would have worked (even a RYO approach), say for dozens of transactions a day instead of thousands, with no monitoring required. If there's pain and suffering in using a tool or technology, don't use it. When you go to the doctor and say "Doc, it hurts when I do this" and he replies with "Don't do that", that's what we're talking about here. If it pains you to go in and maintain something because of the way it was built, then there's a first order problem here in how something was built (but not necessarily the tools used to do the job). That's my indicator that something isn't right and there must be a better way.

As software artists we all make decisions. We have to. Sometimes we make the right ones, sometimes not so right. However it is our responsibility, if we choose to write good software, to make the right decisions at the right time. Picking a tool because it's cool doesn't make it right. Tomorrow that tool might be the worst piece of crap on the planet because it wasn't built right in the first place. Software is an art and a science. There are principles we apply, but we have to apply them with some knowledge and foresight. Even applying the principles from the Agile Manifesto requires the right context. Individuals and interactions over processes and tools. We stay true to these principles but that doesn't mean we abandon the others. I use Scrum every day and pick the right tools for the right job where possible. It's a balance and not something easy to maintain. If all you do is stick your head down and code without looking around to see what's going on around you, you're missing the point. Like Scott Hanselman said, you're a 501 developer and don't really care about what you're doing. You might as well be replaced with a well written script. For the rest of us, we have a passion about this industry and want to better it. This means going out and telling everyone about new tools and techniques, demonstrating good ways to use them, explaining what new concepts like DDD and BDD mean, and most of all being pragmatic about it and accepting criticism where we can improve ourselves and the things we do.

I suppose you can call it alternative software development. I think it's software development with an intelligent and pragmatic approach. Choose the right tool for the right job at the right time and be open and adaptable to change.

-

Quotes from the ALT.NET conference

Unfortunately I couldn't make it out with my Agile folks to the ALT.NET conference but from the blogs, various emails and IM's and the photos it sure looked like a blast. 97 geeks (Wendy, Justice and myself couldn't make it but there were probably others) got together and partied only like geeks can do. While I wasn't there, here are some quotes that came out of the conference. Some to think deeply about, others to just... well, you decide. Remember to use this knowledge for good and not evil.

"Alt.net is in the eye of the beholder"

"Oh I spelled beer wrong" -Dave

"Savvy?" -Scott Hanselman

"Scott, it's Morts like you..." -Scott Guthrie to Scott Bellware

"Programmers Gone Wild"

"There's the butterflies: then there's the HORNETS" -B. Pettichord

"I think 'grokkable' is more soluble then solubility" -Roy Osherove

"MVC is that thing that wraps URLs"

You can view (and contribute!) the altnetconf Flickr pool here. There's also a Yahoo group setup here if you want to carry on with the discussions since Alt.net isn't only about being at a conference.

-

Scrum for Team System Tips

As I'm staring at my blank Team System setup waiting for the system to work again, I thought I would share a few Scrum for Team System tips with you. SFTS has done a pretty good job for us (much better than the stock templates Team System comes with) but it does have its issues and problems (like the fact that PBIs are expressed in days and SBIs are expressed in hours, which totally messes with the Scrum concept and makes PMs try to calculate "hours per point"). I'm really digging Mingle though and will be blogging more on that as we're piloting it for a project right now and I'm considering setting up a public one for all of my projects (it's just way simpler than Rally, VersionOne or any other Agile story management tool on the planet, hands down).

So here's a few tips that I've picked up using Scrum for Team System that might be helpful to know (should you or any of your Agile force be put in this position):

- When a sprint ends, all outstanding PBIs should be moved to the next sprint and the sprint marked as done.

- The amount of work done in the sprint (SBIs completed) gives you the capacity (velocity) for the team for the next sprint.

- Only allocate one sprint's worth of PBIs to a sprint when planning and try to estimate better based on previous sprint data.

- When the customer decides the product is good enough for shipping have a release sprint where the goal is to "mop up" bugs and polish the product ready for shipping. This would include new PBIs like:

  - Fix outstanding bugs

  - Create documentation

  - Package/create MSIs

- Never attach new SBIs to previously closed PBIs. If the customer changes his mind about the way something is implemented, it is recorded as a new PBI because the requirement has changed.

Definitions:

PBI: Product Backlog Item, can be functional requirements, non-functional requirements, and issues. Comparable to a User Story, but might be higher level than that (like a Theme, depends on how you do Scrum)

SBI: Sprint Backlog Item, tasks to turn Product Backlog Items into working product functionality and support a Product Backlog requirement for the current Sprint.


-

Nothin but .NET - Tips and Tricks - Day 3

Coffee, coffee, coffee. Oh we need coffee. Okay, got it, now we're ready. It's been a crazy week and it's getting crazier. JP is coding at about 8,000 words and using about 40,000 ReSharper shortcuts per minute now. He's also talking at about 10,000 words a minute. If you blur your eyes when looking at him, he starts to look like Neo in the Matrix. There is no mouse.

Service Layers are Tasks

I really like this paradigm of naming the service layer classes. Rather than having something like CustomerService.GetAllCustomers(), it's named CustomerTasks.GetAllCustomers(). I've always used the ServiceXXX naming strategy but it makes more sense to name things as tasks because in reality, they are tasks. Tasks in the service layer to serve up information to external consumers. It's about readability so choose whatever works for you.

Recording Ordered Mocks

We were working on the database today (gasp) but started mocking out a data gateway. I found it amusing that we couldn't record an ordered mock (but there was a way).

Hey Oren, check this out (in case you didn't know). This block fails:

    [Test]
    public void Should_be_able_to_get_a_datatable_from_the_database_ordered_failure()
    {
        using (mockery.Ordered())
        {
            using (mockery.Record())
            {
                Expect.Call(mockFactory.Create()).Return(mockConnection);
                Expect.Call(mockConnection.CreateCommandForDynamicSql("Blah")).Return(mockCommand);
                SetupResult.For(mockCommand.ExecuteReader()).Return(mockReader);
                mockConnection.Dispose();
            }

            using (mockery.Playback())
            {
                DataTable result = CreateSUT().GetADataTableUsing("Blah");
                Assert.IsNotNull(result);
            }
        }
    }

The error is:

    [failure] DBGatewayTest.Setup.Should_be_able_to_get_a_datatable_from_the_database_ordered_failure.TearDown
    TestCase 'DBGatewayTest.Setup.Should_be_able_to_get_a_datatable_from_the_database_ordered_failure.TearDown'
    failed: Can't start replaying because Ordered or Unordered properties were call and not yet disposed.
    System.InvalidOperationException
    Message: Can't start replaying because Ordered or Unordered properties were call and not yet disposed.
    Source: Rhino.Mocks

In this case, the mock objects were created from interfaces that inherited from IDisposable. However it was a simple change to put the ordered call inside the record (because we don't care about ordering on playback). This slight change now works:

    [Test]
    public void Should_be_able_to_get_a_datatable_from_the_database()
    {
        using (mockery.Record())
        {
            using (mockery.Ordered())
            {
                Expect.Call(mockFactory.Create()).Return(mockConnection);
                Expect.Call(mockConnection.CreateCommandForDynamicSql("Blah")).Return(mockCommand);
                SetupResult.For(mockCommand.ExecuteReader()).Return(mockReader);
                mockConnection.Dispose();
            }
        }

        using (mockery.Playback())
        {
            DataTable result = CreateSUT().GetADataTableUsing("Blah");
            Assert.IsNotNull(result);
        }
    }

Just in case you ever run into this problem one day.

More Nothin

JP is doing this 5-day intensive course, which stretches people to think outside the box of how they typically program and delve into realms they have potentially not even thought about yet, about once per month. For the next little while (he's a busy dude) he's going to be a travelin' dude. In October he'll be in New York City. In September he'll be in London, England and other locations are booked up into the new year.

If you want to find out more details about the course itself, check out the details here and be sure to get on board with the master.

-

Being descriptive in code when building objects

As we're working through the course, I've been struggling with some constructors of objects we create. For example, in the first couple of days the team was writing unit tests around creating video game objects. This would be done by specifying a whack of parameters in the constructor.

    [Test]
    public void Should_create_video_game_based_on_specification_using_classes()
    {
        VideoGame game = new VideoGame("Gears of War",
                                       2006,
                                       Publisher.Activision,
                                       GameConsole.Xbox360,
                                       Genre.Action);

        Assert.AreEqual(game.GameConsole, GameConsole.Xbox360);
    }

This is pretty typical as we want to construct an object that's fully qualified and there are two options to do this: either via a constructor or by setting properties on an object after the fact. Neither of these really appeals to me so we talked about using the Builder pattern along with being fluent in writing a DSL to create domain objects.

For the same test above to create a video game domain object, this reads much better to me than to parameterize my constructors (which means I might also have to chain my constructors if I don't want to break code later):

    [Test]
    public void Should_create_video_game_based_on_specification_using_builder()
    {
        VideoGame game = VideoGameBuilder.
            StartRecording().
            CreateGame("Gears of War").
            ManufacturedBy(Publisher.Activision).
            CreatedInYear(2006).
            TargetedForConsole(GameConsole.Xbox360).
            InGenre(Genre.Action).
            Finish();

        Assert.AreEqual(game.GameConsole, GameConsole.Xbox360);
    }

Agree or disagree? The nice thing about this is I don't have to specify everything here. For example I could create a video game with just a title and publisher like this:

    VideoGame game = VideoGameBuilder.
        StartRecording().
        CreateGame("Gears of War").
        ManufacturedBy(Publisher.Activision).
        Finish();

This avoids a problem I run into when using constructors: I don't have to overload the constructor for every combination of values I might want to supply.

Here's the builder class that spits out our VideoGame object. It's nothing more than a bunch of methods that return a VideoGameBuilder and the Finish() method that returns our object created with all its values.

    internal class VideoGameBuilder
    {
        private VideoGame gameToBuild;

        public VideoGameBuilder()
        {
            gameToBuild = new VideoGame();
        }

        public static VideoGameBuilder StartRecording()
        {
            return new VideoGameBuilder();
        }

        public VideoGameBuilder CreateGame(string title)
        {
            gameToBuild.Name = title;
            return this;
        }

        public VideoGameBuilder ManufacturedBy(Publisher manufacturer)
        {
            gameToBuild.Publisher = manufacturer;
            return this;
        }

        public VideoGameBuilder CreatedInYear(int year)
        {
            gameToBuild.YearPublished = year;
            return this;
        }

        public VideoGameBuilder TargetedForConsole(GameConsole console)
        {
            gameToBuild.GameConsole = console;
            return this;
        }

        public VideoGameBuilder InGenre(Genre genre)
        {
            gameToBuild.Genre = genre;
            return this;
        }

        public VideoGame Finish()
        {
            return this.gameToBuild;
        }
    }

This makes my tests read much better but even in say a presenter or service layer, I know exactly what my domain is creating for me and I think overall it's a better place to be for readability and maintainability.

This isn't anything new (but it's new to me and maybe to my readers). You can check out the start of it via Martin Fowler's post on fluent interfaces, and the followup post early this year on ExpressionBuilder which matches what the VideoGameBuilder class is doing above. And of course Rhino uses this type of syntax. I guess if I'm 6 months behind Martin Fowler in my thinkings, it's not a bad place to be.

-

Nothing but .NET - Tips and Tricks - Day 2

It's the second day here with JP and crew. Yes, posting on the 4th day isn't quite right is it, but 12 hours locked up in a room with JP is enough to drive anyone to madness. Really though, it's just completely draining and for me I have an hour transit ride and a 45 minute drive to get home so needless to say, sometimes I get home and just crash. Anyways, expect days 3 and 4 to follow later today.

Okay so back to the course. After the gang's morning trip to Starbucks we're good to go for the day (and it's going to be a long one).

Decorators vs. Proxies

Small tip for those using these patterns: decorators must forward their calls on to the objects they're decorating; proxies do not have to. So if you have some kind of proxy (say to a web service or some security service) the proxy can decide whether or not to forward the call on to the system. When using decorators, all calls get forwarded on, so decorators need to be really dumb and the consumer needs to know that whatever it sends the decorator, it'll get passed down the line no matter what. Good to know.
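To make the distinction concrete, here is a minimal sketch in C#. None of these types come from the course; IOrderService, OrderService, LoggingOrderService and SecureOrderServiceProxy are hypothetical names made up for illustration. The decorator adds a bit of behaviour and always forwards, while the proxy gets to decide whether the real object is called at all.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical interface shared by the real service, the decorator and the proxy.
public interface IOrderService
{
    string PlaceOrder(string item);
}

// The real object that does the work.
public class OrderService : IOrderService
{
    public string PlaceOrder(string item)
    {
        return "ordered " + item;
    }
}

// Decorator: adds behaviour (here, a call log) but always forwards the call.
public class LoggingOrderService : IOrderService
{
    private readonly IOrderService inner;
    public readonly List<string> Log = new List<string>();

    public LoggingOrderService(IOrderService inner)
    {
        this.inner = inner;
    }

    public string PlaceOrder(string item)
    {
        Log.Add("PlaceOrder(" + item + ")");
        return inner.PlaceOrder(item); // unconditional forward
    }
}

// Proxy: decides whether the call reaches the real service at all.
public class SecureOrderServiceProxy : IOrderService
{
    private readonly IOrderService target;
    private readonly bool callerIsAuthorized;

    public SecureOrderServiceProxy(IOrderService target, bool callerIsAuthorized)
    {
        this.target = target;
        this.callerIsAuthorized = callerIsAuthorized;
    }

    public string PlaceOrder(string item)
    {
        if (!callerIsAuthorized)
        {
            return "denied"; // the real service is never called
        }
        return target.PlaceOrder(item);
    }
}
```

Wrapping an OrderService in LoggingOrderService always gives the consumer the real service's answer back, plus a log entry. Wrapping it in SecureOrderServiceProxy with callerIsAuthorized set to false returns "denied" without ever touching the real service.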

ReSharper Goodness

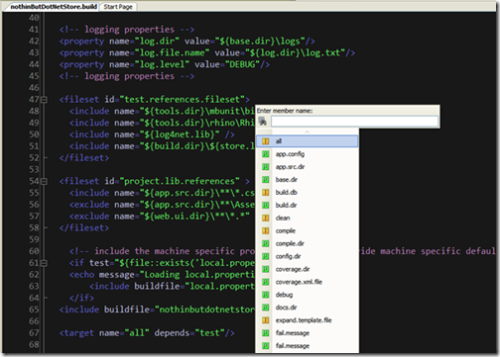

I'm always happy seeing new stuff with ReSharper and I don't know why I didn't get this earlier. NAnt support in Visual Studio with ReSharper. Oh joy, oh bliss. I was struggling with NAntExplorer, which never really worked. I never realized ReSharper provides NAnt support, until now. Load up your build script and hit Ctrl+F12 to see all the items, or do a rename of a task or property. Awesome!

Builds and Local Properties

A new tip I picked up is using local property files and merging them with build scripts via NAnt. Traditionally it's always been a challenge when dealing with individual developers' machines and setups. Everyone is different as to what tools they have installed, where things are installed to, and even what folders they work in. A clever trick I've done (at least I thought it was clever) was to create separate environment configurations with a naming standard, then use post-build events (NAnt would have worked as well) to copy them as the real app.config or web.config files. This is all fine and dandy, but still has limitations. I think I've found a utopia with local property files now.

So you have your build script but each developer has a different setup for their local database. Do you create a separate file for each user? No, that would be a maintenance nightmare. Do you force everyone to use the same structure and paths? No, that would restrict creativity and potentially require existing setups to change. The answer my friend is blowing in the wind, and that wind is local property files merged into build scripts to produce dynamic configurations.

Typically you would create say an app.config file that would contain say your connection string to a database. Even with everyone on-board and using a connection string like "localhost", you can't ensure everyone has the tools in the same place or whatever. This is where a local property file comes into play. For your project create a file called something like local.properties.xml.template. It can contain all the settings you're interested in localizing for each developer and might go like this:

    <?xml version="1.0"?>
    <properties>
      <property name="sql.tools.path" value="C:\program files\microsoft sql server\90\tools\binn\" />
      <property name="osql.connectionstring" value="-E"/>
      <property name="osql.exe" value="${sql.tools.path}\osql.exe" />
      <property name="initial.catalog" value="NothinButDotNetStore"/>
      <property name="config.connectionstring" value="data source=(local);Integrated Security=SSPI;Initial Catalog=${initial.catalog}"/>
      <property name="devenv.dir" value="C:\program files (x86)\microsoft visual studio 8\Common7\IDE"/>
      <property name="asp.net.account" value="NT Authority\Network Service"/>
      <property name="database.provider" value="System.Data.SqlClient" />
      <property name="database.path" value="C:\development\databases" />
      <property name="system.root" value="C:\windows\"/>
    </properties>

In this case I have information like where my tools are located, what account to use for security, and what my connection string is. Take the template file and rename it to local.properties.xml, then customize it for your own environment. This file does not get checked in (the template does, but not the customized file).

Then inside your NAnt build script you'll read this file in and merge the properties from this file into the main build script. Any properties with the same name will be overridden and used instead of what's in your build script so this gives you the opportunity to create default values (for example, default database name). If the developer doesn't provide a value the default will be used. The NAnt task is simple as it just does a check to see if the local properties file is there and then uses it:

    <!-- include the machine specific properties file to override machine specific defaults -->
    <if test="${file::exists('local.properties.xml')}">
      <echo message="Loading local.properties.xml" />
      <include buildfile="local.properties.xml" />
    </if>

That's it. Now each developer can have everything set up his own way. For example I only have SQL Express installed but the others have SQL Server 2005 installed. We're all using the same build scripts because my local properties file contains the path to the osql.exe that got installed with SQL Express and my connection string connects to MACHINENAME\SQLEXPRESS (the default instance name).

Finally, these values are used to spit out app.config or web.config files. The key thing here is that we don't have an app.config file inside the solution. Only a template is there, which is merged with the properties from the build script to generate dynamic config files that are specific to each environment (and again, not checked in to the build).
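The post doesn't show the step that generates the config file, so here is one way it could look, as a sketch rather than what the course build script actually does. It assumes a checked-in template called app.config.template (a hypothetical name) that contains placeholder tokens such as connectionString="@CONFIG_CONNECTIONSTRING@", and it uses NAnt's copy task with a replacetokens filter to swap in the merged property values. The target name and token names are made up for illustration.

```xml
<!-- Hypothetical target: generates app.config from a checked-in template. -->
<target name="generate.config">
  <copy file="app.config.template" tofile="app.config" overwrite="true">
    <filterchain>
      <!-- Tokens appear in the template as @KEY@ (NAnt's default delimiters). -->
      <replacetokens>
        <token key="CONFIG_CONNECTIONSTRING" value="${config.connectionstring}" />
        <token key="DATABASE_PROVIDER" value="${database.provider}" />
      </replacetokens>
    </filterchain>
  </copy>
</target>
```

Because the properties are resolved after local.properties.xml has been included, each developer's generated app.config picks up that developer's own values, and the generated file stays out of source control.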

BTW, the whole build process, build scripts and differences from deployments could be an entire blog post so I don't think I'm finished with this topic just yet.

Notepad++

I'm a huge fan of Notepad++ and use it all the time. I have it configured on my system to be the default editor, default viewer for source code in HTML pages, and well... the default everything. I was a little surprised when our build ran MbUnit and it launched Notepad. I couldn't find anywhere to change this and I'm told that it's using the default editor. That's a little odd to me because Notepad++ is my default editor. So maybe someone has info out there as to how to get it to launch my editor instead of Notepad? Either that or I need to physically replace Notepad.exe with Notepad++.exe (which can be done, instructions on the NP++ site).

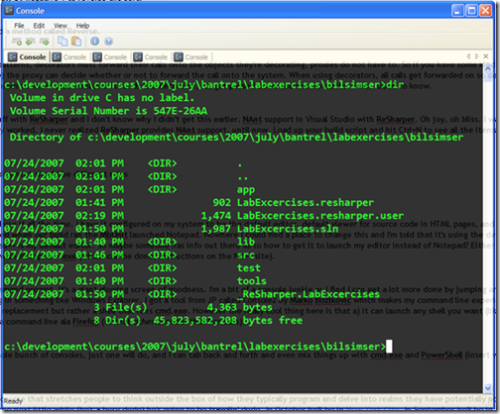

New Cool Tool of the Day

Console2. When I launch my command prompt, it's a giant glowing screen of goodness. I'm a bit of a console junkie as I find I can get a lot more done by jumping around in the console rather than hunting and pecking in something like Windows Explorer. I got a tool from JP called Console2 by Marko Bozikovic which makes my command line experience that much better. It's not really a cmd.exe replacement but rather a shell that runs cmd.exe. However the really cool thing here is that a) it can launch any shell you want (like PowerShell) b) supports transparency (even under Windows XP) and c) it has a tabbed interface. It's like a command line ala Firefox (or that other browser that has tabs).

Now I don't have to launch a whole bunch of consoles, just one will do, and I can tab back and forth and even mix things up with cmd.exe and PowerShell (insert whatever shell you like here as well).

Wrap up

Another draining day but full of good things to inject in your head. I can't say enough praise for this course, although things are moving along much slower than I would like them to, it's still a great experience and I highly recommend it for everyone, even seasoned pros.

As I mentioned before, I'm trying to get away from the mouse. I have always found the mouse to be a problem. It's the context switching from 1. take hand away from keyboard 2. move hand to mouse 3. move mouse 4. move hand back to keyboard. Bleh. Yesterday was a mostly-keyboard day, today I'm going commando with all keyboard as I have my mouse locked up and hopefully won't need it at all.


-

Nothin but .NET course - Tips and Tricks - Day 1

I'm currently popping in and out of Jean-Paul Boodhoo's Nothin but .NET course for the week and having a blast. We brought JP in-house to the company I'm at right now for his .NET training course, which is arguably the best .NET course out there. Period.

I don't claim to be an expert (at least I hope I've never claimed that) and I'm always looking for ways to improve myself and add new things to my gray matter that I can hopefully recall and use later. Call them best practices, call them tips and tricks, call them rules to live by. Over the course of the week I'll be blogging each day about what's going on so you can live vicariously through me (but you really need to go on the course to soak in all of JP and his .NET goodness).

So here we go with the first round of tips:

File -> New -> Visual Studio Solution

This is a cool thing that I wasn't aware of (and makes me one step closer to not using the mouse, which is a good thing). First, we have a basic empty Visual Studio .sln file. This is our template for new solutions. Then create a new registry file (VisualStudioHack.reg or whatever you want to call it) with these contents:

    Windows Registry Editor Version 5.00

    [HKEY_CLASSES_ROOT\.sln\ShellNew]
    "FileName"="Visual Studio Solution.sln"

Double-click the .reg file and merge the changes into your registry. The result is that you now will have a new option in your File -> New menu called "Microsoft Visual Studio Solution". This is based on your template (the empty .sln file) that you provide so you can put whatever you want in here, but it's best to just snag the empty Visual Studio Solution template that comes with Visual Studio. Very handy when you just need a new solution to start with and don't want to start up Visual Studio and navigate through all the menus to do this.

MbUnit

MbUnit rocks over NUnit. This was my first exposure to the row test, and while you can abuse the feature, it really helps cut down on writing a ton of tests or (worse) one test with a lot of entries.

In NUnit let's say I have a test like this:

    [Test]
    public void ShouldBeAbleToAddTwoPositiveNumbers()
    {
        int firstNumber = 2;
        int secondNumber = 2;
        Calculator calculator = new Calculator();
        Assert.AreEqual(firstNumber + secondNumber, calculator.Add(firstNumber, secondNumber));
    }

And I need to test boundary conditions (a typical thing). So I've got two options. First option is to write one test per condition. The second is to write a single test with multiple asserts. Neither is really appealing. Having lots of little tests is nice, but a bit of a bear to maintain. Having a single test with lots of asserts means I have to re-organize my test (hard coding values) and do something like this in order to tell which test failed:

    [Test]
    public void ShouldBeAbleToAddTwoPositiveNumbers()
    {
        Calculator calculator = new Calculator();
        Assert.AreEqual(2 + 2, calculator.Add(2, 2), "could not add positive numbers");
        Assert.AreEqual(10 + -10, calculator.Add(10, -10), "could not add negative number");
        Assert.AreEqual(123432 + 374234, calculator.Add(123432, 374234), "could not add large numbers");
    }

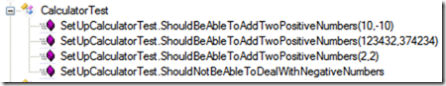

Or something like that, but you get the idea. Tests become ugly looking and they feel oogly to maintain. Enter MbUnit and the RowTest. The test above becomes a parameterized test that looks like this:

    [RowTest]
    public void ShouldBeAbleToAddTwoPositiveNumbers(int firstNumber, int secondNumber)
    {
        Assert.AreEqual(firstNumber + secondNumber, calculator.Add(firstNumber, secondNumber));
    }

Now I can simply add more Row attributes, passing in the various boundary conditions I want to check like so:

    [Row(2, 2)]
    [Row(10, -10)]
    [Row(123432, 374234)]
    [RowTest]
    public void ShouldBeAbleToAddTwoPositiveNumbers(int firstNumber, int secondNumber)
    {
        Assert.AreEqual(firstNumber + secondNumber, calculator.Add(firstNumber, secondNumber));
    }

That's cool (and almost enough to convince me to switch) but what's uber cool about this? In the MbUnit GUI runner, it actually looks like separate tests and if a Row fails on me, I know exactly what it was. It's a failing test out of a set rather than a single test with one line out of many asserts.

As with any tool, you can abuse this so don't go overboard. I think for boundary conditions and anywhere your tests begin to look crazy, this is an awesome option. I haven't even scratched the surface with MbUnit and its database integration (I know, databases in unit tests?) but at some point you have to do integration testing. What better way to do it than with unit tests? More on that in another blog.

Subject Under Test

This term comes (at least my first exposure to it did) from Gerard Meszaros' excellent book xUnit Test Patterns, and he uses it throughout. It makes sense, as any unit test is going to be testing a subject, so we call it the Subject Under Test. One nomenclature that JP is using in his tests is this:

    private ICalculator calculator;

    [SetUp]
    public void SetUpCalculatorTest()
    {
        calculator = CreateSUT();
    }

    private ICalculator CreateSUT()
    {
        return new Calculator();
    }

So basically every unit test has a method called CreateSUT() (where appropriate) which creates an object of whatever type you need and returns it. I'm not sure this replaces the ObjectMother pattern that I've been using (and it's really not a pattern but more of a naming convention) but again, it's simple and easy to read. A nice little tidbit you pick up.

In doing this, my mad ReSharper skills got the best of me. Normally I would start with the CreateSUT method, which in this case returns an ICalculator by instantiating a new Calculator class. Of course there are no classes or interfaces by this name so there are two options here. One is to write your test and worry about the creation later, the other is to quickly create the implementation. At some point you're going to have to create it anyways in order to compile, but I like to leave that until the last step.

Under ReSharper 2.x you could write your test line by writing CreateSUT() then press CTRL+ALT+V (introduce variable). However since ReSharper 2.5 (and it's still there in 3.x) you can't do this. ReSharper can't create the ICalculator instance (in memory) in order to walk through the method table (which would give you intellisense). So the simple thing is to write the CreateSUT() method and just whack ALT+ENTER a few times to create the class and interface.
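For reference, here's a minimal sketch of what the generated interface and class could end up looking like once you fill in the body. The Add signature is inferred from the tests above; the implementation is just my assumption of the simplest thing that would make them pass.

```csharp
// Interface the tests program against (shape inferred from the calls in the tests above).
public interface ICalculator
{
    int Add(int firstNumber, int secondNumber);
}

// Simplest implementation that would satisfy the row tests (an assumption, not JP's code).
public class Calculator : ICalculator
{
    public int Add(int firstNumber, int secondNumber)
    {
        return firstNumber + secondNumber;
    }
}
```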

Thread safe initialization using a delegate

I have to say that I never looked into how to initialize event handlers in a thread-safe way. I've never had to do it in the past (I don't work with events and delegates a lot) but this is a great tip. If you need to initialize an event handler but do it in a thread-safe way here you go:

private EventHandler<CustomTimerElapsedEventArgs> subscribers = delegate { };

It's the simple things in life that give me a warm and fuzzy each day.
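To show why this helps, here's a rough sketch of a class built around that one line. Because the field starts out holding an empty anonymous delegate, it's never null, so raising the event doesn't need the usual null check (and there's no window where the last subscriber can unsubscribe between the check and the call). The CustomTimer class, the Elapsed event, the lock object and the empty CustomTimerElapsedEventArgs type are all my own stand-ins for illustration, not code from the course.

```csharp
using System;

// Hypothetical args type standing in for the one used in the line above.
public class CustomTimerElapsedEventArgs : EventArgs
{
}

// Hypothetical class showing the empty-delegate initialization in context.
public class CustomTimer
{
    private readonly object mutex = new object();

    // Starting with an empty delegate means this field is never null.
    private EventHandler<CustomTimerElapsedEventArgs> subscribers = delegate { };

    public event EventHandler<CustomTimerElapsedEventArgs> Elapsed
    {
        add { lock (mutex) { subscribers += value; } }
        remove { lock (mutex) { subscribers -= value; } }
    }

    public void RaiseElapsed()
    {
        // No "if (subscribers != null)" guard required; with no real
        // subscribers this just calls the empty delegate.
        subscribers(this, new CustomTimerElapsedEventArgs());
    }
}
```

Calling RaiseElapsed() on a fresh CustomTimer with nobody subscribed is a harmless no-op rather than a NullReferenceException.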

Event Aggregators and Declarative Events

We spent the better part of the day looking at events, delegates, aggregators and whatnot. This is apparently a new part of the course that he's just added and hey, that's what Agile is about. Adapting to change.

Anyways, as we dug into it I realized how little I knew about events and how dependent I was on the framework to help me with those object.EventName += ... snippets I would always write. Oh how wrong that was as we got into loosely coupled event handlers (all done without dynamic proxies, which comes later). It's pretty slick as you can completely decouple your handlers and this is a good thing. For example, here's a simple test that just creates an aggregator, registers a subscriber, and checks to see if the event fired.

First the test:

    [Test]
    public void ShouldBeAbleToRegisterASubscriberToANamedEvent()
    {
        IEventAggregator aggregator = CreateSUT();
        aggregator.RegisterSubscriber<EventArgs>("SomethingHappened", SomeHandler);
        eventHandlerList["SomethingHappened"].DynamicInvoke(this, EventArgs.Empty);
        Assert.IsTrue(eventWasFired);
    }

Here's the handler that records that the event was fired:

    private void SomeHandler(object sender, EventArgs e)
    {
        eventWasFired = true;
    }

And here's part of the aggregator class which simply manages subscribers in an EventHandlerList:

    public class EventAggregator : IEventAggregator
    {
        private EventHandlerList allSubscribers;

        public EventAggregator(EventHandlerList allSubscribers)
        {
            this.allSubscribers = allSubscribers;
        }

        public void RegisterSubscriber<ArgType>(string eventName, EventHandler<ArgType> handler)
            where ArgType : EventArgs
        {
            allSubscribers.AddHandler(eventName, handler);
        }

        public void RaiseEvent<ArgType>(string eventName, object sender, ArgType empty) where ArgType : EventArgs
        {
            EventHandler<ArgType> handler = (EventHandler<ArgType>) allSubscribers[eventName];
            handler(sender, empty);
        }

        public void RegisterEventOnObject<ArgType>(string globalEventName, object source, string objectEventName)
            where ArgType : EventArgs
        {
        }
    }

Again, basic stuff, but it lets you decouple your events and handlers so they don't have intimate knowledge of each other and this is a good thing.

A very good thing.
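To make the decoupling a bit more concrete, here's a self-contained sketch of a publisher and a subscriber that only share an aggregator and an event name. It's not the class from the course: SimpleEventAggregator is my own compact stand-in that swaps the EventHandlerList for a Dictionary, and the Publisher and Subscriber classes are made up for illustration.

```csharp
using System;
using System.Collections.Generic;

// Compact stand-in for the aggregator above (assumption: a Dictionary
// keyed by event name replaces the EventHandlerList).
public class SimpleEventAggregator
{
    private readonly Dictionary<string, Delegate> subscribers = new Dictionary<string, Delegate>();

    public void RegisterSubscriber<ArgType>(string eventName, EventHandler<ArgType> handler)
        where ArgType : EventArgs
    {
        Delegate existing;
        subscribers.TryGetValue(eventName, out existing);
        // Delegate.Combine copes with a null first argument.
        subscribers[eventName] = Delegate.Combine(existing, handler);
    }

    public void RaiseEvent<ArgType>(string eventName, object sender, ArgType args)
        where ArgType : EventArgs
    {
        Delegate found;
        if (subscribers.TryGetValue(eventName, out found))
        {
            // Throws InvalidCastException if handlers were registered with a different ArgType.
            ((EventHandler<ArgType>) found)(sender, args);
        }
    }
}

// Hypothetical publisher: knows the aggregator and an event name, nothing else.
public class Publisher
{
    private readonly SimpleEventAggregator aggregator;

    public Publisher(SimpleEventAggregator aggregator)
    {
        this.aggregator = aggregator;
    }

    public void DoSomething()
    {
        aggregator.RaiseEvent("SomethingHappened", this, EventArgs.Empty);
    }
}

// Hypothetical subscriber: never sees the Publisher type at all.
public class Subscriber
{
    public int TimesNotified;

    public Subscriber(SimpleEventAggregator aggregator)
    {
        aggregator.RegisterSubscriber<EventArgs>("SomethingHappened", OnSomethingHappened);
    }

    private void OnSomethingHappened(object sender, EventArgs e)
    {
        TimesNotified++;
    }
}
```

Neither class references the other, so either side can be swapped out or tested on its own. The price is that the event name is a magic string both sides have to agree on.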

BTW, as we extended this we sort of built CAB-like functionality on the first day and in a few hours (only event publishers and subscribers though). We just added two attribute classes to handle publishers and subscribers (rather than subscribing explicitly). It wasn't anywhere near as complete as Jeremy Miller's excellent Build your own CAB series, but nonetheless it was a simple approach to a common problem and for my puny brain, I like simple.

It was fun and appropriate as the team is using CAB so the guys in the room are probably better positioned to understand CAB's EventBroker now.

Other Goodies and Tidbits

JP uses a nifty macro (that I think I'll abscond with and try out myself) where he types the test name in plain English but with spaces like so:

Should be able to respond to events from framework timer

Then he highlights the text and runs the macro which replaces all spaces with underscores:

Should_be_able_to_respond_to_events_from_framework_timer

I'm indifferent about underscores in method names and it's a no-no in production code, but for test code I think I can live with it. It reads well and looks good on the screen. Using a little scripting, reflection, or maybe NDepend I could spit out a nice HTML page (reversing the underscores back to spaces) and form complete sentences from my test names. This can be a good device to use with business users or other developers as you're trying to specify intent for the tests. I have to admit it beats the heck out of this:

ShouldBeAbleToRespondToEventsFromFrameworkTimer

Doesn't it?
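The reflection idea could be sketched something like this. It's a minimal take that only produces the sentences (no HTML), and to stay free of any test framework reference it lists every public instance method declared on the fixture rather than filtering on a [Test] attribute. TestNameReporter and FrameworkTimerTests are hypothetical names I made up for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

public static class TestNameReporter
{
    // Turns "Should_be_able_to" back into "Should be able to".
    public static string ToSentence(string methodName)
    {
        return methodName.Replace('_', ' ');
    }

    // Returns one sentence per public instance method declared on the fixture type.
    public static List<string> DescribeFixture(Type fixtureType)
    {
        List<string> sentences = new List<string>();
        BindingFlags flags = BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly;
        foreach (MethodInfo method in fixtureType.GetMethods(flags))
        {
            sentences.Add(ToSentence(method.Name));
        }
        return sentences;
    }
}

// Hypothetical fixture, used only to have something to reflect over.
public class FrameworkTimerTests
{
    public void Should_be_able_to_respond_to_events_from_framework_timer()
    {
    }
}
```

Feeding typeof(FrameworkTimerTests) to DescribeFixture gives back the test name as a plain sentence, which you could then drop into whatever report format you like.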

Wrap up

The first day has been a whirlwind for the team as they're just soaking everything in and making sense of it. For me, I'm focusing on using my keyboard more and getting more ReSharper shortcuts embedded into my skull. I've now got MbUnit to go plow through, check out the other fixtures and see what makes it tick and take the plunge from NUnit if it makes sense (which I think it does as I can still use my beloved TestDriven.NET with MbUnit as well!).

More Nothin

JP runs this 5-day intensive course, which stretches people to think outside the box of how they typically program and delve into realms they have potentially not even thought about yet, about once per month. For the next little while (he's a busy dude) he's going to be a travelin' dude. In October he'll be in New York City. In September he'll be in London, England, and other locations are booked up into the new year.

If you want to find out more details about the course itself, check out the details here and be sure to get on board with the master.

-

Project Structure - By Artifact or Business Logic?

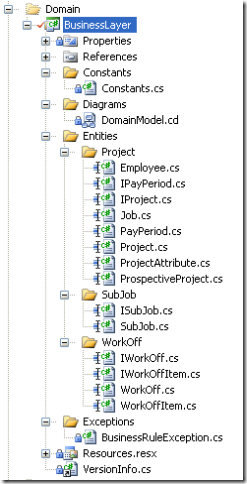

We're currently at a crossroads about how to structure projects. On one hand we started down the path of putting classes and files into folders that made sense according to programming speak. That is, Interfaces; Enums; Exceptions; ValueObjects; Repositories; etc. Here's a sample of a project with that layout:

After some discussion, we thought it would make more sense to structure classes according to the business constructs they represent. In the above example, we have a Project.cs in the DomainObject folder; an IProject.cs interface in the Interfaces folder; and a PayPeriod.cs value object (used to construct a Project object) in the ValueObjects folder. Additional objects would be added so maybe we would have a Repository folder which would contain a ProjectRepository.

Using a business aligned structure, it might make more sense to structure it according to a unit of work or use case. So everything you need for say a Project class (the class, the interface, the repository, the factory, etc.) would be in the same folder.

Here's the same project as above, restructured to put classes and files into folders (which also means namespaces as each folder is a namespace in the .NET world) that make sense to the domain.
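In namespace terms, the two options might look roughly like the sketch below. The class names come from the discussion above, but the root namespaces (ByArtifact and ByDomain), the Projects folder name, and the empty class bodies are placeholders I made up so both layouts can sit side by side in one file.

```csharp
// Option 1: folders (and namespaces) named after the kind of artifact.
namespace ByArtifact.Interfaces
{
    public interface IProject { }
}

namespace ByArtifact.ValueObjects
{
    public class PayPeriod { }
}

namespace ByArtifact.DomainObject
{
    public class Project : ByArtifact.Interfaces.IProject { }
}

namespace ByArtifact.Repository
{
    public class ProjectRepository { }
}

// Option 2: everything a Project needs lives in one business-aligned namespace.
namespace ByDomain.Projects
{
    public interface IProject { }

    public class PayPeriod { }

    public class Project : IProject { }

    public class ProjectRepository { }
}
```

With the first layout, working on Project means touching four namespaces; with the second, it's a single using directive.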

It may seem moot. Where do I put files in a solution structure? But I figured I would ask out there. So the question is, how do you structure your business layer and its associated classes? Option 1 or Option 2 (or maybe your own structure)? What are the pros and cons to each, or in the end, does it matter at all?